While reviewing the Kubernetes infrastructure of various organizations, I have found that container resources (Requests and Limits) are often misconfigured, resulting in node failures and unstable Kubernetes clusters. It’s very important to allocate optimum resources to your deployments in order to guarantee their stability and SLA.

Requests (Guaranteed Resources)

Requests are guaranteed resources that Kubernetes reserves for the container on a node. If no node has enough free resources to satisfy the Requests, the pod is not scheduled and stays in a Pending state until the required resources become available (for example, when the cluster autoscaler adds a node).

Increasing or decreasing the Request value has direct implications for your cloud infrastructure cost: the more you request for a container, the fewer pods can be scheduled per node.

Limits (Maximum Resources)

Limits are the maximum resources that a container can use. If the container tries to exceed its memory limit, it is forcefully killed with the status OOMKilled; if it tries to exceed its CPU limit, it is throttled rather than killed. The resources mentioned in limits are not guaranteed to be available to the container; they may or may not be available, depending on the resource allocation on the node.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.14.2
            resources:
              # Maximum the container is allowed to use
              limits:
                memory: 200Mi
                cpu: 1
              # Amount reserved for the container at scheduling time
              requests:
                memory: 100Mi
                cpu: 100m
            ports:
            - containerPort: 80

Consider the resources section of the Deployment above. The container spec requests 100m of CPU and 100Mi of memory. This means that 100m of CPU and 100Mi of memory will be reserved for this container on whichever node it is scheduled on.

    requests:
      memory: 100Mi
      cpu: 100m

However, if you check the limits section, it sets 1 CPU (or 1000m CPU) and 200Mi of memory, which means that this container can use up to 1000m CPU and 200Mi memory. If it tries to use more CPU than that, it is throttled; if it tries to use more memory than that, it is OOMKilled.

    limits:
      memory: 200Mi
      cpu: 1

What if no Resources are specified?

It is strongly recommended to always specify CPU and memory resources in the container specs of your Kubernetes deployments. If no requests are specified, the Kubernetes scheduler does not know how much each pod needs and can keep stuffing pods onto one node without triggering any autoscaling, which will eventually leave that node overloaded and unstable. Your app’s performance will suffer as its containers compete for CPU and memory on the overloaded node.
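One way to guard against containers that ship without any resources is a namespace-level LimitRange, which fills in defaults when a container omits them. The following is a minimal sketch, not a recommendation: the object name, the namespace, and all values are assumptions chosen to match the nginx example above.

```yaml
# Illustrative defaults only; tune the values to your own workloads.
apiVersion: v1
kind: LimitRange
metadata:
  name: default-container-resources   # hypothetical name
  namespace: my-app                   # hypothetical namespace
spec:
  limits:
  - type: Container
    defaultRequest:    # applied when a container omits requests
      cpu: 100m
      memory: 100Mi
    default:           # applied when a container omits limits
      cpu: 200m
      memory: 200Mi
```

Containers that declare their own requests and limits keep them; the defaults only apply to the fields a container leaves out.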

Tradeoffs: Stability vs Cost

If you specify too few resources or no resources in the container specs of your Kubernetes Deployments, you risk rendering your nodes unstable. If you specify too many resources, you’ll incur more cost and your nodes may be left underutilized.

It’s important to specify the correct resources for your app depending on its criticality, performance, and historic resource usage patterns. There’s no one-size-fits-all formula when it comes to specifying the resource specs of your Kubernetes deployment’s containers. You’ll arrive at the sweet spot after adjusting your container’s specs multiple times.

However, there are some pointers to keep in mind while adjusting the specs.

1. Request and Limits proportion

Always set your Limit values in proportion with the Request values. For extreme stability, the Limit value should not be greater than 110% of the Request value, giving your pod scope for occasional spikes only.
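As a rough illustration of the 110% guideline, a resources block could look like the sketch below. The absolute numbers are assumptions picked to keep the arithmetic easy; only the ratio between limits and requests matters here.

```yaml
resources:
  requests:
    cpu: 500m
    memory: 500Mi
  limits:
    cpu: 550m       # 110% of the 500m request
    memory: 550Mi   # 110% of the 500Mi request
```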

2. Startup Resource Requirements

Some applications, such as Java apps, need more resources during startup and fewer once they’re running. In such cases you can consider raising the Limit values up to 130-140% of the Request values, so that your application can start successfully while the Request value stays low (direct implication on cost, remember?).

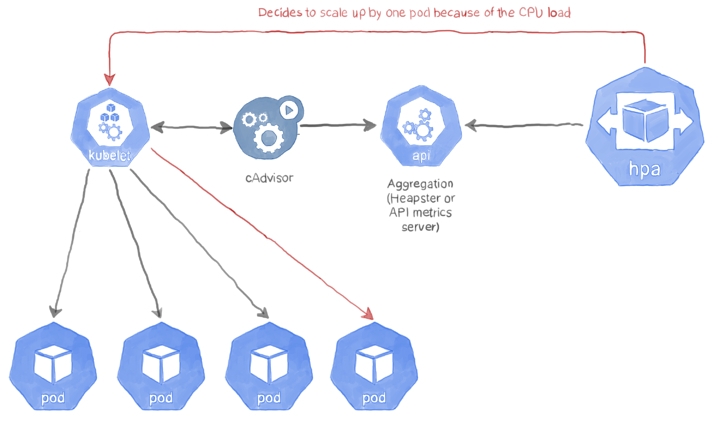
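For a startup-heavy application, the same idea with more headroom might be sketched as follows. The values are hypothetical and not measured from any real Java service; they only show limits sitting at 130-140% of the requests.

```yaml
resources:
  requests:
    cpu: 500m
    memory: 1000Mi
  limits:
    cpu: 700m        # 140% of the 500m request, headroom for startup
    memory: 1300Mi   # 130% of the 1000Mi request
```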

3. Leverage Autoscaling / HPA

Autoscaling, along with the ability to create and run multiple replicas of a container in a short time, is one of the advantages of Kubernetes. Always consider running your application as multiple smaller replicas (smaller in terms of resources). This keeps your application’s total resource consumption at its lowest when load is minimal, and lets it scale up quickly (produce more replicas to share the load) as the load increases.

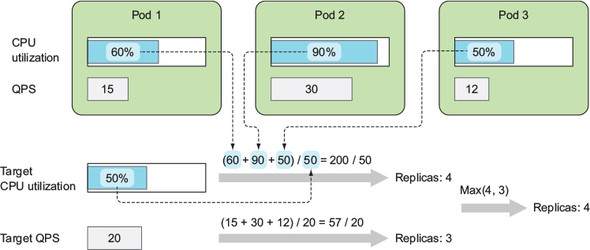

4. HPA Configuration

Container resources, when tuned properly along with the HPA configuration, give tremendous stability to the cluster while keeping cost to a minimum. For example, you can reduce the requested resources and configure the HPA to trigger a scale-up at 60% CPU utilization by setting the target CPU utilization to 60% (the targetCPUUtilizationPercentage field in the autoscaling/v1 API). See How to configure HPA on a Kubernetes Cluster.
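A 60% CPU target for the nginx Deployment shown earlier could be expressed roughly like this, using the autoscaling/v2 API. The HPA name and the replica bounds are assumptions made for illustration.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-hpa              # hypothetical name
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deployment     # the Deployment from the example above
  minReplicas: 2               # assumed lower bound
  maxReplicas: 10              # assumed upper bound
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 60   # scale up above 60% average CPU
```

Note that utilization is measured as a percentage of the container's CPU request, so lowering the request also lowers the absolute CPU usage at which the HPA starts adding replicas.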

5. Selecting the Right Instance Type

If your applications are memory intensive and your CPU-to-memory allocation ratio is 1 core : 6 GB, it does not make sense to use a c5.2xlarge instance with 8 vCPUs and 16 GB of memory. The autoscaler will trigger a scale-up as soon as 2 replicas of such a deployment are scheduled on the node, leaving 6 vCPUs wasted and the node underutilized. Instead, you would want to use an r4.2xlarge instance with 8 vCPUs and 61 GB of memory, which can accommodate approximately 8 replicas of the same configuration on one node.
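To make the arithmetic concrete: at 6 GB per replica, a 16 GB node fits 2 replicas by memory (using 2 of its 8 vCPUs), while a 61 GB node fits 10 by memory and is capped at 8 by its 8 vCPUs, before accounting for resources reserved for the system. A hedged sketch of steering such a workload onto the memory-optimized instance type with a nodeSelector follows; the container name and image are hypothetical, and it assumes your nodes carry the well-known `node.kubernetes.io/instance-type` label.

```yaml
# Fragment of a Deployment spec; only the scheduling-related fields are shown.
spec:
  template:
    spec:
      nodeSelector:
        node.kubernetes.io/instance-type: r4.2xlarge
      containers:
      - name: memory-heavy-app                 # hypothetical name
        image: example/memory-heavy-app:1.0    # hypothetical image
        resources:
          requests:
            cpu: 1          # 1 core : 6 GB ratio from the text
            memory: 6Gi
          limits:           # set equal to requests here for simplicity
            cpu: 1
            memory: 6Gi
```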