In the rapidly evolving landscape of software development, containerization has become a critical technology for deploying applications. Container orchestration tools help manage these containers' lifecycle, and Kubernetes has emerged as a leader in this space, thanks to its robust API, scalability, and comprehensive functionality. But what makes Kubernetes stand out, and why do many organizations choose it for container orchestration? This article delves into the purpose, advantages, distinctions from Docker, importance in DevOps, and cost benefits of using Kubernetes, along with its use cases in various cloud environments.

The Purpose of Kubernetes

Kubernetes, originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF), is an open-source platform designed to automate deploying, scaling, and operating application containers. It aims to reduce the complexity of managing large numbers of containers, especially in a microservices architecture, by providing a framework for running distributed systems resiliently. With features such as self-healing, rollbacks, and declarative desired-state configuration, Kubernetes makes large-scale deployments more manageable and improves visibility into the state of the whole deployment.
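
Desired-state configuration and self-healing both rest on a reconciliation loop: a controller repeatedly compares the state you declared with the state it observes, then acts to close the gap. The following is a minimal in-memory sketch of that idea. It assumes a hypothetical `ReplicaController` class that tracks pod names and is not Kubernetes source code or its client API.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class ReplicaController:
    """Hypothetical toy controller that keeps `running` matched to `desired`.

    Illustrates the reconciliation-loop idea only; it is not Kubernetes code.
    """

    desired: int
    running: set[str] = field(default_factory=set)
    _ids: itertools.count = field(default_factory=itertools.count, init=False, repr=False)

    def reconcile(self) -> list[str]:
        """Compare desired vs. observed state and return the actions taken."""
        actions = []
        # Too few pods (for example, one crashed): create replacements.
        while len(self.running) < self.desired:
            name = f"pod-{next(self._ids)}"
            self.running.add(name)
            actions.append(f"create {name}")
        # Too many pods (for example, desired was lowered): delete the surplus.
        while len(self.running) > self.desired:
            name = sorted(self.running)[-1]
            self.running.remove(name)
            actions.append(f"delete {name}")
        return actions


controller = ReplicaController(desired=3)
print(controller.reconcile())        # ['create pod-0', 'create pod-1', 'create pod-2']
controller.running.discard("pod-1")  # simulate a pod failure
print(controller.reconcile())        # ['create pod-3'] (self-healing)
```

Real controllers watch the API server for changes instead of being called by hand, but the compare-and-correct structure is the same.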

Challenges with Previous Technologies

Managing containers at scale presents various challenges. Before Kubernetes, administrators and developers had limited tools for container management, which made it hard to provision and scale applications and to keep them in their desired state. Kubernetes introduces a control plane that abstracts these management tasks, making it easier to deploy and manage applications across environments ranging from on-premises data centers to cloud platforms.

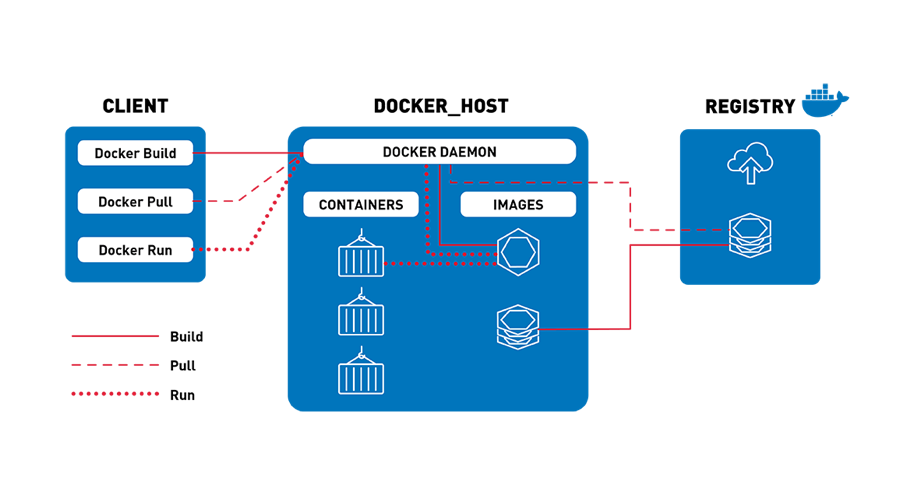

Containers became popular because they simplified going from application development to deployment without having to worry about portability or reproducibility. Developers can package an application and all its dependencies, libraries, and configuration files needed to execute the application into a container image. A container is a runnable instance of an image. Container images can be pulled from a registry and deployed anywhere the container runtime is installed: on your laptop, servers on-premises, or in the cloud.

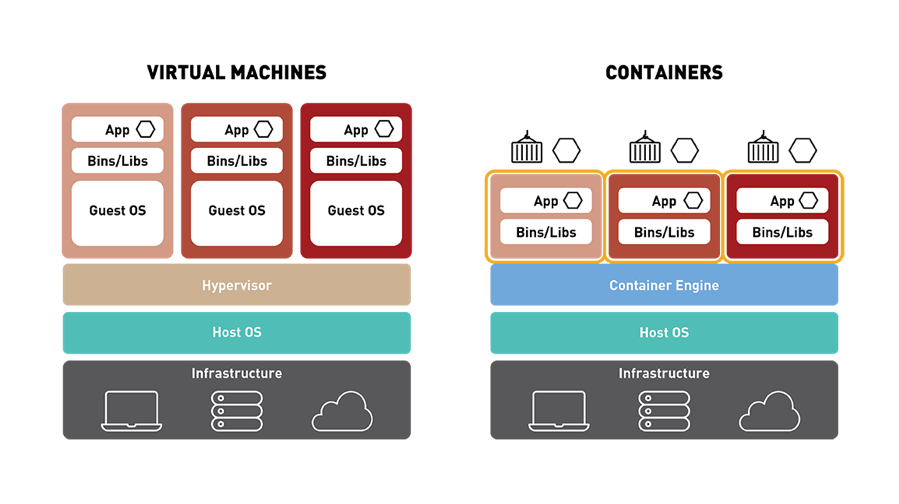

Compared to virtual machines, containers offer similar resource isolation benefits but are lighter weight, because they virtualize the operating system instead of the hardware. As a result, they are more portable and efficient, take up less space, use far fewer system resources, and can start in seconds.

Managing containers in production is challenging. As the container market grew and more workloads moved to production-grade containers, it became clear that cluster administrators needed something beyond a container engine. Key capabilities were missing, such as:

- Using multiple containers with shared resources

- Monitoring running containers

- Handling dead containers

- Rescheduling containers to improve utilization

- Autoscaling container instances to handle the load

- Making the container services easily accessible

- Connecting containers to a variety of external data sources

Advantages of Kubernetes

Kubernetes not only addresses the need for efficient container management but also introduces capabilities essential for modern software deployment. These include support for automated continuous delivery (CD) pipelines, multi-cloud and hybrid cloud strategies, and a consistent platform so applications run the same way on any Kubernetes cluster, whatever environment hosts it. The Kubernetes API server exposes cluster resources through a common API, which simplifies integration with external systems and adds to the platform's flexibility.

Containers paved the way for cloud-native systems, in which services are implemented as small, loosely coupled groups of containers. This created an enormous opportunity to build and adopt new services that make containers easier, faster, and more productive to use. Since Google open-sourced it in 2014, Kubernetes has become the de facto standard for container orchestration. It harnesses the power of containers while simplifying the management of services and machines in a cluster.

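A Pod is a logical grouping of one or more containers that are scheduled together onto the same node, with shared storage and network resources and a specification for how to run them. Containers in a Pod share a network namespace (a single IP address and port space) and can mount the same volumes, so tightly coupled processes can cooperate as if they were running on one host.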
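
To make the shared-resources idea concrete, here is a sketch of a two-container Pod manifest written as a Python dictionary (Kubernetes accepts manifests as JSON as well as YAML). The Pod name, container names, images, and paths are hypothetical; the field names follow the core `v1` Pod schema. The helper `shared_volumes` is also hypothetical and simply reports which volumes every container mounts.

```python
import json

# Hypothetical Pod: an nginx web server plus a helper container that writes the
# page nginx serves. Both mount the same emptyDir volume, and because they share
# the Pod's network namespace they could also reach each other over localhost.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-helper"},
    "spec": {
        "volumes": [{"name": "shared-data", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "volumeMounts": [
                    {"name": "shared-data", "mountPath": "/usr/share/nginx/html"}
                ],
            },
            {
                "name": "content-writer",
                "image": "busybox:1.36",
                "command": [
                    "sh", "-c",
                    "while true; do date > /data/index.html; sleep 5; done",
                ],
                "volumeMounts": [{"name": "shared-data", "mountPath": "/data"}],
            },
        ],
    },
}


def shared_volumes(manifest: dict) -> set[str]:
    """Return the names of volumes mounted by every container in the Pod."""
    mounts = [
        {m["name"] for m in c.get("volumeMounts", [])}
        for c in manifest["spec"]["containers"]
    ]
    return set.intersection(*mounts) if mounts else set()


print(shared_volumes(pod))        # {'shared-data'}
print(json.dumps(pod, indent=2))  # JSON manifest text
```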

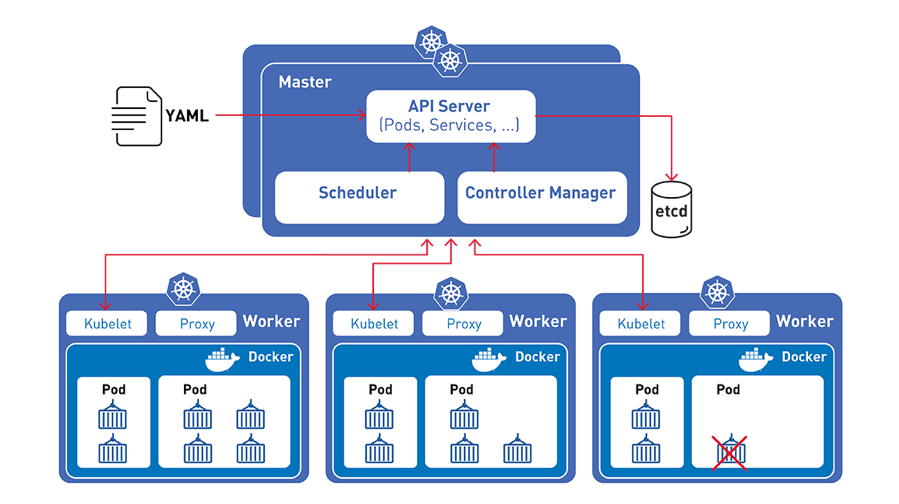

Kubernetes clusters abstract their underlying computing resources, allowing users to deploy workloads to the cluster as a whole rather than to a particular server. A Kubernetes cluster consists of a control plane (historically called the master node) that manages the cluster, and one or more worker nodes on which containerized applications run inside Pods.
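
Deploying "to the cluster" works because the kube-scheduler picks a node for each Pod in two phases: filtering out nodes that cannot run it, then scoring the nodes that remain. The sketch below mimics that flow with a hypothetical `Node` type and `schedule` function that consider only CPU and memory requests. Its scoring rule, which prefers the node left with the most spare capacity, is an assumption for illustration and only loosely resembles the scheduler's default least-allocated scoring.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Hypothetical worker node with its remaining allocatable resources."""

    name: str
    cpu_free: float  # CPU cores still available
    mem_free: float  # GiB of memory still available


def schedule(cpu_req: float, mem_req: float, nodes: list[Node]) -> Optional[str]:
    """Place one Pod using a simplified filter-then-score approach."""
    # Filtering: keep only nodes that can fit the Pod's requests.
    feasible = [n for n in nodes if n.cpu_free >= cpu_req and n.mem_free >= mem_req]
    if not feasible:
        return None  # no node fits; a real Pod would stay Pending
    # Scoring (assumed rule): prefer the most spare CPU, then memory, afterwards.
    best = max(feasible, key=lambda n: (n.cpu_free - cpu_req, n.mem_free - mem_req))
    best.cpu_free -= cpu_req
    best.mem_free -= mem_req
    return best.name


nodes = [Node("node-a", 4, 8), Node("node-b", 1, 2), Node("node-c", 2, 4)]
print([schedule(1.5, 3, nodes) for _ in range(3)])  # ['node-a', 'node-a', 'node-c']
print(schedule(3, 1, nodes))                        # None: no node has 3 free cores
```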

Kubernetes architecture enables:

- A single administrator to manage thousands of containers running simultaneously

- Workload portability, with containers orchestrated consistently across on-premises deployments, public and private clouds, and hybrid deployments that span both

Kubernetes offers numerous advantages that make it an attractive option for container orchestration:

- Scalability: Kubernetes can automatically scale applications based on resource usage like CPU and memory, or other metrics, making it highly efficient for managing workloads.

- High Availability: It helps keep applications available by automatically replacing instances that fail or become unresponsive.

- Portability: Kubernetes runs on public clouds (Amazon Web Services, Microsoft Azure, Google Cloud), private clouds, and on-premises hardware, making applications portable across different infrastructures.

- Resource Efficiency: By packing containers efficiently, Kubernetes maximizes resource utilization, which can lead to cost savings on infrastructure.

- Ecosystem: Being part of the Cloud Native Computing Foundation, Kubernetes has a vast ecosystem of tools and community support, enhancing its capabilities and ease of adoption.
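
Automatic scaling on CPU or other metrics is handled by the HorizontalPodAutoscaler, whose core rule is documented as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change made when that ratio is within a tolerance (0.1 by default) of 1.0. The function below is a simplified sketch of that rule. The `min_replicas` and `max_replicas` defaults are hypothetical, and stabilization windows, scaling policies, and unready Pods are ignored.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,   # hypothetical lower bound
    max_replicas: int = 10,  # hypothetical upper bound
    tolerance: float = 0.1,
) -> int:
    """Simplified HorizontalPodAutoscaler replica calculation."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: no change
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured replica range.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6
print(desired_replicas(4, 90, 60))  # 6
# 6 replicas averaging 30% CPU against a 60% target: ceil(6 * 0.5) = 3
print(desired_replicas(6, 30, 60))  # 3
```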

Kubernetes vs. Docker

A common confusion arises between Kubernetes and Docker, primarily because both operate in the containerization space. However, they serve different but complementary purposes. Docker is a tool for building, distributing, and running applications in containers, while Kubernetes is a system for orchestrating those containers. Docker is primarily used to run containers on individual hosts; when containers must be managed across many hosts, Kubernetes provides the orchestration and management capabilities needed to deploy applications at scale.

While Docker containers revolutionized how applications are packaged and deployed, Kubernetes builds on that innovation with a platform for orchestrating containers at scale, including auto-scaling, load balancing, and high availability across clusters.

Kubernetes' Importance in DevOps

In DevOps, Kubernetes bridges the gap between development and operations, streamlining workflows and promoting efficiency. By automating deployment processes and supporting continuous integration and continuous deployment (CI/CD) practices, Kubernetes plays a crucial role in modern software development, both on managed services from cloud providers such as AWS, Azure, and GCP and on Kubernetes distributions such as Red Hat OpenShift.

Why Kubernetes Is Considered the Best Container Orchestration Tool

Kubernetes is often considered the best container orchestration tool because of its flexibility, scalability, and strong community support. Its ability to manage complex distributed systems across varied environments aligns well with modern application development and deployment needs. Its comprehensive feature set, including service discovery, load balancing, secret management, and storage orchestration, addresses the challenges of running applications at scale, making it a go-to choice for many organizations.

Backed by the CNCF community, Kubernetes suits complex, distributed systems across cloud, on-premises, and hybrid setups. Self-healing and rolling updates help minimize downtime, and a Deployment can be rolled back to a previous revision when a release goes wrong, which makes Kubernetes a preferred choice for large-scale deployments.
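
Rolling updates replace old Pods with new ones in steps bounded by two Deployment settings: `maxSurge` (how many extra Pods may exist during the update) and `maxUnavailable` (how many Pods may be missing), both 25% by default. The hypothetical `rolling_update` function below simulates those bounds using absolute counts, assuming new Pods become ready instantly; the real Deployment controller also waits for readiness before continuing.

```python
def rolling_update(replicas: int, max_surge: int, max_unavailable: int) -> list[tuple[int, int]]:
    """Return (old, new) Pod counts after each step of a simplified rolling update.

    Hypothetical simulation: counts are absolute and new Pods are ready at once.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while new < replicas or old > 0:
        # Scale up new Pods without exceeding replicas + max_surge in total.
        headroom = max(0, replicas + max_surge - (old + new))
        new = min(replicas, new + headroom)
        # Scale down old Pods while keeping at least replicas - max_unavailable.
        removable = max(0, (old + new) - (replicas - max_unavailable))
        old = max(0, old - removable)
        steps.append((old, new))
    return steps


print(rolling_update(4, 1, 1))  # [(4, 0), (2, 1), (0, 3), (0, 4)]
```

If a new revision misbehaves, `kubectl rollout undo` moves the Deployment back to its previous revision, which is rolled out through the same stepwise process.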

Important Note: A successful Kubernetes deployment requires a thorough understanding of container management principles and the ability to configure and manage the cluster's resources efficiently.

Cost Benefits of Using Kubernetes

Using Kubernetes for container orchestration can lead to significant cost benefits:

- Improved Resource Utilization: Kubernetes efficiently manages resources, which means you can do more with less hardware.

- Reduced Overhead: Automating deployment, scaling, and operations reduces the need for manual intervention, lowering operational costs.

- Flexibility in Cloud Provider Choice: Kubernetes' portability helps organizations reduce vendor lock-in and choose the most cost-effective cloud service provider, or move workloads to cheaper environments.

- Scalability Without Complexity: Kubernetes can automatically scale applications up or down with demand. Combined with node autoscaling on pay-as-you-go infrastructure, this lets organizations pay for capacity much closer to what they actually use instead of maintaining idle headroom.
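
To see how tighter packing translates into fewer machines, the sketch below applies first-fit decreasing, a classic bin-packing heuristic, to a hypothetical set of CPU requests and 4-core nodes. This is not the algorithm Kubernetes uses (its scheduler places Pods one at a time as they arrive); it only illustrates the capacity-planning arithmetic, and every number in it is made up.

```python
def first_fit_decreasing(requests: list[float], node_capacity: float) -> list[list[float]]:
    """Pack CPU requests onto equally sized nodes with first-fit decreasing."""
    nodes: list[list[float]] = []  # each inner list holds the requests on one node
    for req in sorted(requests, reverse=True):
        if req > node_capacity:
            raise ValueError(f"request {req} exceeds node capacity {node_capacity}")
        for node in nodes:
            if sum(node) + req <= node_capacity:
                node.append(req)  # fits on an existing node
                break
        else:
            nodes.append([req])  # no existing node fits: add a new one
    return nodes


# Hypothetical workload: eight services with these CPU requests (cores).
requests = [2, 1.5, 1, 1, 0.5, 0.5, 0.5, 1]
packing = first_fit_decreasing(requests, node_capacity=4)
print(len(packing))  # 2
print(packing)       # [[2, 1.5, 0.5], [1, 1, 1, 0.5, 0.5]]
```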

Conclusion

Kubernetes stands out in the container orchestration landscape for its comprehensive capabilities, scalability, and cost efficiency. Its importance to DevOps practices and its role in modern application deployment strategies make it an indispensable tool for organizations looking to use containerization effectively. As the technology and its ecosystem continue to evolve, Kubernetes is likely to remain at the forefront of container orchestration tools, helping businesses achieve their operational and development goals.