For any organization, developing applications quickly while deploying them efficiently is a priority. Whenever you release a new version of an application, you want to do it with minimal service disruption for your users. DevOps engineers use various deployment strategies to ensure a smooth rollout of new microservice versions. In this blog, you will learn about the deployment strategies for Kubernetes that you can leverage to minimize downtime and roll out application updates seamlessly.

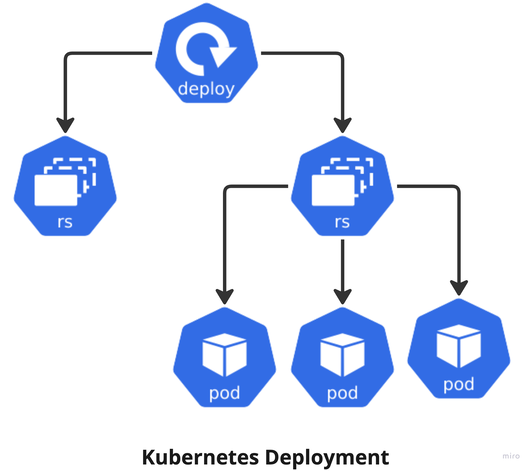

What is a Kubernetes Deployment?

Within Kubernetes, every application runs within a pod. However, pods are ephemeral, which means that they can be destroyed at any point in time. When that happens, the application running within the pod stops as well. Imagine that you had five pods running the same application, and all five were destroyed at 3 a.m. without any mechanism to recreate them.

Kubernetes has the ReplicaSet resource to address the ephemeral nature of pods. A ReplicaSet ensures that a desired number of pods is running at any given point in time. If the number of running pods drops below the desired number, the ReplicaSet controller creates the missing pods through the Kubernetes API, and the kubelet on the assigned node starts them. However, the limitation of a ReplicaSet is that updating applications becomes very tricky.

The Deployment object builds on the ReplicaSet. A Deployment manages ReplicaSets for you and adds functionality that makes it easier to roll out version updates. It enables you to define a deployment strategy that updates the application to a newer version without manual supervision. It also makes it easier to roll back to a previous version in case of any errors in the newer version.
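As a rough illustration of what such an object looks like, here is a minimal Deployment manifest. The name `my-app`, the image `registry.example.com/my-app:1.0.0`, and port 8080 are placeholders chosen for this sketch, not values from a real setup.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app                 # hypothetical name
spec:
  replicas: 5                  # desired number of pods, kept running by the underlying ReplicaSet
  selector:
    matchLabels:
      app: my-app              # must match the pod template labels below
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:1.0.0   # placeholder image
          ports:
            - containerPort: 8080                     # assumed application port
```

Changing the image tag and re-applying the manifest starts a rollout, and `kubectl rollout undo deployment/my-app` returns to the previous revision.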

The below diagram shows the relationship between a pod, a ReplicaSet, and a deployment.

Deployment Strategies

When you release an update of your application, you want to deliver it without interrupting the user experience or causing any downtime. With that being the goal, deleting the old application pods and deploying the new application all at once isn’t the best idea. DevOps teams need to devise a proper deployment strategy that ensures updates are rolled out smoothly without any service downtime.

As you learned earlier, Kubernetes deploys applications using the Deployment object. It comes with two strategies out of the box to roll out application updates:

- Rolling Update Deployment Strategy

- Recreate Deployment Strategy

Apart from these two basic strategies, there are several advanced deployment strategies. When the two basic strategies do not satisfy your business requirements, you can use one of the advanced strategies, which include:

- Blue-Green Deployments

- Canary Deployments

- A/B Testing

- Shadow Deployments

Let’s look at each one of the deployment strategies mentioned above, how they work, and when you might want to use them.

Rolling Updates

When you create a deployment, it uses the rolling update strategy by default. You can define a deployment strategy within the manifest of the deployment resource; if you don’t, the rolling update strategy is used. It works by gradually replacing pods of the previous version of your application with pods of the new version, ensuring that there is no application downtime. The pods are replaced a few at a time. We will shortly look at how to define the number of pods that can be upgraded at once.

A rolling deployment typically waits for new pods to become ready via a readiness check before scaling down the old pods. If a significant issue occurs, the rollout can be paused or rolled back.
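The readiness check mentioned above is configured on the container as a readiness probe. The fragment below is a sketch of how it might look inside a Deployment's pod template; the `/healthz` path, port 8080, and the timing values are assumptions for illustration and should be adapted to your application.

```yaml
# Fragment of a Deployment: goes under spec.template.spec
containers:
  - name: my-app                                     # hypothetical container name
    image: registry.example.com/my-app:2.0.0         # placeholder image
    readinessProbe:
      httpGet:
        path: /healthz          # assumed health endpoint exposed by the application
        port: 8080              # assumed application port
      initialDelaySeconds: 5    # wait before the first probe
      periodSeconds: 10         # probe every 10 seconds
      failureThreshold: 3       # mark the pod not ready after 3 consecutive failures
```

Until the probe succeeds, a new pod is not counted as available, so the rollout does not continue scaling down old pods on its account.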

To configure rollouts, Kubernetes provides two options out of the box:

- Max Surge: The number of pods that can be created above the desired value in the deployment spec. This can either be an absolute number or a percentage. Default: 25%

- Max Unavailable: The number of pods that can be unavailable at any given point in time. This can either be an absolute number or a percentage. Default: 25%

As mentioned above, rolling updates are the default deployment strategy used when you create a Kubernetes deployment object. You can change the Max Surge and Max Unavailable values to configure the level of availability you want for the pods. Within the YAML manifest, the rolling strategy looks as follows:

    strategy:
      type: RollingUpdate
      rollingUpdate:
        maxSurge: 25%
        maxUnavailable: 25%
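
To make the percentages concrete, here is a worked example for a Deployment with 10 replicas (a replica count picked only for illustration). Kubernetes rounds a `maxSurge` percentage up and a `maxUnavailable` percentage down when converting them to pod counts.

```yaml
# Fragment of a Deployment spec; selector and template omitted for brevity
spec:
  replicas: 10
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%         # 25% of 10 = 2.5, rounded up to 3: at most 13 pods exist during the rollout
      maxUnavailable: 25%   # 25% of 10 = 2.5, rounded down to 2: at least 8 pods stay available
```

Setting `maxUnavailable: 0` with a non-zero `maxSurge` keeps full capacity during the rollout at the cost of temporarily running extra pods.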

Use Cases

- It is mostly used when organizations want a simple solution to update any application or roll back to previous changes without allocating any extra resources or performing any complex deployment tasks.

- It is best suited for applications that are smaller in size and can afford downtime in case of any failures.

Recreate

The second deployment strategy that comes with Kubernetes out of the box is the Recreate strategy. Unlike the rolling update strategy, the Recreate strategy involves an abrupt replacement process in which all old pods are terminated first, and only then are the new ones created. Since this strategy deletes every single pod of the deployment in one go, you will face a certain amount of downtime until the new pods are in a ready state.

The Recreate strategy is generally recommended only for development or testing environments, and not for production environments where downtime is unacceptable. You can see the recreate strategy in action in the diagram below.

Similar to the rolling update strategy, recreate is a built-in deployment strategy for the Kubernetes deployment object. To use the recreate strategy, add the following YAML snippet to the deployment manifest:

    strategy:
      type: Recreate

Use Cases

- This deployment strategy is typically only used in development environments where downtime won’t affect any production users.

- The recreate method is best used when you need to run data migrations in between terminating your old code and starting your new code, or when your deployment doesn’t support running version A and version B of your application at the same time.

Blue/Green Deployment

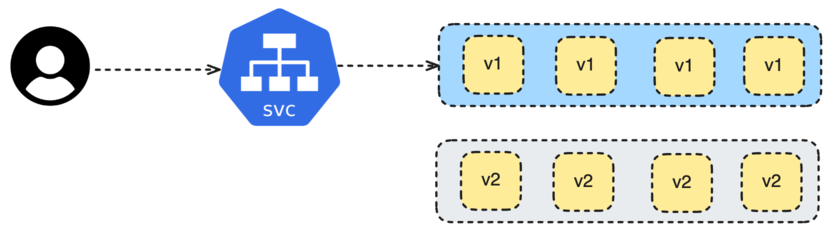

The Blue/Green deployment strategy, also known as the Red/Black strategy, is one of the more advanced deployment strategies. The blue-green strategy involves deploying two versions of the application at the same time. While both versions are deployed, only one of the versions is live i.e. accessible by end users.

Within the blue/green deployment strategy, traffic is routed to the blue deployment while the green deployment is still being deployed. Once the green application is fully deployed, tested, and validated, we can start to route the traffic to the green deployment. We can then either delete the blue deployment or keep it for some time in case we wish to trigger a rollback.

Let’s try to understand this with an example. Let’s say that you currently have v1 of an application running in the cluster, and you want to deploy v2 of the application via the blue/green deployment strategy. The v1 application is the blue application and v2 is the green application. Currently, your service will point to v1 of the application, even after deploying the pods for v2 of the application. Next, you can update the existing service, to direct traffic to v2.

Creating the Blue/Green deployment strategy in Kubernetes requires several considerations. Usually, you will have two deployment objects, one for the blue version and another for the green version of the application. Since both application versions will be running at the same time in the cluster, you have to play around with the services to route the traffic to the correct version.

You can create both deployments using the rolling updates strategy, but attach a load balancer service to only the blue version of the application. Once the green version is validated, you can attach a load balancer to the green deployment and remove the one for the blue.
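One common way to implement this switch is a single Service whose selector names the live version. The sketch below assumes that the two Deployments label their pods with `version: blue` and `version: green`; those labels, the `my-app` name, and the ports are placeholders for illustration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app             # hypothetical Service name
spec:
  type: LoadBalancer
  selector:
    app: my-app
    version: blue          # change to "green" and re-apply to cut traffic over
  ports:
    - port: 80
      targetPort: 8080     # assumed container port
```

Because only the selector changes, rolling back is a matter of setting `version` back to `blue`, as long as the blue Deployment is still running.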

To learn more about the considerations for a blue-green deployment, check out this article for further reading.

Use Cases

- This allows you to live-test the new version of your application without impacting your users. Once your testing is complete, you update the load balancer to send user traffic to the green version of your application.

- A blue/green deployment strategy works well for avoiding API versioning issues because you’re changing the entire application state in one go, but as you need to double your cloud resources to run both versions at the same time, it can be very expensive.

Canary Deployment

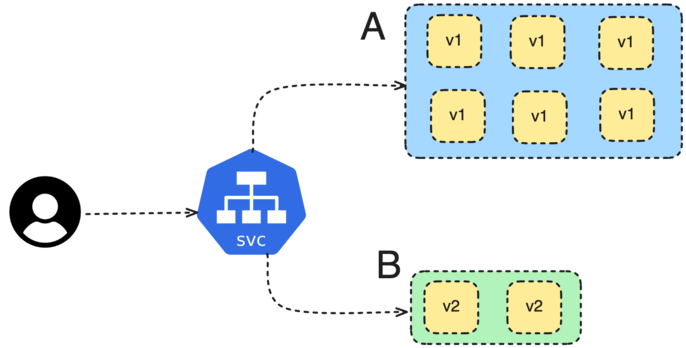

The Canary deployment strategy is a partial rollout that upgrades only a few pods to the newer version of the application. It is similar to the Blue/Green deployment strategy we saw earlier, but the key difference is that canary is a more controlled deployment mode in which the application is delivered progressively.

When an application is deployed via Canary, only a small number of real users get access to the newer version of the application. Imagine that the application has a total of 10 pods. In the beginning, only 3 of these pods are updated to the new version, which means that only a fraction of users (roughly 30%, if traffic is spread evenly across pods) can access it. As time goes on, you update more pods until all 10 pods are running the new version of the application.

A Canary deployment strategy is useful when you want to roll out the updates slowly. This is useful when you want to collect user feedback about the newer release or want to change more things before every single user can access it. Canary deployments are also useful when you want to monitor how the service behaves before it’s available to all your users. This helps resolve any potential bugs or issues.

When implementing a Canary deployment strategy, the first thing to decide is the distribution of traffic you want between the older service and the newer service. While it’s possible to create a canary deployment without using any other tools, it can get tricky to distribute the traffic.
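Without extra tooling, a rough traffic split can be approximated with replica counts: two Deployments share one label that the Service selects on, and the ratio of pods determines the approximate share of requests. The sketch below mirrors the 10-pod example above with 7 stable and 3 canary pods. All names, the `track` label, and the images are hypothetical.

```yaml
# Stable track: 7 of 10 pods run the current version
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-stable
spec:
  replicas: 7
  selector:
    matchLabels:
      app: my-app
      track: stable
  template:
    metadata:
      labels:
        app: my-app
        track: stable
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:1.0.0   # placeholder image
---
# Canary track: 3 of 10 pods run the new version
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-canary
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
      track: canary
  template:
    metadata:
      labels:
        app: my-app
        track: canary
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:2.0.0   # placeholder image
---
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  selector:
    app: my-app        # no "track" label, so pods from both Deployments receive traffic
  ports:
    - port: 80
      targetPort: 8080 # assumed container port
```

The split here is only as fine-grained as the pod count allows, which is one reason an ingress controller or service mesh is often used for precise percentages.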

To understand more about Canary deployments and how they can be implemented, please check out these articles for further reading:

- Basics of Canary Deployment Strategy

- Canary Deployment with NGINX Ingress

- Canary Deployment with Istio and Flagger
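As a brief taste of the NGINX Ingress approach named in the list above, the ingress-nginx controller supports canary annotations on a second Ingress for the same host. The sketch below assumes a primary Ingress for `my-app.example.com` already routes to the stable Service; the host, the Service name `my-app-canary`, and the 10% weight are placeholders.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-app-canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"         # marks this Ingress as the canary
    nginx.ingress.kubernetes.io/canary-weight: "10"    # send about 10% of requests to it
spec:
  ingressClassName: nginx
  rules:
    - host: my-app.example.com          # must match the host of the primary Ingress
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-app-canary     # hypothetical Service in front of the new version
                port:
                  number: 80
```

Raising `canary-weight` step by step shifts more traffic to the new version without changing replica counts.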

Use Cases

- One of the best use cases is when you need real traffic testing of your new versions and have the resources to manage the complex setup.

- It limits the damage caused by a faulty software update and lets you roll that update back quickly and easily.

- It allows you to release software faster. Since it contains the damage caused by a problem to a small subset of users, canary deployments give organizations the freedom to deploy to production constantly, allowing them to remain competitive.

A/B Testing

Within the A/B testing deployment strategy, the newer version of the software is released to only a small group of users. While A/B testing is often confused with canary deployments, the two are not the same. The biggest difference between the two is that the A/B testing strategy is mainly used to test new features in your applications, such as a new design or a new UX.

The main distinction is that the canary strategy is used for actually releasing the updates in batches so that rollbacks are easier to perform in case of any errors. A/B testing, on the other hand, is for measuring how a piece of functionality performs in the application.
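In practice, A/B testing usually routes users by some attribute of the request rather than by a random percentage. The following is a sketch using an Istio VirtualService, assuming Istio is installed and that two Services, `my-app-v1` and `my-app-v2`, front the two versions. The header name `x-experiment-group` and all resource names are hypothetical.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: my-app
spec:
  hosts:
    - my-app
  http:
    # Requests tagged for group "b" go to the version under test
    - match:
        - headers:
            x-experiment-group:
              exact: b
      route:
        - destination:
            host: my-app-v2
    # Everything else goes to the current version
    - route:
        - destination:
            host: my-app-v1
```

How users are assigned to a group (for example, by the frontend or an edge proxy setting the header) is outside the scope of this sketch.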

Use Cases

- A/B deployments allow developers to make continuous changes to the code base and test the impact of those changes. This is most useful for UI and visual components.

- It allows developers to develop a fault-tolerant architecture by testing various approaches and observing their impact in real time on a small user base.

Shadow Deployment

When choosing the Shadow deployment strategy, any changes to an application are deployed in a parallel environment that mimics the production environment. The deployed changes are not visible to the end-users, hence the term “shadow.” This kind of hidden deployment makes it possible to observe the application's behavior and the impact of its changes without causing any service disruption to the live application.

The primary purpose of shadow deployment is to test real-world application behavior under load, identify performance bottlenecks, and ensure that the application changes don’t affect the user experience negatively. It allows developers to test changes in an environment that closely replicates the live production environment, thereby reducing the risk of unforeseen issues cropping up post-deployment.

Shadow deployments are a way of providing a safety net to developers and aid in ensuring a smooth transition when the changes are finally deployed to the live environment.
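One way to feed real traffic to a shadow version is request mirroring in a service mesh. The sketch below uses an Istio VirtualService and assumes Istio is installed, with `my-app-v1` serving users and `my-app-v2` as the shadow; both Service names are hypothetical.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: my-app
spec:
  hosts:
    - my-app
  http:
    - route:
        - destination:
            host: my-app-v1     # users only ever receive responses from this version
          weight: 100
      mirror:
        host: my-app-v2         # receives a copy of each request
      mirrorPercentage:
        value: 100.0            # mirror all requests; lower this to reduce load on the shadow
```

Responses from the mirrored destination are discarded, so users are not affected by errors in the shadow version. Take care that the shadow does not write to shared production data stores or call external systems with side effects.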

Use Cases

- Shadow deployments allow for testing in a production environment without impacting user experience or experiencing service degradation.

- It is also useful for finding and mitigating problems quickly, before the new version reaches the end users. This reduces the number of bugs that end users encounter.

Conclusion

There are several deployment strategies that can be used for rolling out application upgrades within Kubernetes. Out of the box, Kubernetes has the rolling update and recreate deployment strategies. While these are simple strategies, they are quite beneficial for smaller-scale applications and for testing or development environments.

As the scale of the application increases, some more advanced deployment strategies can be implemented for rolling out updates. The advanced deployment strategies consist of Canary, Blue/Green, A/B testing, and Shadow deployments. Rather than just rolling out the updated version, these advanced strategies are also useful for testing out different code changes in a production environment, without negatively impacting the user experience.

While implementing the simple strategies is quite easy, the more advanced strategies will require more setup, and you will have to familiarize yourself with multiple different tools. If you’d like to explore a solution for creating and testing various strategies, I urge you to give Devtron a spin.

Your thoughts are welcome - feel free to join our Discord Community if you wish to know more about Devtron or which strategy to choose for your organization. We'll always be there to assist you!