Deploying releases into production always gives goosebumps, no matter how many times we have done it. Different deployment strategies are advocated to ease some of that nervousness, and Canary Deployment is one of them. Since NGINX Ingress introduced canary-specific annotations, we can also achieve canary deployments using the ingress controller. In this blog, we will see how easily we can perform canary deployments in a few clicks without writing multiple YAML files. We will cover:

- What Canary Deployments are and their benefits

- NGINX Ingress Canary annotations

- Traditional approach to Canary release

- How to achieve Canary deployments using Devtron

Canary Deployments

Canary Deployments allow teams to expose new features to a selected percentage of user traffic instead of sending 100% of users to the new release in a single go. Teams can raise that percentage in phases, e.g., 5%, 15%, 75%, and then 100%. Because a canary release carries relatively little risk and needs fewer resources than a Blue-Green strategy, which runs a full copy of the environment alongside production, it is widely used for production releases.
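The phased exposure described above can be sketched as a simple control loop. This is a minimal Python illustration, not a Devtron or NGINX feature: `set_canary_weight` and `observe_error_rate` are hypothetical hooks you would wire to your own tooling (for example, updating a canary weight annotation and querying your metrics), and the 1% error-rate threshold is an arbitrary assumption.

```python
from typing import Callable, Sequence


def phased_rollout(
    set_canary_weight: Callable[[int], None],
    observe_error_rate: Callable[[int], float],
    phases: Sequence[int] = (5, 15, 75, 100),
    max_error_rate: float = 0.01,
) -> bool:
    """Step the canary weight through `phases`, rolling back on a bad phase.

    Returns True if every phase passed, False if the canary was rolled back.
    """
    for weight in phases:
        # Expose `weight`% of traffic to the canary (hypothetical hook).
        set_canary_weight(weight)
        # Read a health signal for the canary at this phase (hypothetical hook).
        error_rate = observe_error_rate(weight)
        if error_rate > max_error_rate:
            # Rollback: a weight of 0 sends all traffic back to stable.
            set_canary_weight(0)
            return False
    return True


if __name__ == "__main__":
    applied = []
    # Fake signal: the canary starts failing once it sees 75% of traffic.
    ok = phased_rollout(applied.append, lambda w: 0.05 if w >= 75 else 0.0)
    print(ok, applied)  # False [5, 15, 75, 0]
```

Rolling back here only means setting the weight to 0, which is why canary rollbacks tend to be quick: the stable release never stopped serving traffic.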

Some Benefits of Using Canary Deployments

- Allows real-time testing with live traffic for new releases

- Reduces the damage caused by the faulty release as it is exposed only to a portion of the traffic

- Easy and fast rollback

- Boosts developer confidence in experimenting with new features

- Faster incorporation of feedback

NGINX Ingress Canary Annotations

The NGINX Ingress controller is a production-grade ingress controller that routes north-south traffic (traffic entering the cluster from outside) to microservices according to Ingress routing rules, typically over HTTP/HTTPS. Newer releases of the controller support canary deployments through ingress annotations. The annotations we will use for the demonstration are:

- `nginx.ingress.kubernetes.io/canary: "true"`

For an Ingress to act as a canary, this annotation must be set to `"true"`.

- `nginx.ingress.kubernetes.io/canary-by-header: X-Canary`

This annotation names the request header that controls routing. If the header's value is `always`, the request is routed to the canary service; if it is `never`, the request is never routed to the canary. Any other value is ignored, and the request falls through to the remaining canary rules, such as the weight.

- `nginx.ingress.kubernetes.io/canary-weight: "50"`

Canary weight specifies the percentage of requests (0 to 100) routed to the canary service, with the rest going to the stable service. With a weight of 50, as in our case, incoming traffic is split roughly evenly between the canary and the stable service.

To learn about the other annotations supported by NGINX Ingress, refer to the Kubernetes ingress documentation.
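To see how these annotations interact, the sketch below models the routing decision in Python. It is a simplification, not ingress-nginx's actual implementation: it follows the documented precedence (canary-by-header is evaluated before canary-weight), omits the canary-by-cookie rule, and assumes the default weight total of 100. The function name `routes_to_canary` is hypothetical.

```python
import random
from typing import Mapping, Optional


def routes_to_canary(
    headers: Mapping[str, str],
    canary_weight: int,
    canary_header: str = "X-Canary",
    rng: Optional[random.Random] = None,
) -> bool:
    """Simplified model of how the canary annotations pick a backend."""
    # HTTP header names are case-insensitive, so normalize them first.
    normalized = {k.lower(): v for k, v in headers.items()}
    value = normalized.get(canary_header.lower())
    # canary-by-header is checked first: "always" and "never" win outright.
    if value == "always":
        return True
    if value == "never":
        return False
    # Any other value (or no header) falls through to canary-weight (0-100).
    rng = rng or random.Random()
    return rng.randrange(100) < canary_weight


print(routes_to_canary({"X-Canary": "always"}, canary_weight=0))   # True
print(routes_to_canary({"x-canary": "never"}, canary_weight=100))  # False
```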

Traditional Approach to Canary Release

Let’s assume you have already deployed your application in the respective environments with ingress objects. For the canary release, deploy the new version as a separate canary service and create a second Ingress object for it, with the same host and path as the stable Ingress, carrying the annotations mentioned above. Adjust labels, service.name, namespace, and ingressClassName to match your configuration before applying it.
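As a rough illustration of such a canary Ingress, the Python sketch below builds the manifest as a dictionary and prints it as JSON, which `kubectl apply -f -` also accepts because JSON is valid YAML. All names (`my-app-canary`, `demo`, `app.example.com`, port 80) are hypothetical placeholders, and the sketch assumes a stable, non-canary Ingress already routes the same host and path to the stable service.

```python
import json
from typing import Any, Dict


def canary_ingress(name: str, namespace: str, host: str, service: str,
                   port: int, weight: int,
                   ingress_class: str = "nginx") -> Dict[str, Any]:
    """Build a canary Ingress manifest (networking.k8s.io/v1) as a dict."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {
                "nginx.ingress.kubernetes.io/canary": "true",
                "nginx.ingress.kubernetes.io/canary-by-header": "X-Canary",
                # Annotation values must be strings, so the weight is quoted.
                "nginx.ingress.kubernetes.io/canary-weight": str(weight),
            },
        },
        "spec": {
            "ingressClassName": ingress_class,
            "rules": [{
                # Must match the host of the stable Ingress.
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service,
                                            "port": {"number": port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    manifest = canary_ingress("my-app-canary", "demo", "app.example.com",
                              "my-app-canary", 80, 50)
    print(json.dumps(manifest, indent=2))
```

For example, saving this as a hypothetical `make_canary_ingress.py` and running `python make_canary_ingress.py | kubectl apply -f -` would create the canary Ingress. Generating the manifest from code keeps the weight in one place, which helps when stepping it up in phases.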

Tada! We have successfully rolled out a canary release. At the end of the article, we will also see how to check which pod a request is routed to and experiment with the annotations.

Devtron’s Approach to Canary Release

Let us now set aside the traditional approach to Canary release using ingress controller annotations and jump straight to a much simpler process using Devtron.

Time to get our hands dirty.

Prerequisite: Follow the installation documentation to install Devtron on your Kubernetes cluster, and set up the Global Configuration before deploying an app.

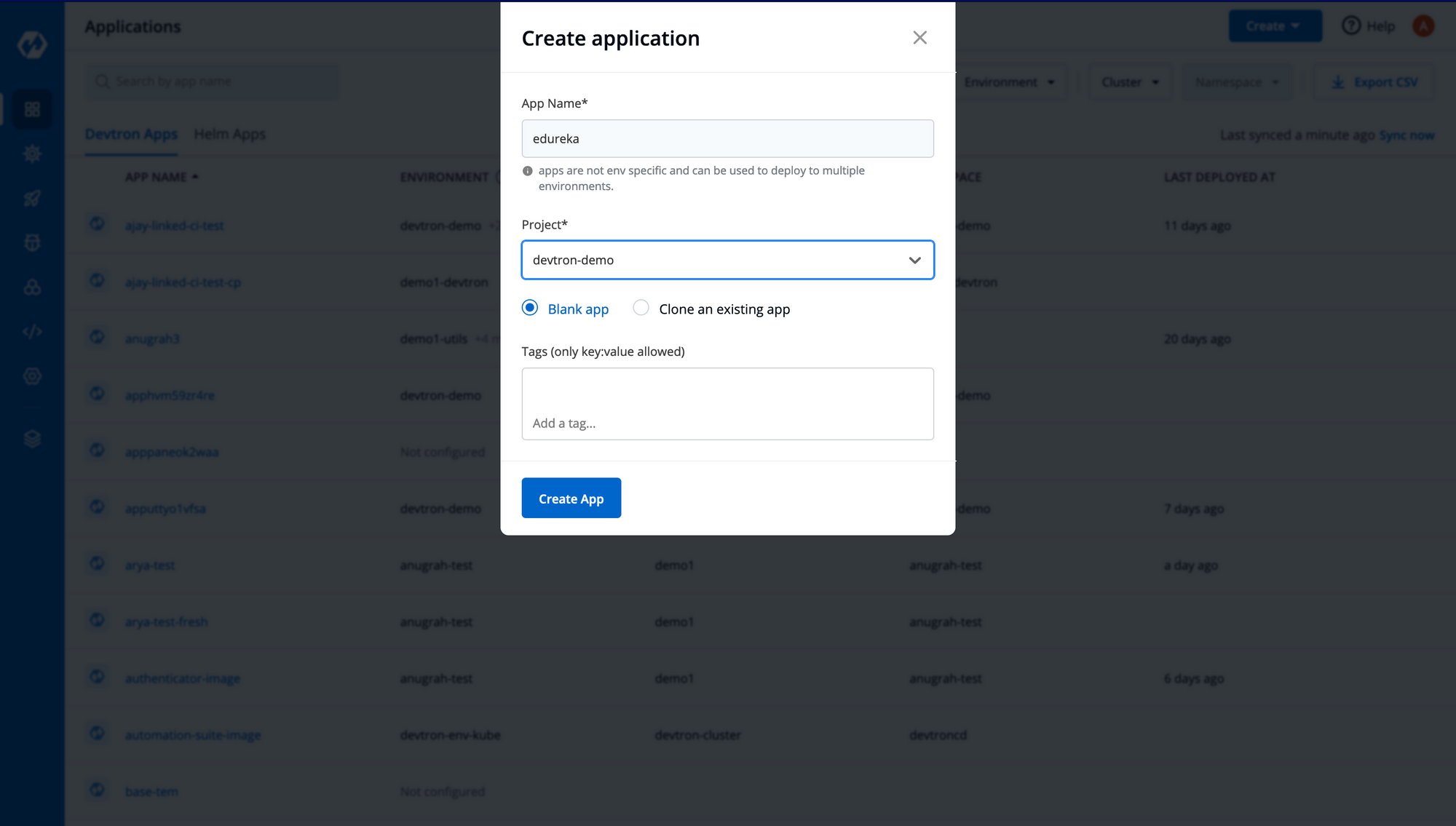

Step-1: Let’s create an application, which is straightforward in Devtron. On the dashboard, click Create New → Custom App, fill in the details as shown below, and click Create App.

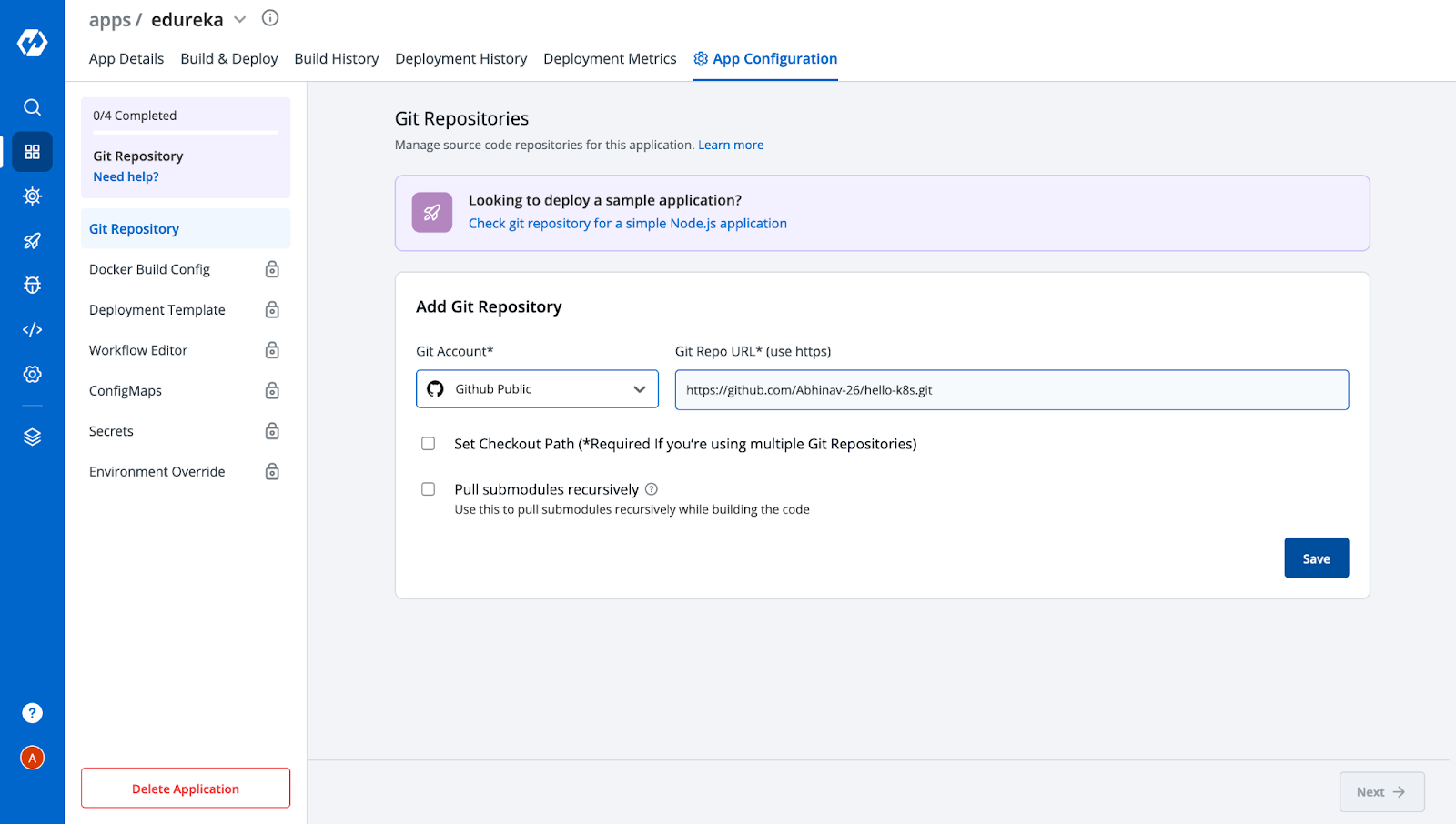

Step-2: We are now automatically redirected to the App Configuration tab, where we need to configure a few things, as shown below. The first is the Git Repository that contains your application.

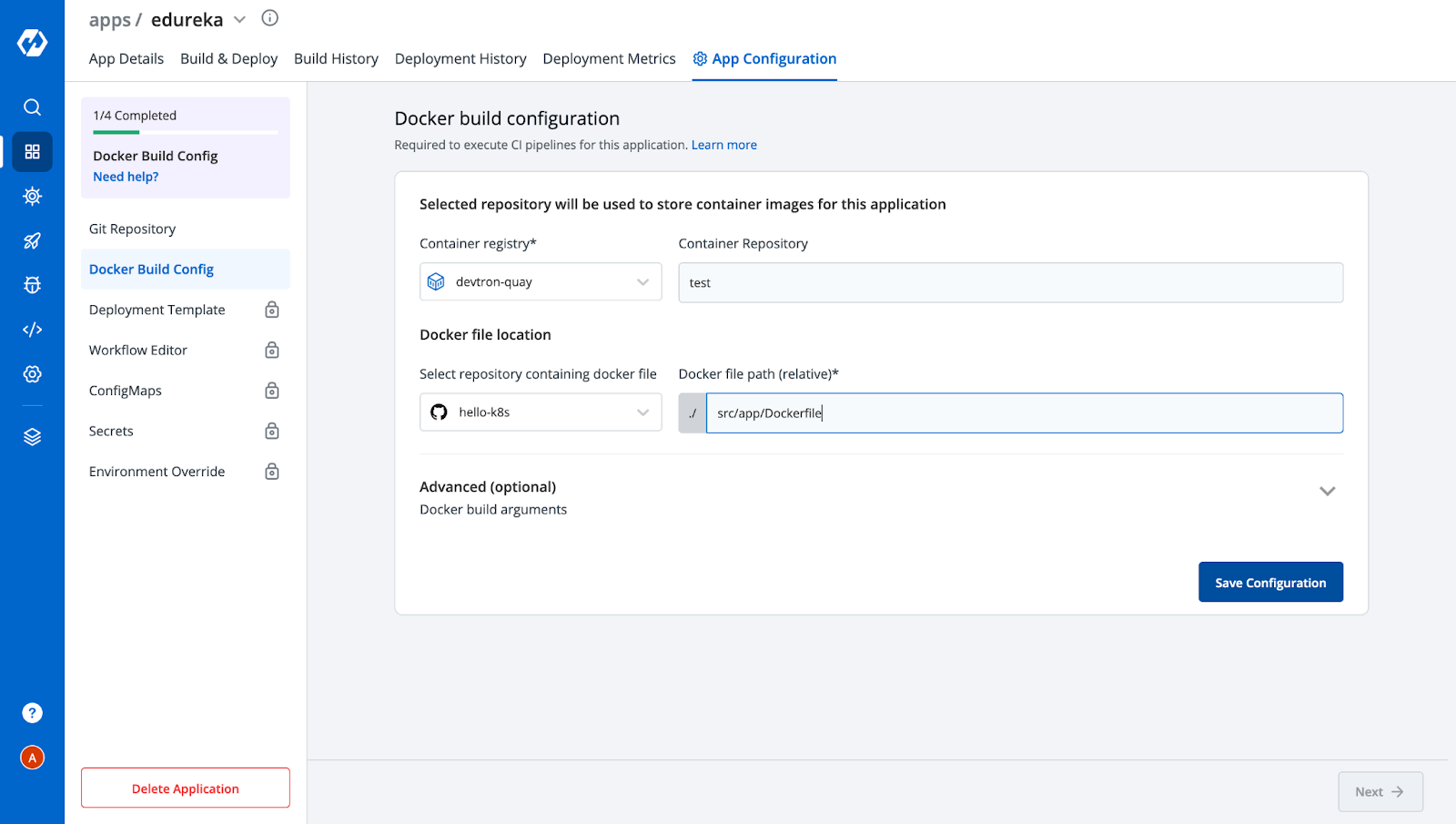

Step-3: Next, we need to provide a Docker Build Config to build an image of your application.

Step-4: This is where the magic happens. Devtron comes with a predefined Deployment Template that contains almost every configuration a typical microservice needs, from ingress to autoscaling, out of the box. Here, we provide the container port on which the application is accessible, enable ingress, and add the canary annotations discussed above. We can also configure other parameters, such as resources and autoscaling, as required.

[Note: An ingress controller (NGINX Ingress in our case) must already be deployed on your cluster before you deploy the Ingress.]

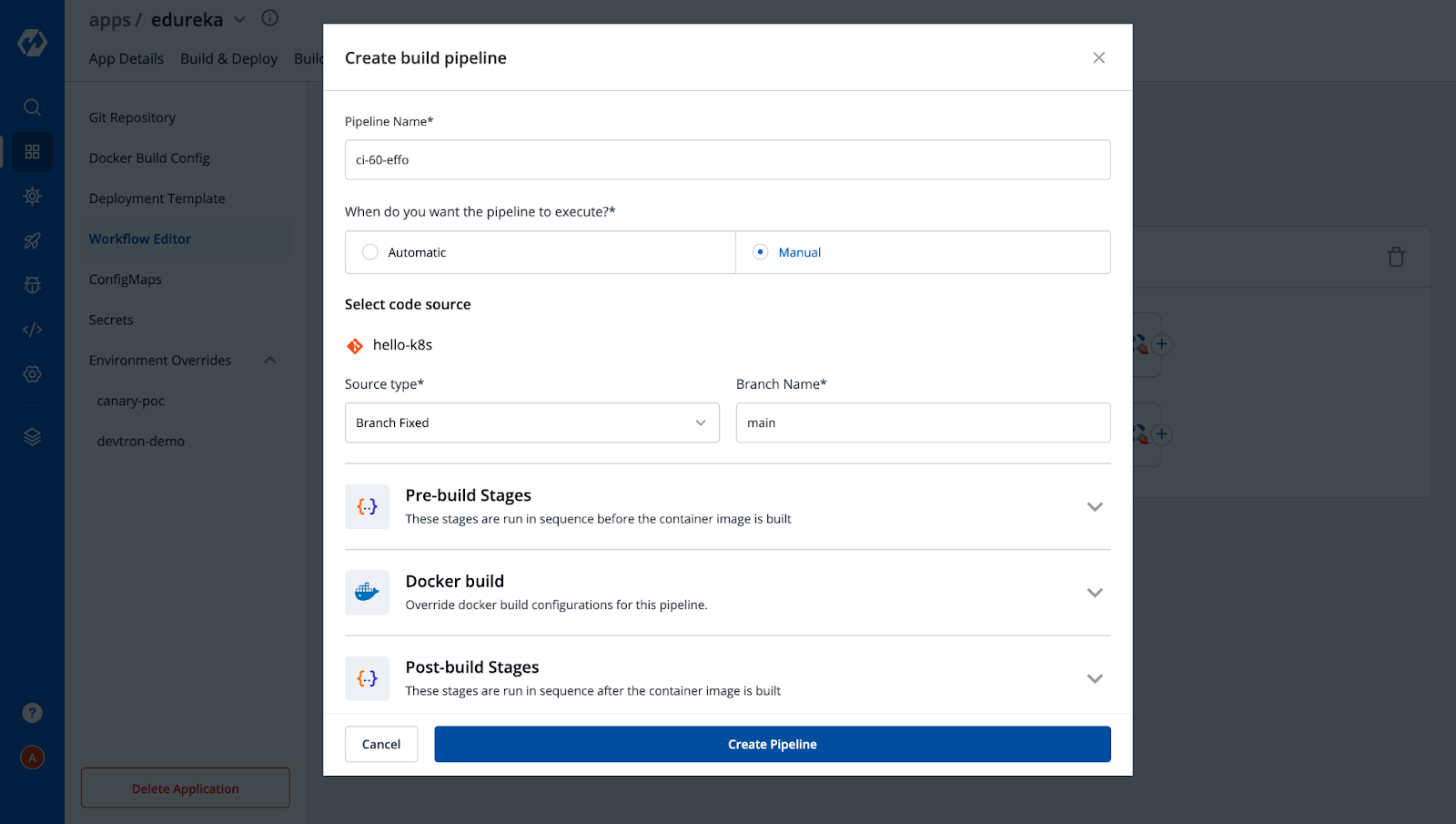
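Because these annotations are typed into the Deployment Template by hand, a small pre-check can catch common slips. The helper below is a hypothetical Python sketch, not part of Devtron or NGINX. It relies on the fact that Kubernetes stores annotations as a string-to-string map (so an unquoted `50` is a number, not a string) and assumes the default canary weight range of 0 to 100.

```python
from typing import List, Mapping

CANARY = "nginx.ingress.kubernetes.io/canary"
BY_HEADER = "nginx.ingress.kubernetes.io/canary-by-header"
WEIGHT = "nginx.ingress.kubernetes.io/canary-weight"


def check_canary_annotations(annotations: Mapping[str, object]) -> List[str]:
    """Return human-readable problems found in a set of canary annotations."""
    problems: List[str] = []
    # Kubernetes annotations are a string-to-string map, so numbers and
    # booleans must be quoted in the template.
    for key, value in annotations.items():
        if not isinstance(value, str):
            problems.append(f"{key}: {value!r} must be a quoted string")
    # The canary annotation is expected to carry the value "true".
    if str(annotations.get(CANARY)).lower() != "true":
        problems.append(f'{CANARY} must be "true"')
    weight = annotations.get(WEIGHT)
    if weight is not None:
        try:
            w = int(str(weight))
        except ValueError:
            problems.append(f"{WEIGHT}: {weight!r} is not an integer")
        else:
            # Assumes the default weight total of 100.
            if not 0 <= w <= 100:
                problems.append(f"{WEIGHT}: {w} is outside 0-100")
    header = annotations.get(BY_HEADER)
    if header is not None and not str(header).strip():
        problems.append(f"{BY_HEADER} must name a header")
    return problems
```

For instance, `check_canary_annotations({CANARY: "true", WEIGHT: 50})` reports the unquoted weight, while the same mapping with `"50"` passes.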

Step-5: With the application specifications configured, we can move to the Workflow Editor to create CI and CD pipelines. Click New Build Pipeline → Continuous Integration and provide the necessary build configs, as shown below.

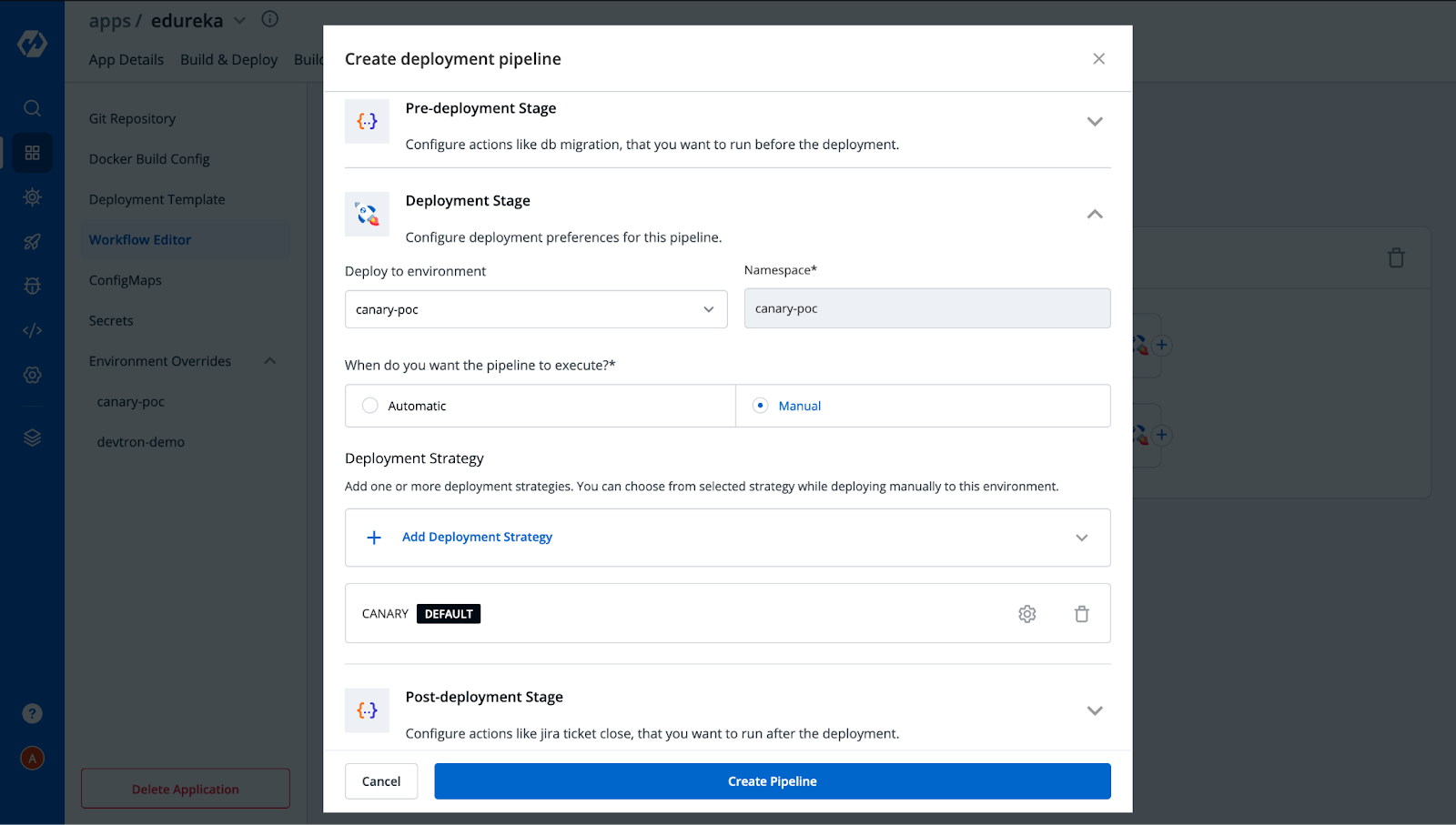

After creating the Build Pipeline, click the + button to Add Deployment Pipeline and provide the necessary configs for your requirements.

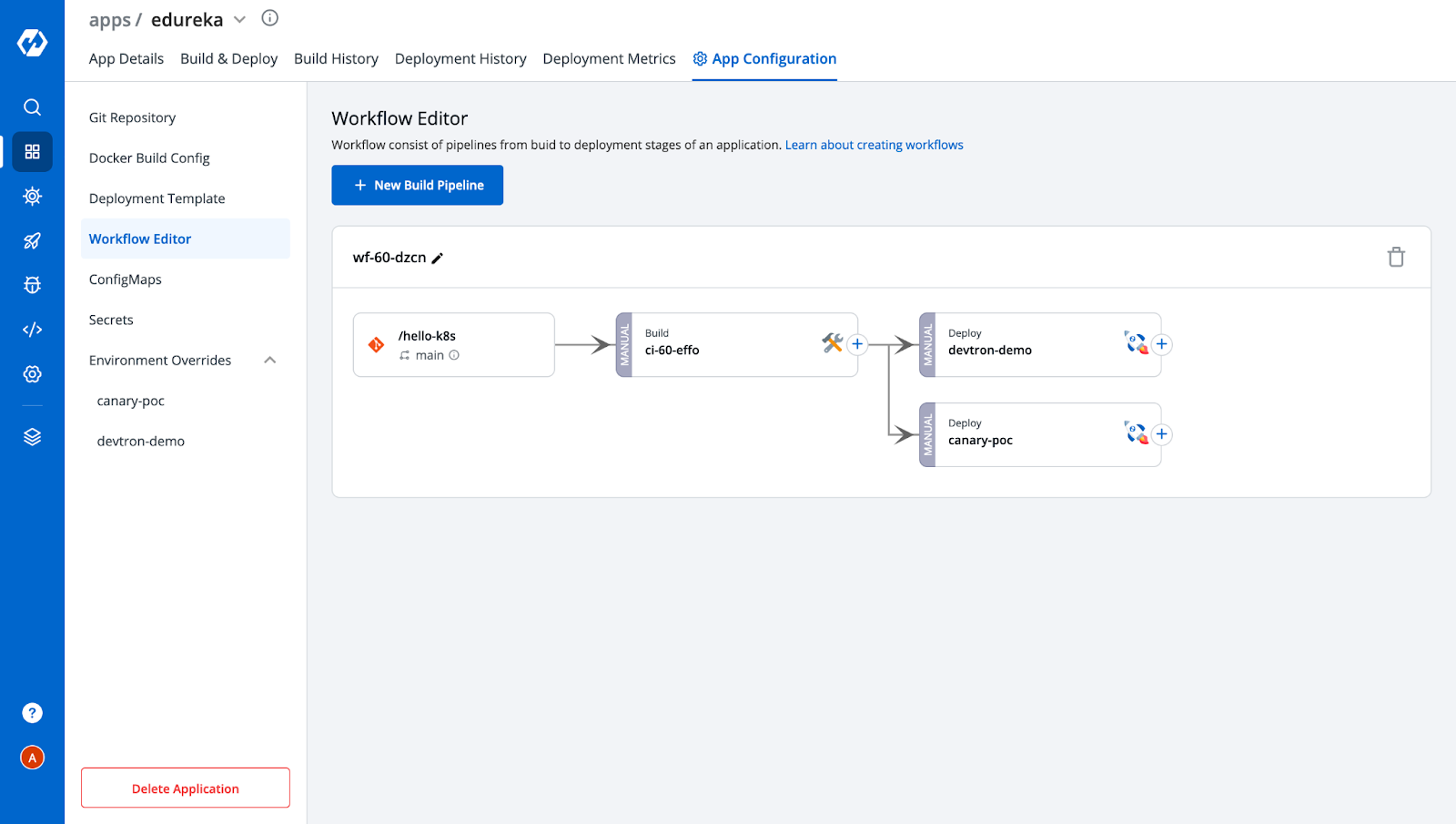

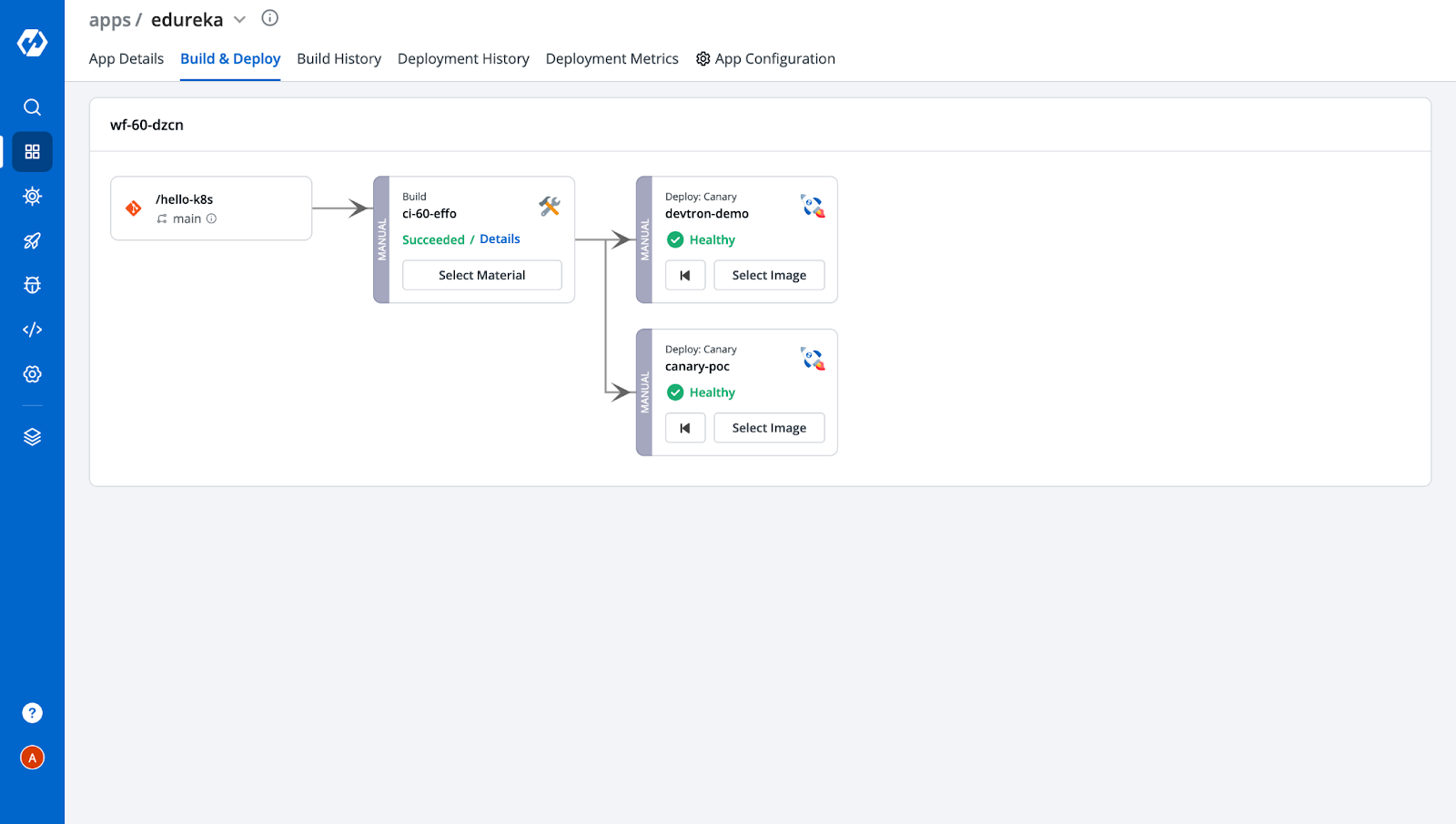

Similarly, you can create multiple deployment pipelines for a single build. In our case, we have created two deployment pipelines, one with Canary enabled and one without, so that we can verify which service serves incoming traffic. After adding the two pipelines, the Workflow Editor looks like this.

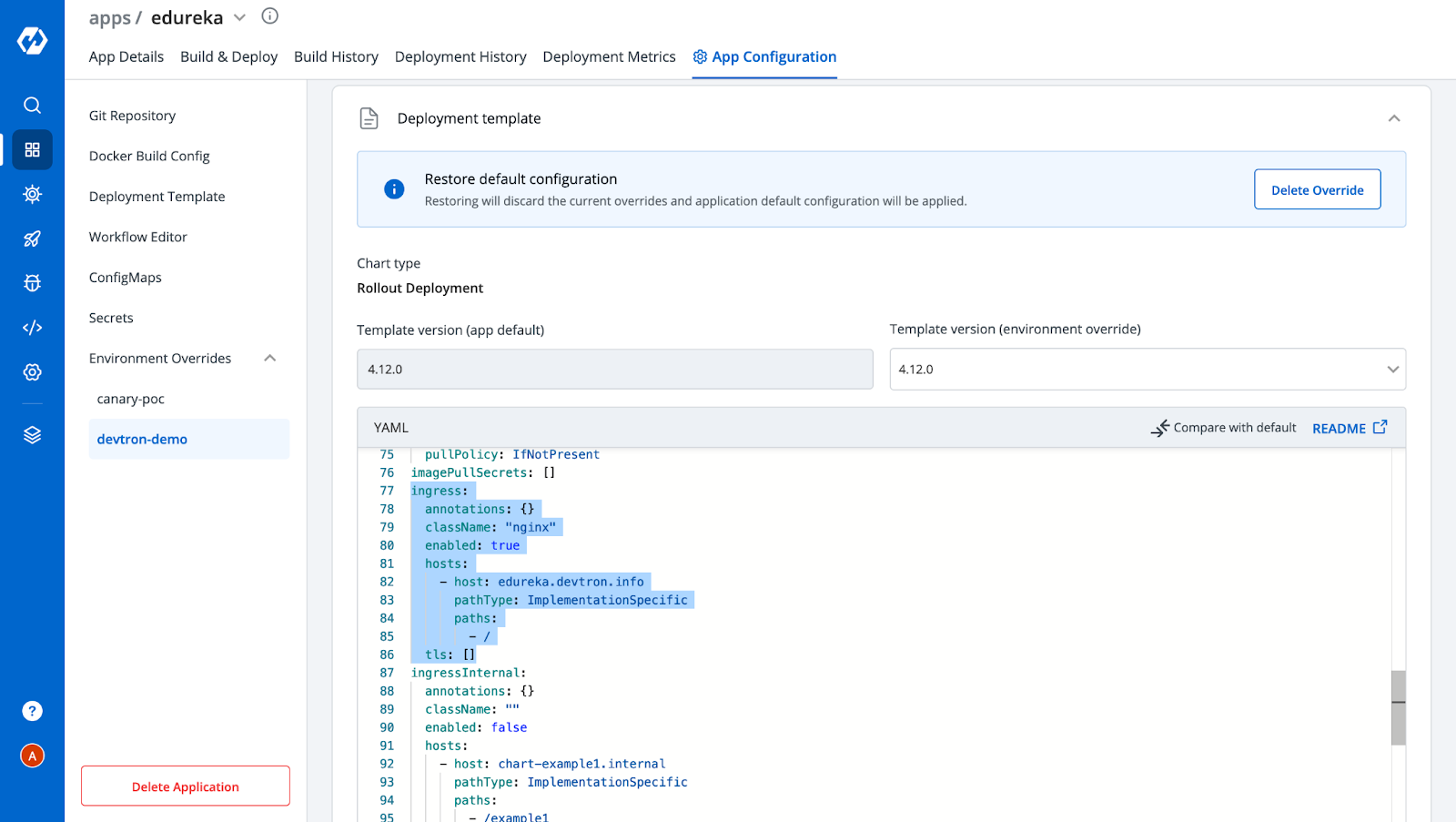

Notice in the image above that after we created the deployment pipelines, two environments appeared under Environment Overrides Config, one per deployment pipeline. Each holds the configuration saved for that deployment. Since we don’t want the canary Ingress in the other environment, devtron-demo, let's remove the canary annotations from it.

Step-6: Click devtron-demo to expand its Deployment Template configuration, then click Allow Override. You can now change the configuration and save it. In our case, we removed the NGINX canary annotations and saved the deployment template.

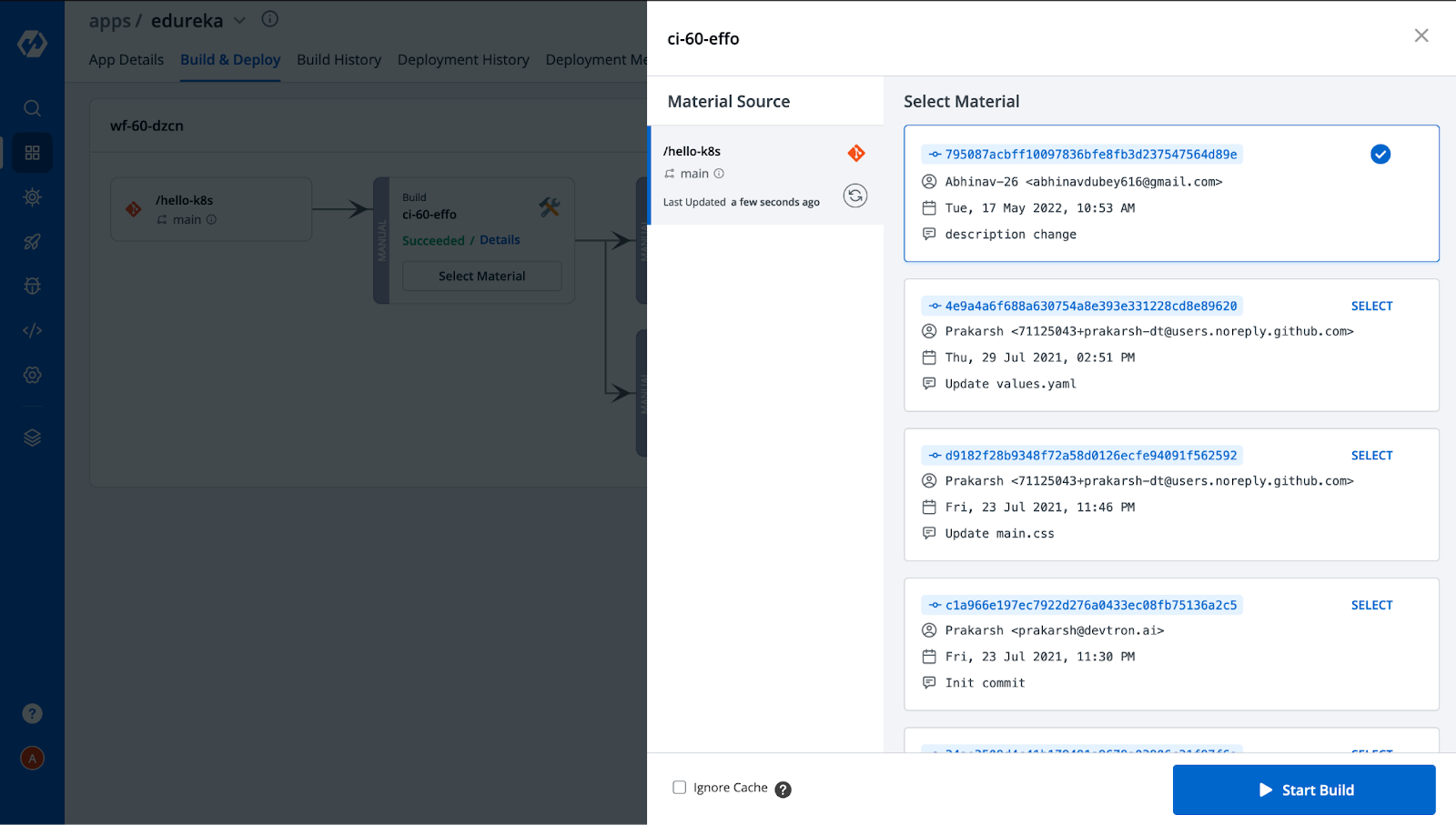
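Conceptually, the devtron-demo override is the canary-poc configuration minus the canary annotations. The Python sketch below expresses that difference; the `ingress.annotations` layout is an assumption about the template's structure, and in Devtron the override itself is done through the UI as described above, not with code like this.

```python
import copy
from typing import Any, Dict

CANARY_PREFIX = "nginx.ingress.kubernetes.io/canary"


def without_canary(values: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of deployment values with every canary annotation removed.

    Assumes (hypothetically) that annotations live under ingress.annotations.
    """
    # Deep-copy so the base (canary) configuration is left untouched.
    result = copy.deepcopy(values)
    annotations = result.get("ingress", {}).get("annotations", {})
    # The prefix matches canary, canary-weight, canary-by-header, and so on.
    for key in [k for k in annotations if k.startswith(CANARY_PREFIX)]:
        del annotations[key]
    return result
```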

Step-7: This completes the configuration. Now we need to build the image and deploy it to two different environments, i.e., canary-poc and devtron-demo. Go to the Build & Deploy tab → click Select Material → select the commit for which you want to build the image, and then click Start Build.

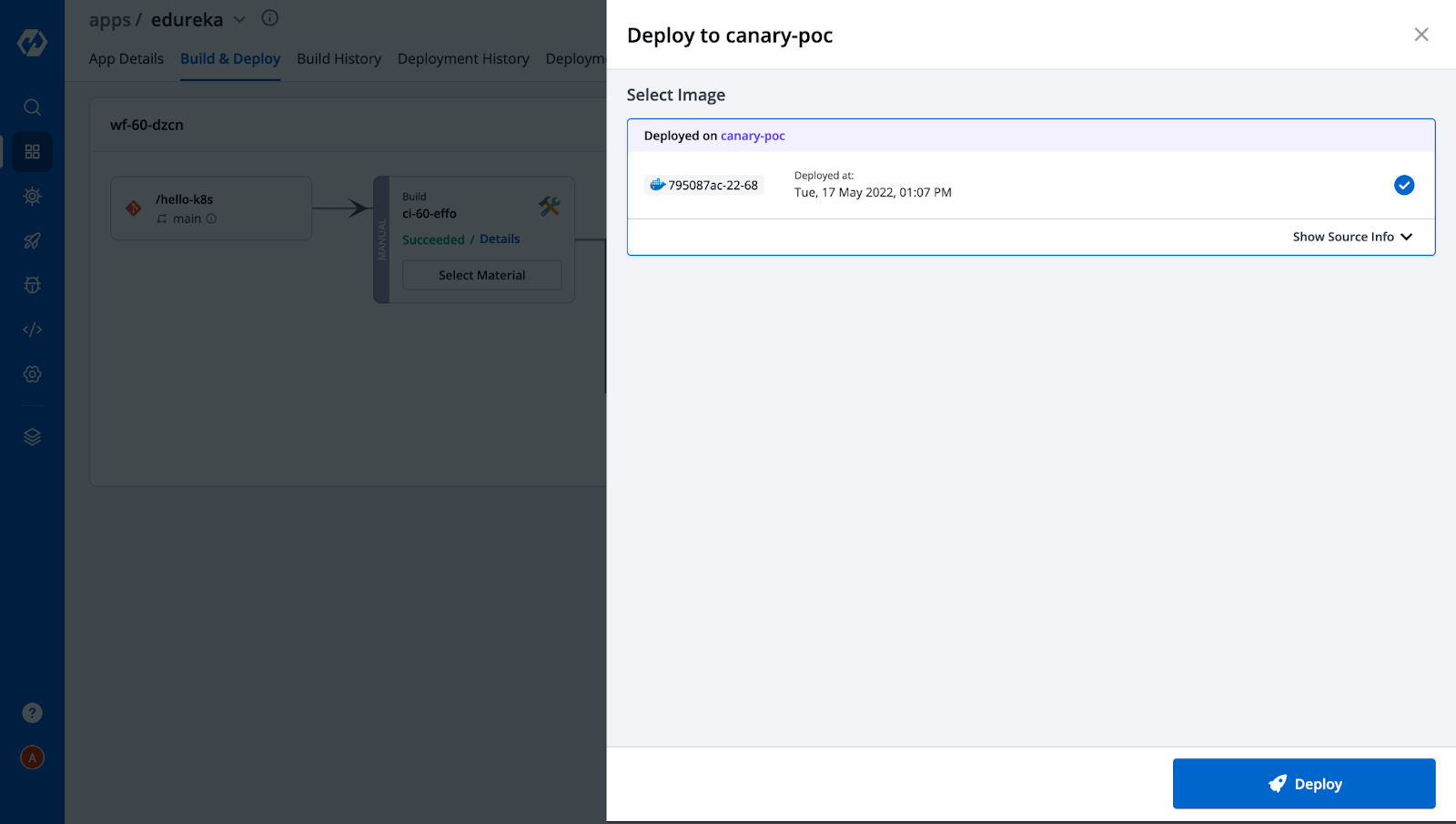

Step-8: After the image is built, we need to deploy it through both pipelines. Click Select Image → Deploy.

After the deployment succeeds in both environments, the Build & Deploy tab shows the status of both deployments.

Step-9: The application is now deployed successfully. To learn more about its status, open the App Details tab for a detailed dashboard with metrics and all the workloads created from the configuration we provided in the Deployment Template.

As the snippet below shows, Devtron created all the Kubernetes objects without us writing a single line of YAML. You can also navigate between environments and check the configuration applied to each of them in a few clicks.

Deployment is a breeze with Devtron!

App details of Canary deployments

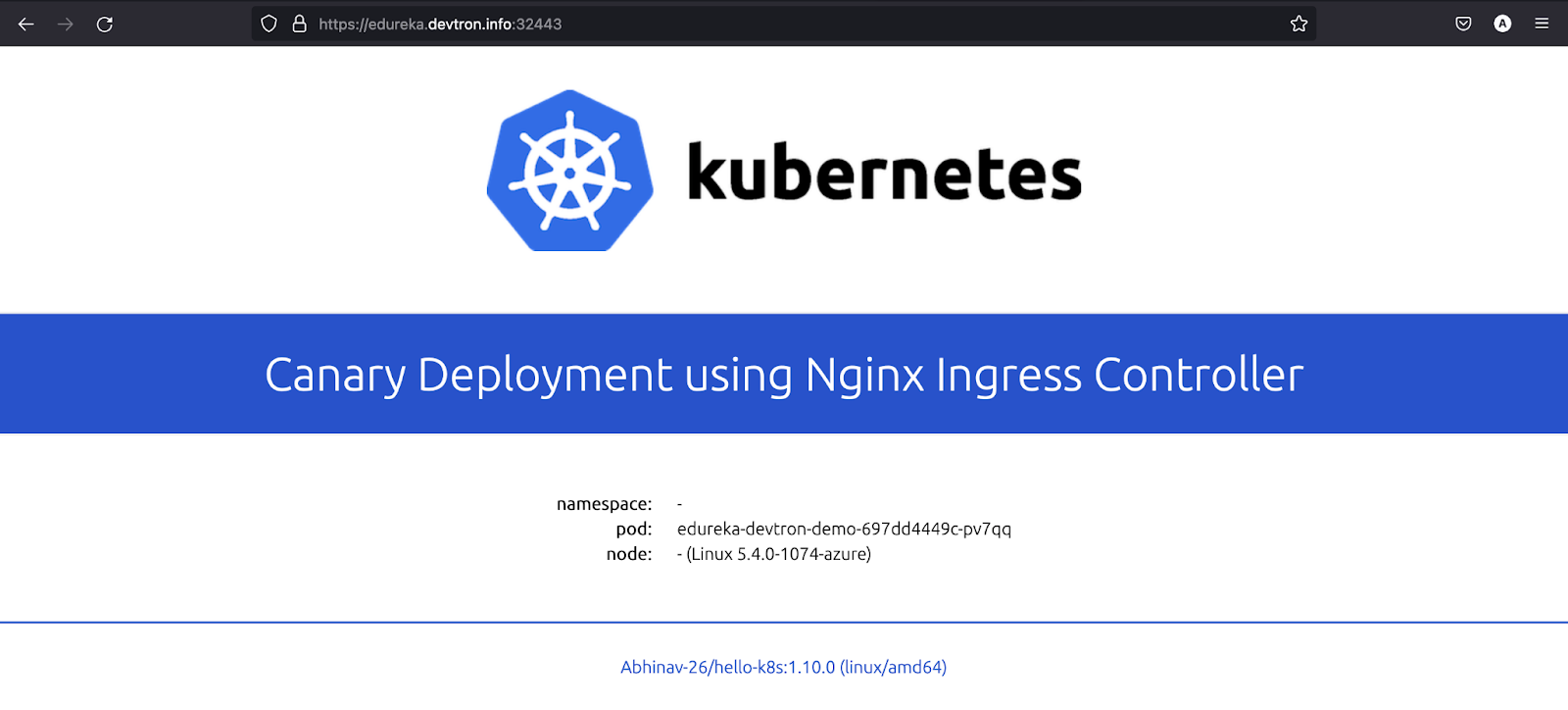

Step-10: Now let’s access our application through the ingress host we configured.

[Note: If you are using a NodePort service, map the node's IP to the ingress host (for example, through DNS or an /etc/hosts entry); with a LoadBalancer, map the load balancer's address instead. In our case, we are using NodePort.]

The application displays the name of the pod serving the request. Here, the pod is edureka-canary-poc-59688fff4f-jmx64, because the request was routed to the canary deployment. After refreshing the page once or twice, the pod name changes to edureka-devtron-demo-697dd4449c-pv7qq (as shown in the image below), since that request was routed to the stable release.

This works because of the ingress annotations we set: with canary-weight at 50, incoming requests are split roughly evenly between the canary and stable pods.

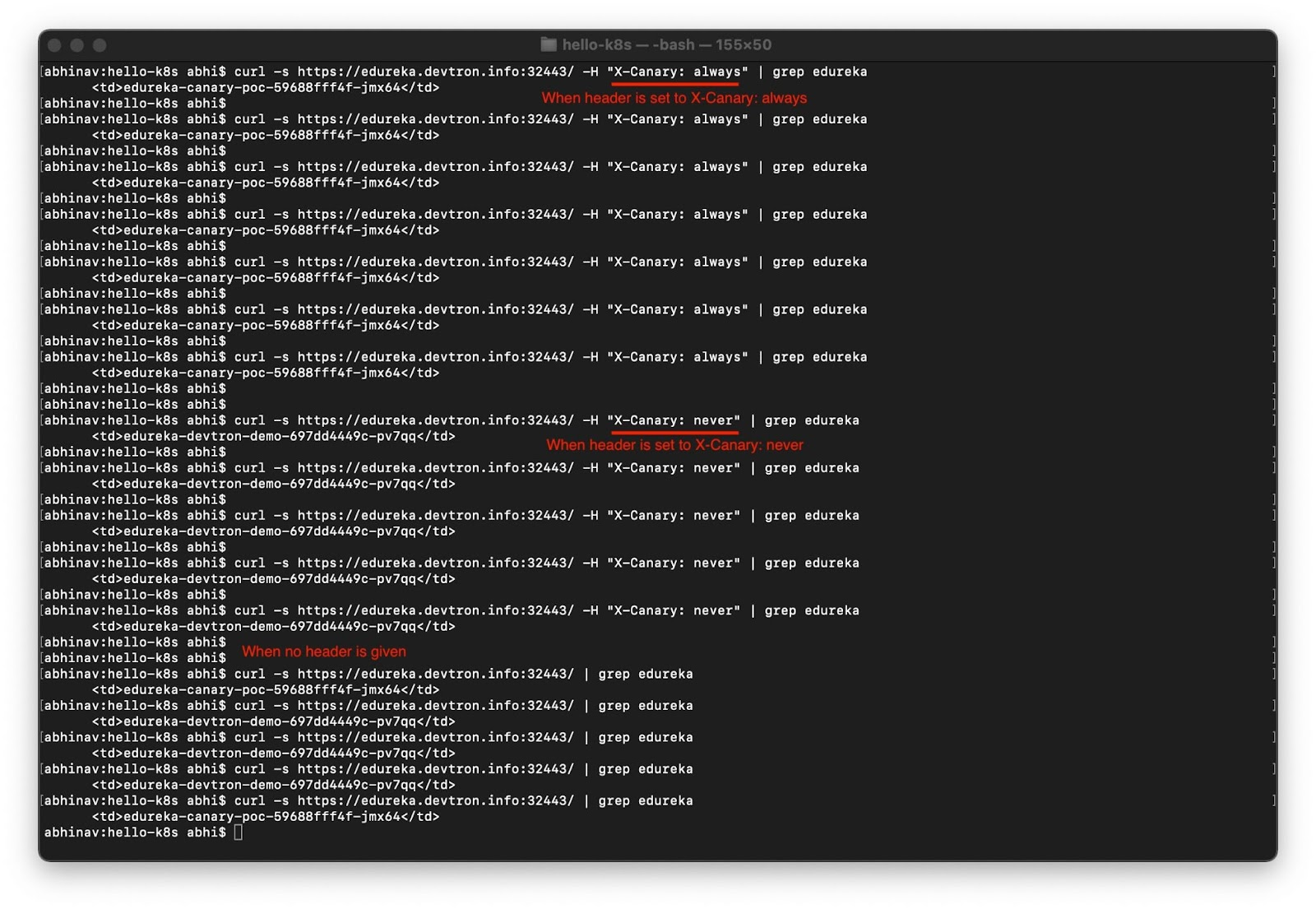
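Why a refresh or two is usually enough to see the stable pod follows from a little arithmetic. If each request independently goes to the canary with probability w/100, the chance that n consecutive requests all hit the canary is (w/100)^n, so the chance of reaching the stable pod at least once is 1 - (w/100)^n. A minimal Python sketch, assuming no session affinity so that every request is routed independently:

```python
def prob_stable_within(refreshes: int, canary_weight: int) -> float:
    """Probability that at least one of `refreshes` requests reaches the stable
    release, assuming each request is routed independently by canary-weight."""
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    p_canary = canary_weight / 100
    # The only way to never reach stable is for every request to hit the canary.
    return 1 - p_canary ** refreshes


for n in (1, 2, 5):
    print(n, prob_stable_within(n, 50))  # 1 0.5, then 2 0.75, then 5 0.96875
```

At a weight of 50, two refreshes give a 75% chance of seeing the stable pod at least once.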

If you want to send all requests to the canary, or all of them to the stable service, you can do so by passing the header in the request. In our test, when we passed the header X-Canary: always, all requests were routed to the canary deployment, and when we passed X-Canary: never, none of them were sent to the canary. Finally, without the header, requests were distributed between the canary and stable releases according to the canary weight.
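To reproduce this header experiment from a script rather than a browser, here is a minimal Python sketch using only the standard library. It assumes, as in the demo, that the page body contains the serving pod's name; the regular expression is tailored to the pod names shown earlier and would need adjusting for other apps, and the URL in the usage note is a hypothetical placeholder.

```python
import re
import urllib.request
from collections import Counter
from typing import Callable, Dict, Optional

# Tailored to pod names like "edureka-canary-poc-59688fff4f-jmx64".
POD_RE = re.compile(r"edureka-(canary-poc|devtron-demo)-[a-z0-9]+-[a-z0-9]+")


def fetch(url: str, headers: Dict[str, str]) -> str:
    """GET `url` with the given headers and return the response body as text."""
    req = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.read().decode("utf-8", errors="replace")


def tally(url: str, n: int, headers: Optional[Dict[str, str]] = None,
          get: Callable[[str, Dict[str, str]], str] = fetch) -> Counter:
    """Send `n` requests and count which environment served each one."""
    counts: Counter = Counter()
    for _ in range(n):
        match = POD_RE.search(get(url, headers or {}))
        # Group 1 is the environment part of the pod name.
        counts[match.group(1) if match else "unknown"] += 1
    return counts
```

For example, `tally("http://your-ingress-host/", 20, {"X-Canary": "always"})` would be expected to count only `canary-poc`, `{"X-Canary": "never"}` only `devtron-demo`, and no header a roughly even split at a weight of 50. The `get` parameter lets you swap in a fake fetcher when testing the counting logic offline.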

Kudos! As you can see, Devtron makes configuring and implementing canary deployments easy.

Try Devtron across multiple environments to tailor it to your requirements. Feel free to explore Devtron and join the Discord community to get answers to all your Kubernetes/Devtron questions. Check out the GitHub repo and give it a star if you like it.

FAQ

What is a Canary Deployment in Kubernetes?

Canary Deployment lets you release new app versions to a small set of users before full rollout, helping reduce risk.

- Routes a small % of traffic (e.g., 5%, 25%, 50%) to the new version.

- Enables real-time testing in production.

- Supports fast rollback and safer deployments.

- Boosts developer confidence with early feedback.

How does NGINX Ingress support Canary Deployments?

NGINX Ingress uses annotations to control traffic routing for canary releases.

- canary: "true" enables canary behavior.

- canary-weight: "50" splits traffic between stable and canary.

- canary-by-header: X-Canary routes traffic based on request headers.

How does Devtron simplify Canary Deployments?

Devtron provides a no-code way to configure and manage canary releases using its UI.

- Pre-configured deployment templates with ingress/canary support.

- Visual CI/CD pipelines for setup and rollout.

- Environment overrides without writing YAML.

What are the benefits of using Devtron for Canary Releases?

Devtron makes canary deployments easier, faster, and more reliable.

- No YAML needed: configure everything from the dashboard.

- See which pods are serving traffic in real time.

- Quick rollback and header-based traffic control.

- Supports multi-environment testing and overrides.