Kubernetes has emerged as one of the most essential tools for deploying microservices in many organizations. Its popularity keeps rising because it orchestrates containerized applications effectively, but the associated costs can quickly spiral out of control! Many organizations overlook simple cost-saving strategies and end up with hefty cloud bills.

Effectively managing Kubernetes costs can be challenging, with expenses rapidly escalating due to factors like over-provisioning and inefficient resource utilization. Devtron simplifies cost optimization by providing comprehensive insights into resource consumption, automating scaling operations, and implementing best practices for efficient Kubernetes management.

In this blog, we will identify the key factors that drive up costs, discuss the concept of cost optimization in Kubernetes, and outline various strategies to reduce these expenses without sacrificing performance.

Key Factors Contributing to Kubernetes Costs

When running a Kubernetes cluster, no single source accounts for the majority of the costs. Instead, several smaller factors add up, and they fall into three major categories: Compute, Network, and Storage.

Compute Costs:

In Kubernetes, we need compute resources to run both the control plane (master nodes) and the worker nodes. Worker nodes are virtual machines provided by the cloud provider. Each machine is billed according to its instance type, which is largely determined by its CPU and memory capacity. On managed services such as EKS, GKE, and AKS, the provider runs the control plane for you and may charge a per-cluster management fee instead. So the more nodes you deploy and the higher their resource specifications, the larger the compute costs.

Network Costs:

In Kubernetes, we also need networking resources for activities such as data transfer, egress (data flowing out of the cloud), ingress (data flowing into the cloud), load balancing, and other networking services.

This movement of data, together with services such as load balancers, makes up the overall network costs. Inbound data is usually free on the major clouds. Outbound data, cross-zone or cross-region traffic, and load balancers are billed. So the more data that flows out of the cloud or between zones and regions, the higher the network costs.

Storage Costs:

Storage costs in Kubernetes come mainly from the data stored by containerized applications. This storage falls into two categories: persistent storage (for example, for databases) and ephemeral storage (for temporary files). Neither is free, since providers charge for the data stored. Persistent storage tends to be more expensive than ephemeral storage, and its cost depends on the type and size of the volumes used.

What is cost optimization in Kubernetes?

We have explored the various factors that add up to higher bills when running a Kubernetes cluster for containerized applications. These costs can be managed and significantly reduced by knowing which cloud services we use and understanding our actual requirements. In practice, this means analyzing the current setup, identifying areas for improvement, and making changes that improve both performance and cost. Cost optimization in Kubernetes, then, means making the Kubernetes infrastructure and its resources as cost-effective as possible, preventing unnecessary expenses and ensuring that resources are used efficiently.

Key Strategies for Kubernetes Cost Optimization

So far, we have discussed the factors that contribute to rising Kubernetes costs and the concept of Kubernetes cost optimization. Now it’s time to discuss the key strategies that can help us reduce these expenses effectively:

Tracking Kubernetes Expenses:

The first step in reducing expenses is to track which Kubernetes resources are being consumed and what they cost. This shows which resources drive the most cost, so we know where optimization will have the biggest impact.

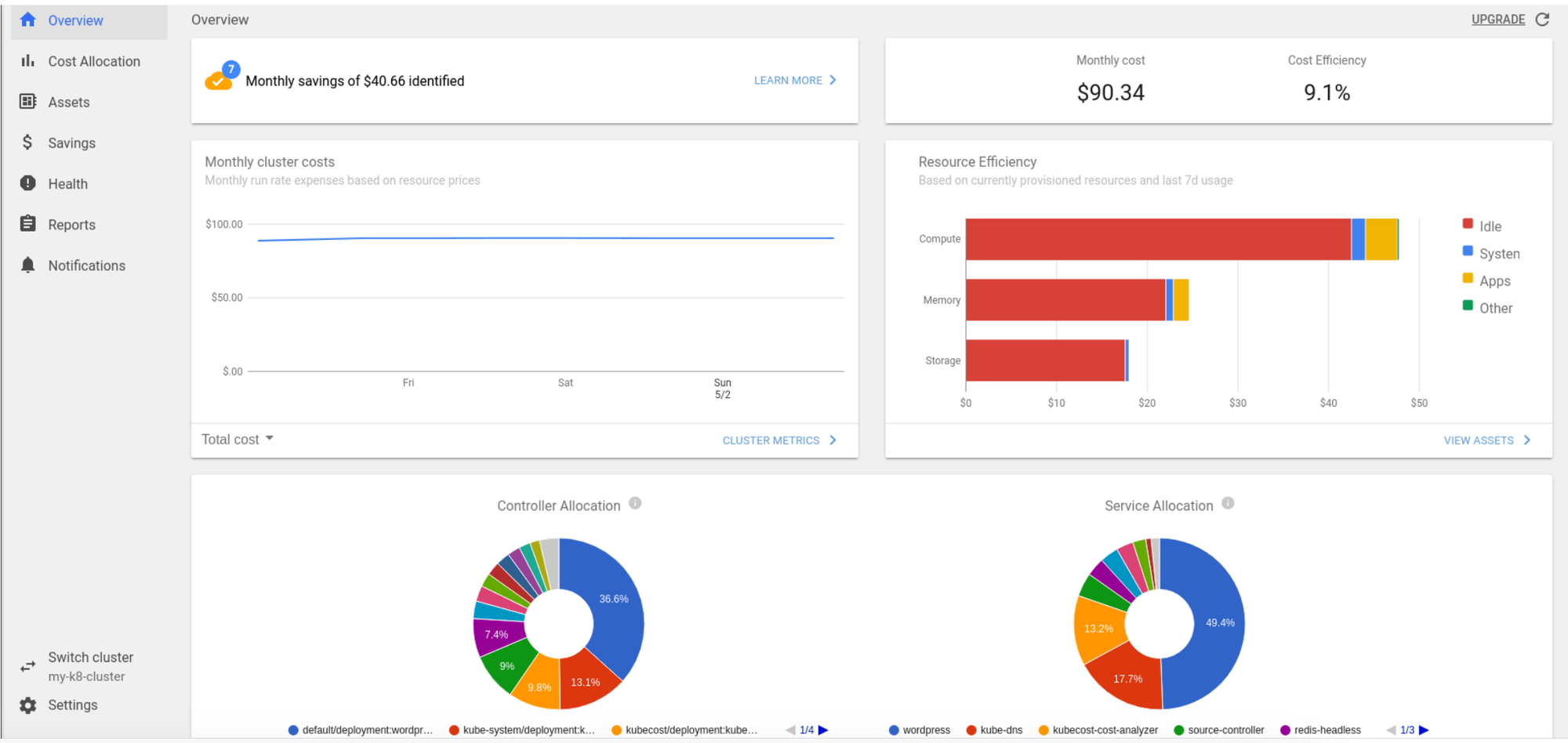

Cloud providers offer basic cost dashboards: AWS Cost Explorer, GCP Cost Management, and Azure Cost Management + Billing. They also offer budget alerts that notify us when spending crosses a threshold we set. However, these tools give only a high-level view. They largely report costs per cloud resource, such as instances and volumes, rather than per Kubernetes namespace or workload. For in-depth analysis, we can use tools like Prometheus, Grafana, and Kubecost to get a more detailed understanding of our Kubernetes usage and costs.
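As a starting point for the Prometheus route, the rules file below precomputes CPU and memory usage per namespace, which can then be graphed in Grafana. It is a minimal sketch that assumes Prometheus already scrapes the kubelet's cAdvisor metrics (`container_cpu_usage_seconds_total` and `container_memory_working_set_bytes`). The group and rule names are hypothetical.

```yaml
# Prometheus recording rules (plain rules-file format).
# Assumes cAdvisor metrics from the kubelet are already being scraped.
groups:
  - name: namespace-usage   # hypothetical group name
    rules:
      # CPU cores used per namespace, averaged over 5 minutes.
      # container!="" drops the pod-level aggregate series; container!="POD"
      # drops pause containers where the runtime reports them.
      - record: namespace:container_cpu_usage_cores:sum_rate5m
        expr: sum by (namespace) (rate(container_cpu_usage_seconds_total{container!="", container!="POD"}[5m]))
      # Working-set memory in bytes per namespace.
      - record: namespace:container_memory_working_set_bytes:sum
        expr: sum by (namespace) (container_memory_working_set_bytes{container!="", container!="POD"})
```

You can compare these series with the requests declared in pod specs, for example via kube-state-metrics' `kube_pod_container_resource_requests`. A large gap between the two points to over-provisioned namespaces.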

Set Resource Limits:

After tracking costs and identifying the workloads driving them up, we can use Kubernetes resource requests and limits to control how much CPU and memory each container gets. Namespace-level quotas can then cap what each team or user consumes. Together, these ensure that no single application or user consumes excessive computing resources.

Resource limits are essential in an organization because they enforce fair sharing of cluster resources. Without them, one user could consume all available resources, leaving other users unable to work and forcing the cluster to grow to absorb the demand. Requests tell the scheduler how much CPU and memory to reserve for a container, while limits cap what it can actually use. A container that exceeds its memory limit is terminated, and one that exceeds its CPU limit is throttled. By enforcing limits, we avoid resource exhaustion and reduce overall costs.

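```yaml
apiVersion: v1
kind: Pod
metadata:
  name: frontend
spec:
  containers:
    - name: app
      image: images.my-company.example/app:v4
      resources:
        # Requests: what the scheduler reserves for this container on a node.
        requests:
          memory: "64Mi"
          cpu: "250m"    # 250 millicores = a quarter of one CPU core
        # Limits: the most the container may use. Exceeding the memory limit
        # gets the container killed; CPU use above the limit is throttled.
        limits:
          memory: "128Mi"
          cpu: "500m"
```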
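Setting requests and limits on every pod by hand is easy to forget. A LimitRange fills in defaults for containers in a namespace that omit them, and rejects containers that ask for more than a maximum. The sketch below reuses the values from the pod above and assumes a hypothetical namespace called `team-a`. The maximums are illustrative.

```yaml
# Default requests/limits for containers in team-a that declare none,
# plus an upper bound no single container may exceed.
apiVersion: v1
kind: LimitRange
metadata:
  name: default-container-resources
  namespace: team-a          # hypothetical namespace
spec:
  limits:
    - type: Container
      defaultRequest:        # applied when a container sets no requests
        cpu: "250m"
        memory: "64Mi"
      default:               # applied when a container sets no limits
        cpu: "500m"
        memory: "128Mi"
      max:                   # illustrative cap per container
        cpu: "1"
        memory: "512Mi"
```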

Shared Kubernetes Cluster:

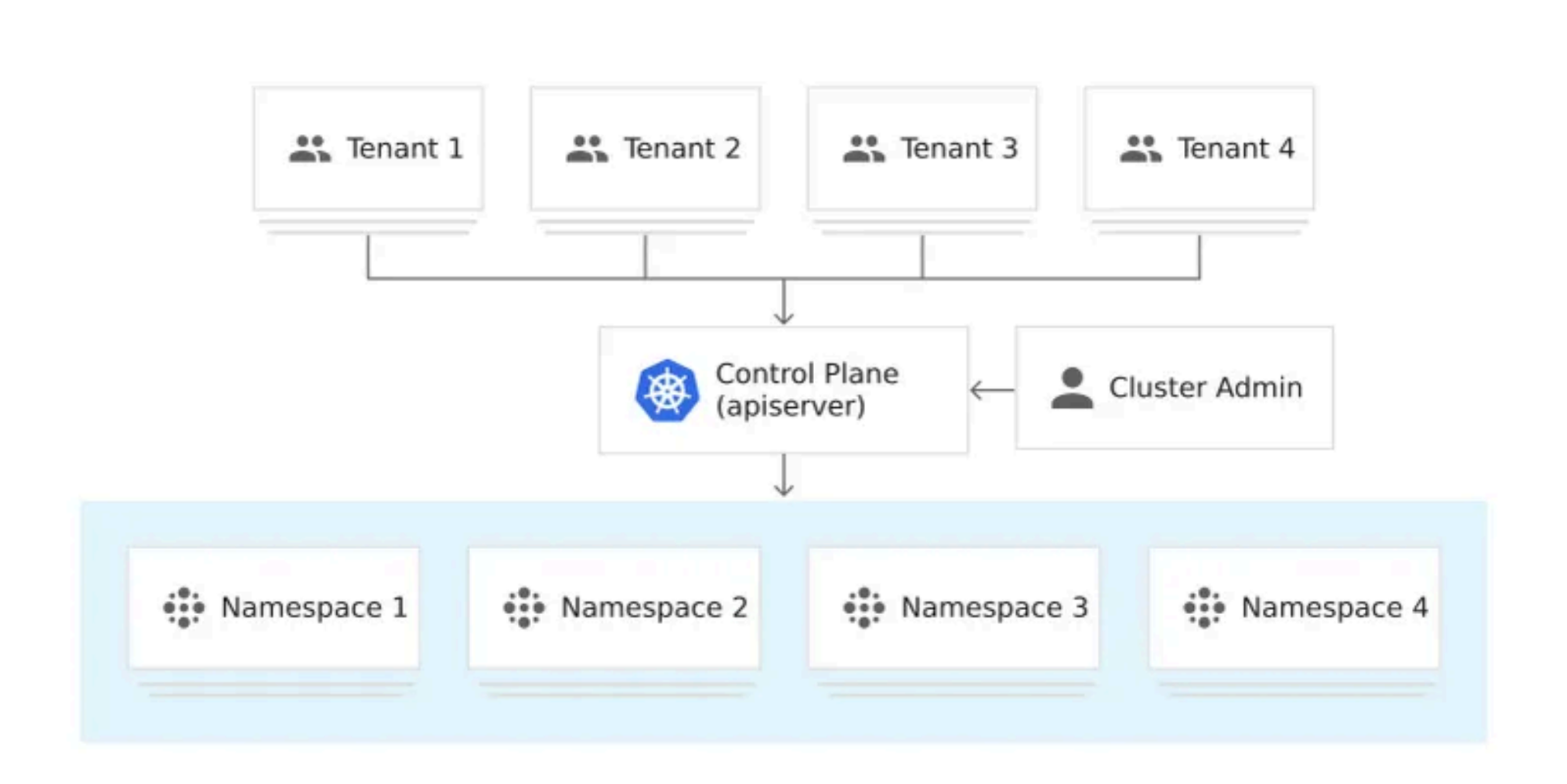

A very effective cost-saving strategy is to reduce the number of Kubernetes clusters. Sharing clusters across multiple projects or teams, a practice known as multi-tenancy, can drastically reduce costs. It works because several users and applications share the same control plane and worker nodes, so you pay for one set of control-plane and baseline node capacity instead of one per team. This approach also simplifies management, since administrators no longer have to oversee numerous clusters.
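A common way to keep tenants from starving each other on a shared cluster is to give each team its own namespace with a ResourceQuota. The sketch below assumes one namespace per team. The namespace name and quota values are hypothetical and should be sized from the usage data gathered earlier.

```yaml
# One namespace per team on the shared cluster (name is hypothetical).
apiVersion: v1
kind: Namespace
metadata:
  name: team-a
---
# Caps the total resources that all pods in team-a can request and use,
# so one tenant cannot take over the shared nodes.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-a-quota
  namespace: team-a
spec:
  hard:
    requests.cpu: "4"
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
    persistentvolumeclaims: "10"   # also caps how many volumes the team can claim
```

Once a quota covers CPU and memory, Kubernetes rejects pods in that namespace that do not specify requests and limits. Pairing the quota with a LimitRange that supplies defaults avoids surprising deployment failures.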

Budget-Friendly Compute Resources:

Another effective strategy is to use compute resources offered at discounted prices. Major cloud providers offer substantial discounts when we commit to using their resources for a fixed term, typically 1 or 3 years. Depending on the provider, term, and instance type, these discounts range from about 40% up to 72%. They are called “Reserved Instances” in AWS and Azure and “Committed Use Discounts” in GCP. These options are ideal when we have predictable, continuous resource needs.
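To see what the ends of that range mean in practice, take a hypothetical instance priced at $0.10 per hour On-Demand. This is an illustrative number, not a quoted price. Over one year the instance runs 365 × 24 = 8,760 hours:

```latex
\begin{aligned}
C_{\text{on-demand}} &= 0.10 \times 8760 = \$876 \text{ per year} \\
C_{40\%} &= 876 \times (1 - 0.40) = \$525.60 \text{ per year} \\
C_{72\%} &= 876 \times (1 - 0.72) = \$245.28 \text{ per year}
\end{aligned}
```

The saving only materializes if the capacity is actually used, because the commitment is billed for the whole term whether or not workloads run on it.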

Comparison of Committed Use Resources Across Major Cloud Providers:

Clean Up Unused Resources:

Another cost-saving strategy is to regularly clean up unused resources such as workloads, images, namespaces, logs, and persistent volumes. Over time, these idle resources accumulate and lead to unnecessary costs, especially in dynamic environments. Automated cleanup policies, such as pruning unused container images and terminating stale workloads, help prevent wasteful spending.
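For images specifically, the kubelet already garbage-collects unused images on each node. Its thresholds can be tuned through the kubelet configuration file. The fragment below is a sketch with illustrative values; the defaults are 85% and 80%. How the file is delivered depends on your platform (for example, node bootstrap settings), and some managed node offerings may not expose it.

```yaml
# Kubelet configuration fragment. When disk usage on the node's image
# filesystem rises above the high threshold, the kubelet deletes images not
# used by any container until usage drops to the low threshold.
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
imageGCHighThresholdPercent: 80   # start pruning unused images at 80% disk usage
imageGCLowThresholdPercent: 70    # stop once usage is back down to 70%
imageMinimumGCAge: 2m             # never delete images pulled less than 2 minutes ago
```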

For example, persistent volumes, such as those backed by Amazon EBS, have a lifecycle independent of pods. They continue to exist, and to be billed, after the pods they were attached to are terminated. It’s essential to delete these unattached volumes to avoid paying for unused storage.
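On the Kubernetes side, a volume's fate is decided by its reclaim policy. The StorageClass below is a sketch that assumes the AWS EBS CSI driver is installed; the class name is hypothetical. With `reclaimPolicy: Delete`, the EBS volume is removed when its PersistentVolumeClaim is deleted, rather than lingering as it would under `Retain`.

```yaml
# StorageClass for the AWS EBS CSI driver. Deleting a PVC of this class
# also deletes the underlying EBS volume.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gp3-delete                        # hypothetical name
provisioner: ebs.csi.aws.com
parameters:
  type: gp3
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer   # create the volume only when a pod needs it
allowVolumeExpansion: true
```

Deleting a pod does not delete its claim, so PVCs still have to be removed when an application is torn down. Volumes created with `Retain`, or outside Kubernetes, still need a manual sweep, for example by looking for EBS volumes in the `available` (unattached) state.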

Use Ephemeral Environments

When deploying an application that is not meant for production, such as a development version, you can deploy it in an ephemeral environment. In an ephemeral environment, the application is scaled down to zero instances until an HTTP request is made to it.

When a request arrives, only the number of instances needed to serve it is created. This saves infrastructure costs and prevents wasted resources. To learn more about ephemeral environments, please check out this blog.
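This is not Devtron's configuration. As one plain-Kubernetes illustration of request-driven scale-to-zero, a Knative Serving Service behaves as described above. The sketch assumes Knative Serving is installed with scale-to-zero enabled (its default). The service and namespace names are hypothetical, and the image reuses the one from the earlier pod example.

```yaml
# Knative Service for a development build of the app. With no pods running,
# Knative holds incoming HTTP requests, starts a pod, and later scales back
# to zero once traffic stops.
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: app-dev              # hypothetical name
  namespace: dev             # hypothetical namespace
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/min-scale: "0"   # allow scaling to zero
        autoscaling.knative.dev/max-scale: "3"   # cap capacity for a dev environment
    spec:
      containers:
        - image: images.my-company.example/app:v4
          resources:
            requests:
              cpu: "250m"
              memory: "64Mi"
```

The trade-off is a cold start: the first request after an idle period waits while a pod starts. That is usually acceptable for development environments.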

Using Autoscalers such as Karpenter

In addition to ephemeral environments, using the right autoscalers helps users get seamless application performance while keeping cloud costs under control. Pod-level autoscalers, such as the Horizontal Pod Autoscaler, adjust the number of pod replicas based on signals such as current CPU or memory utilization, request volume, or custom metrics collected by a system such as Prometheus. A node autoscaler such as Karpenter then adds nodes when pods cannot be scheduled. It also removes or consolidates nodes that are empty or underutilized, so the cluster's node capacity follows what the pods actually request.
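The pod side of that setup can be as small as the HorizontalPodAutoscaler below. It is a sketch that assumes metrics-server is installed and that a Deployment named `frontend` (hypothetical) sets CPU requests, since utilization is measured against requests. The replica bounds and target are illustrative.

```yaml
# Keeps average CPU utilization of the frontend pods near 70% of their
# CPU requests by adding or removing replicas between 2 and 10.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: frontend
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: frontend           # hypothetical Deployment
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
```

When new replicas don't fit on existing nodes, they stay Pending, and that is the signal a node autoscaler such as Karpenter acts on.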

Time-Based Autoscaling

There are certain times during the week when developers do not need the development environment, for example on weekends, during holidays, or outside working hours. During these periods, the resources allocated to those environments sit idle.

For such scenarios, it makes sense to use a time-based autoscaler such as Winter Soldier, which scales resources down at a scheduled time and back up at another. To learn more about time-based autoscalers, please read this blog.
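This is not Winter Soldier's configuration format. As a do-it-yourself illustration of the same idea, the sketch below uses a CronJob to scale every Deployment in a hypothetical `dev` namespace to zero at 20:00 UTC on weekdays. The namespace, names, schedule, and `bitnami/kubectl` image are assumptions; any image that ships `kubectl` would do. The `timeZone` field needs Kubernetes 1.27 or later.

```yaml
# Identity and permissions for the scaling job, limited to the dev namespace.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: env-scaler
  namespace: dev
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: env-scaler
  namespace: dev
rules:
  - apiGroups: ["apps"]
    resources: ["deployments", "deployments/scale"]
    verbs: ["get", "list", "patch", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: env-scaler
  namespace: dev
subjects:
  - kind: ServiceAccount
    name: env-scaler
    namespace: dev
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: env-scaler
---
# Scales all Deployments in dev to zero at 20:00 UTC, Monday to Friday.
apiVersion: batch/v1
kind: CronJob
metadata:
  name: dev-scale-down
  namespace: dev
spec:
  schedule: "0 20 * * 1-5"
  timeZone: "Etc/UTC"
  jobTemplate:
    spec:
      template:
        spec:
          serviceAccountName: env-scaler
          restartPolicy: OnFailure
          containers:
            - name: kubectl
              image: bitnami/kubectl:latest   # assumption: any image with kubectl works
              command: ["kubectl", "scale", "deployment", "--all", "--replicas=0", "-n", "dev"]
```

A mirror CronJob (for example `"0 8 * * 1-5"`) would scale the Deployments back up. Because `--replicas=0` discards the original counts, they need to be recorded somewhere, such as in Git or an annotation. Dedicated time-based autoscalers exist to handle this kind of bookkeeping.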

Real-Time Build Infrastructure

To build and deploy application code, a CI/CD pipeline might run multiple times a day. Every time the pipeline is triggered, some infrastructure must be provisioned for it to perform its tasks. Keeping a dedicated VM or node around for this is wasteful, because it sits idle between runs.

Since your applications already run in Kubernetes, you can provision a pod to act as the runner for the pipeline. This makes better use of the cluster's resources while minimizing infrastructure costs. In Devtron’s CI pipeline, you can specify how many resources to allocate to the build infrastructure. Whenever the pipeline runs, only the specified resources are allocated to it.
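Devtron configures this through its own pipeline settings, which are not shown here. In plain Kubernetes terms, a pod-based runner amounts to something like the Job below: it requests fixed resources, runs the build once, and deletes itself afterwards. The namespace, builder image, build command, and resource values are all hypothetical. Because the manifest uses `generateName`, it must be submitted with `kubectl create` rather than `kubectl apply`.

```yaml
# One-off build runner with explicit resources. The Job and its pod are
# deleted automatically after completion, so no idle runner is left behind.
apiVersion: batch/v1
kind: Job
metadata:
  generateName: app-build-     # each trigger creates a uniquely named Job
  namespace: ci                # hypothetical namespace
spec:
  ttlSecondsAfterFinished: 600 # delete the Job and its pod 10 minutes after it finishes
  activeDeadlineSeconds: 1800  # stop builds that run longer than 30 minutes
  backoffLimit: 0              # do not retry failed builds automatically
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: build
          image: images.my-company.example/builder:latest   # hypothetical build image
          command: ["sh", "-c", "make build"]               # hypothetical build command
          resources:
            requests:
              cpu: "1"
              memory: "2Gi"
            limits:
              cpu: "2"
              memory: "4Gi"
```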

Using AWS Spot Instances

In a Kubernetes cluster, all application workloads run on nodes. AWS Spot Instances can provide some of those nodes. Spot Instances use spare EC2 capacity at a large discount compared with On-Demand pricing, but AWS can reclaim them with a two-minute interruption notice.

An ideal strategy is to keep a small baseline of On-Demand nodes so that the application always stays running. On top of that baseline, configure an autoscaler such as Karpenter to provision Spot Instances as needed when workloads scale.
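Assuming Karpenter v1 is installed and an `EC2NodeClass` named `default` already exists, a NodePool restricted to Spot capacity might look like the sketch below. The baseline On-Demand nodes are assumed to come from a separate node group. The pool name and CPU limit are hypothetical.

```yaml
# Karpenter NodePool that launches only Spot capacity for burst workloads.
apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: spot-burst              # hypothetical name
spec:
  template:
    spec:
      requirements:
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["spot"]
        - key: kubernetes.io/arch
          operator: In
          values: ["amd64"]     # keep x86-only images schedulable
      nodeClassRef:
        group: karpenter.k8s.aws
        kind: EC2NodeClass
        name: default           # assumed to exist already
  limits:
    cpu: "64"                   # upper bound on total vCPUs this pool may launch
  disruption:
    consolidationPolicy: WhenEmptyOrUnderutilized
    consolidateAfter: 1m        # remove or replace idle nodes after a minute
```

Leaving instance types unrestricted lets Karpenter choose from many Spot pools, which generally lowers the chance that all nodes are interrupted at once.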

Using AWS Graviton Instances

AWS Graviton instances are powered by a family of Arm-based processors designed by AWS to deliver high performance while maintaining energy efficiency. The highlight of AWS Graviton instances is that they consume 5% less memory and 2% less CPU than regular EC2 instances. While this may seem like a small number, it adds up when you’re running workloads at scale. Because Graviton is Arm-based, container images must be built for the arm64 architecture (or as multi-architecture images) before workloads can run on these instances. To learn how to best leverage AWS Graviton instances, please take a look at this webinar.
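Once the cluster has Arm nodes, for example a Graviton node group or a Karpenter NodePool that allows `arm64`, workloads can be steered onto them with the well-known `kubernetes.io/arch` label. The sketch below assumes the app image is published as a multi-architecture image. The Deployment name is hypothetical.

```yaml
# Deployment pinned to arm64 nodes such as Graviton-based instances.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend-arm            # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: frontend-arm
  template:
    metadata:
      labels:
        app: frontend-arm
    spec:
      nodeSelector:
        kubernetes.io/arch: arm64   # label set automatically by the kubelet
      containers:
        - name: app
          image: images.my-company.example/app:v4   # assumed to include an arm64 variant
          resources:
            requests:
              cpu: "250m"
              memory: "64Mi"
            limits:
              cpu: "500m"
              memory: "128Mi"
```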

Conclusion

In this blog, we saw that optimizing Kubernetes costs is all about using resources efficiently. Organizations can significantly reduce their Kubernetes costs by understanding the compute, network, and storage factors behind the bill. They can then apply strategies like setting resource limits, sharing clusters, using discounted and Spot compute, autoscaling, ephemeral environments, and cleaning up unused resources. These strategies cut expenses while keeping resource utilization efficient, so our Kubernetes setup stays cost-effective without sacrificing performance.