ArgoCD is the name that comes up when the goal is lightning-fast, reliable deployments to Kubernetes clusters. As a cloud-native, GitOps-enabled tool, ArgoCD has simplified Kubernetes deployments: it is easy to use, easy to get started with, and offers reliable and robust deployments through built-in capabilities such as automated sync, rollback support, drift detection, advanced deployment strategies, RBAC integration, and multi-cluster support. As the footprint of services and applications grows, however, organizations start to face challenges: scaling becomes tough, maintaining reliable deployment pipelines is difficult, and teams often struggle to manage day-to-day operations.

In this blog, we will look at some of the challenges and limitations teams often face while scaling their services, and discuss how Devtron, an open-source Kubernetes management platform, reduces the operational overhead of managing ArgoCD instances.

There are a few common architectural patterns for using ArgoCD to handle deployments to Kubernetes clusters: a single ArgoCD instance for all clusters (hub-spoke model), an ArgoCD instance per cluster, and an ArgoCD instance per group of Kubernetes environments. Arranging ArgoCD and your Kubernetes clusters in one of these architectures lets you manage GitOps-based application deployments across your environments. While these patterns facilitate GitOps-based deployments, they become complex and difficult to manage at scale, and at a certain point they become a roadblock for scaling operations.

Let’s look at each of these implementation patterns and their pros and cons.

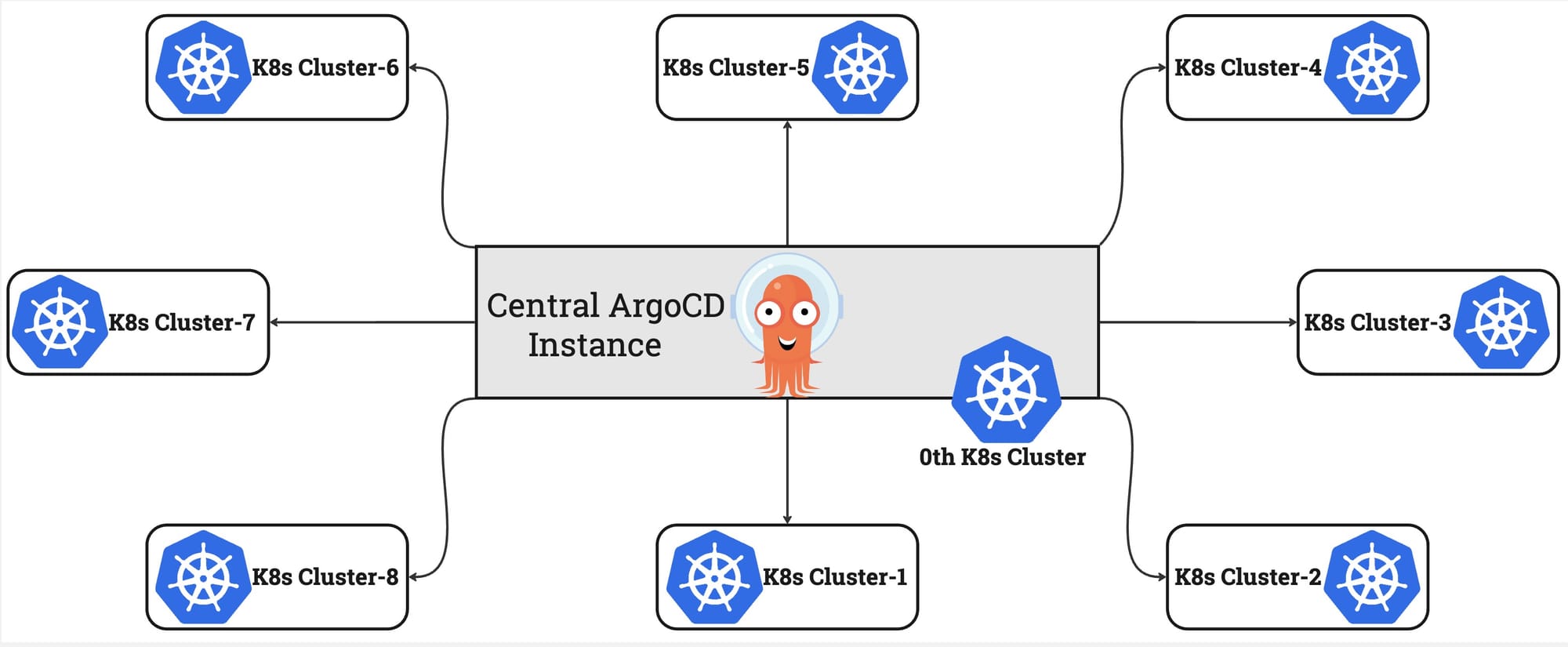

Single ArgoCD Instance for Multiple Kubernetes Clusters

Using a single ArgoCD instance to manage deployments to multiple Kubernetes clusters is a popular way to get started with ArgoCD. This approach gives users one central place, the single ArgoCD instance, to visualize and operate their Kubernetes deployments and applications. It also makes life easier for operators, who can centralize configuration management, repository credential storage, SSO integration, API key management, ArgoCD CRD handling, and RBAC policy implementation and control.

This implementation requires you to spin up and manage a central Kubernetes cluster (cluster 0 in the image above) that hosts the ArgoCD control plane. To manage deployments to other Kubernetes clusters, ArgoCD needs direct access to the Kubernetes API server of every cluster. You can either expose these API servers publicly or keep each cluster in a separate VPC connected to the central cluster, which requires complex networking configuration. To access these clusters, ArgoCD stores their admin-level credentials centrally as Kubernetes Secrets in the central cluster.

Once ArgoCD is running in the central cluster and the other clusters are connected, the operator can manage deployments to all of them from the central ArgoCD instance. Further, the ApplicationSet controller and its Cluster generator let operators generate an Application for each cluster registered with the central ArgoCD instance, while RBAC policies and AppProjects provide controlled access to applications across the instance.
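To make the Cluster generator more concrete, the Python sketch below simulates its behavior. It reads simplified stand-ins for ArgoCD's cluster Secrets, which are identified by the `argocd.argoproj.io/secret-type: cluster` label. It can optionally filter them by labels, in the spirit of the generator's `matchLabels` selector. It then renders one Application per cluster by substituting the `{{name}}` and `{{server}}` parameters into a template. The cluster names, server URLs, repository, and helper functions are hypothetical. This is not ArgoCD's implementation, only an illustration of what the generator produces.

```python
# Simplified stand-ins for ArgoCD cluster Secrets. Real Secrets store
# name/server/config base64-encoded under `data`; here the values are plain
# strings and the credential `config` field is omitted (assumption).
CLUSTER_SECRET_TYPE = ("argocd.argoproj.io/secret-type", "cluster")

cluster_secrets = [
    {"metadata": {"labels": {"argocd.argoproj.io/secret-type": "cluster", "env": "prod"}},
     "data": {"name": "prod-us", "server": "https://prod-us.example.com"}},
    {"metadata": {"labels": {"argocd.argoproj.io/secret-type": "cluster", "env": "dev"}},
     "data": {"name": "dev-eu", "server": "https://dev-eu.example.com"}},
    # Repository credentials use a different secret-type and must be skipped.
    {"metadata": {"labels": {"argocd.argoproj.io/secret-type": "repository"}},
     "data": {"url": "https://github.com/example/apps.git"}},
]

# Application template using the Cluster generator's {{name}} and {{server}} parameters.
APP_TEMPLATE = {
    "metadata": {"name": "{{name}}-guestbook"},
    "spec": {
        "project": "default",
        "source": {"repoURL": "https://github.com/example/apps.git",
                   "path": "guestbook", "targetRevision": "HEAD"},
        "destination": {"server": "{{server}}", "namespace": "guestbook"},
    },
}


def render(value, params):
    """Recursively replace {{key}} placeholders in every string of a nested structure."""
    if isinstance(value, dict):
        return {k: render(v, params) for k, v in value.items()}
    if isinstance(value, list):
        return [render(v, params) for v in value]
    if isinstance(value, str):
        for key, param in params.items():
            value = value.replace("{{" + key + "}}", param)
    return value


def cluster_generator(secrets, match_labels=None):
    """Yield {name, server} parameters for each cluster Secret matching the labels."""
    label_key, label_value = CLUSTER_SECRET_TYPE
    for secret in secrets:
        labels = secret["metadata"].get("labels", {})
        if labels.get(label_key) != label_value:
            continue  # not a cluster Secret
        if match_labels and any(labels.get(k) != v for k, v in match_labels.items()):
            continue  # filtered out by the label selector
        yield {"name": secret["data"]["name"], "server": secret["data"]["server"]}


def generate_applications(secrets, template, match_labels=None):
    """Render one Application per selected cluster; the template itself is not modified."""
    return [render(template, params) for params in cluster_generator(secrets, match_labels)]


for app in generate_applications(cluster_secrets, APP_TEMPLATE):
    print(app["metadata"]["name"], "->", app["spec"]["destination"]["server"])
```

Running it prints `prod-us-guestbook -> https://prod-us.example.com` and `dev-eu-guestbook -> https://dev-eu.example.com`. The repository Secret is skipped because its `secret-type` label is not `cluster`. Passing `{"env": "prod"}` as `match_labels` narrows the output to the production cluster.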

While this implementation seems great for managing deployments across multiple Kubernetes clusters, it has some downsides. First, you need to spin up and maintain an additional Kubernetes cluster just to host the central ArgoCD instance. Moreover, the single ArgoCD instance becomes a single point of failure for the system. Exposing the Kubernetes API servers of all clusters to a single ArgoCD instance also raises serious security concerns. If a threat actor compromises the central Kubernetes cluster, they can easily gain access to every connected cluster, because the credentials for those clusters are stored as Secrets in the central cluster.

Pros

- Provides a centralized place to manage and visualize deployments across multiple Kubernetes clusters.

- Ease of installation and maintenance.

- Consistent GitOps workflow implementation across clusters.

- Easier backup and disaster recovery with a single control plane.

- Straightforward use of the ApplicationSet controller and its Cluster generator to target every registered cluster.

Cons

- Single point of failure: if the central ArgoCD instance goes down, deployments to every connected cluster are affected.

- The centralized ArgoCD instance becomes a bottleneck as the number of clusters and applications grows.

- Admin credentials for all clusters are stored in one place, so a threat actor who compromises it can take over the whole system.

- The central ArgoCD instance needs continuous communication with every other Kubernetes cluster, which can incur heavy networking costs.

- To allow this communication, you need to open holes in your firewalls so the central instance can reach each cluster's API server.

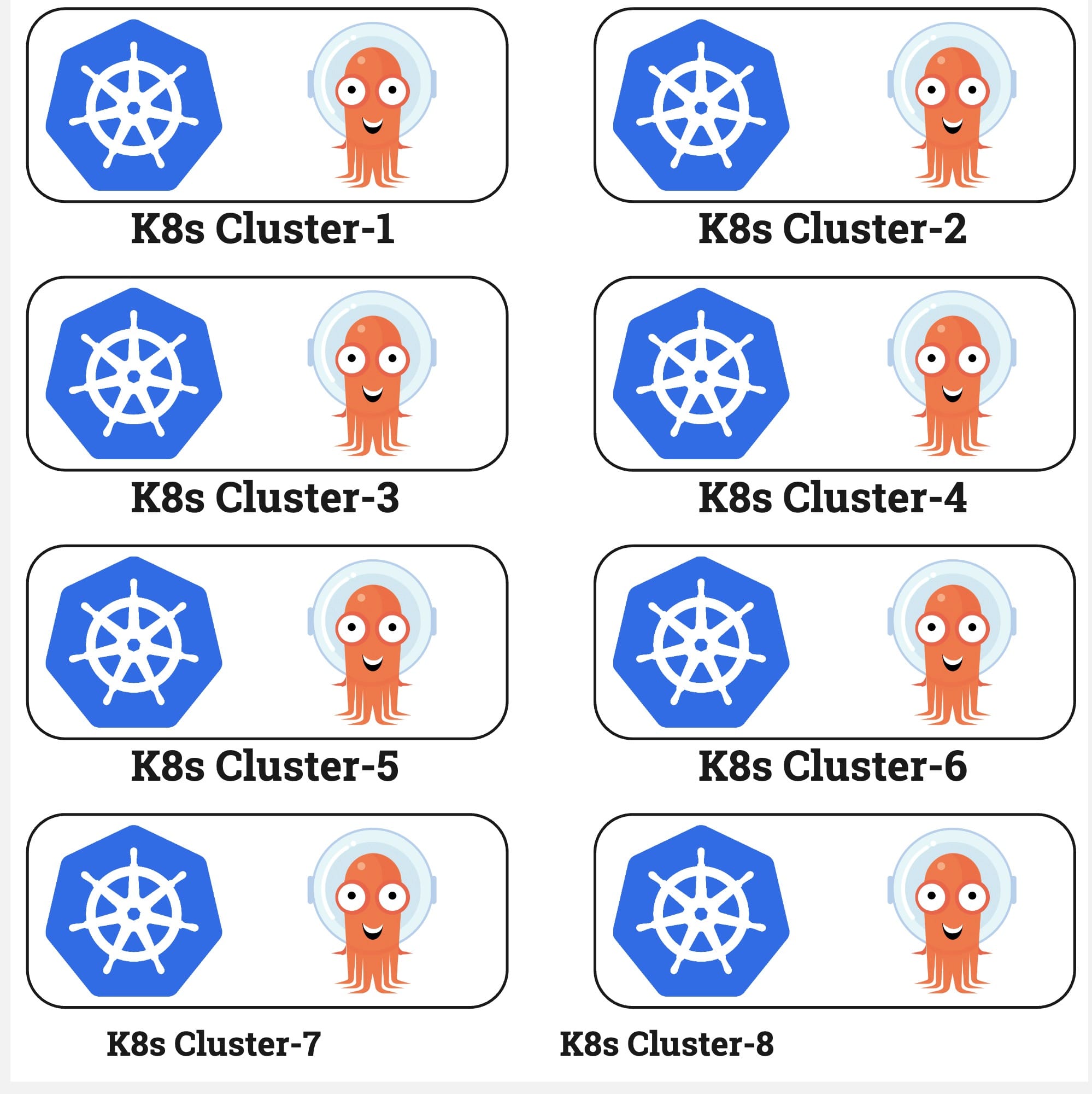

ArgoCD Instance Per Kubernetes Cluster

This is the most common implementation of ArgoCD for managing GitOps-enabled deployments to Kubernetes clusters. In this setup, each cluster has its own ArgoCD instance, which handles deployments only for that specific cluster. To understand this better, consider multiple Kubernetes clusters, some running production workloads and others dedicated to internal teams. With a separate ArgoCD instance for each cluster, each team keeps complete control over, and isolation of, its deployments. This keeps production clusters isolated from development environments, reducing the risk of accidental deployments or configuration changes. Teams can independently manage their own ArgoCD configurations, RBAC policies, and deployment strategies without affecting other clusters. This makes it easier to implement cluster-specific security policies and compliance requirements.

This implementation is more secure and scalable than a single ArgoCD instance, because you don't have to expose your Kubernetes API servers or hand admin access to an external Kubernetes cluster. Having a separate ArgoCD instance per cluster also gives teams the flexibility to configure each instance for their own needs. Moreover, the credentials ArgoCD requires can be scoped to that specific cluster, which adds a layer of security. Because each cluster's deployments are handled by its own ArgoCD instance, scaling becomes easier, as the load is distributed across environments.
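With an instance per cluster, Applications typically target `https://kubernetes.default.svc`, the address ArgoCD uses for the cluster it runs in, so no external cluster credentials are needed. The minimal sketch below assumes an AppProject-style allow-list that permits only that in-cluster destination. It shows how such a restriction keeps an instance from reaching other clusters. The app names, the external URL, and the helper functions are hypothetical, and the glob matching only approximates how ArgoCD evaluates AppProject destinations.

```python
from fnmatch import fnmatchcase

# Address ArgoCD uses for the cluster it is itself running in.
IN_CLUSTER = "https://kubernetes.default.svc"

# Simplified, AppProject-style allow-list (an assumption for this sketch):
# this instance may deploy to any namespace, but only on its own cluster.
ALLOWED_DESTINATIONS = [{"server": IN_CLUSTER, "namespace": "*"}]


def destination_allowed(app, allowed=ALLOWED_DESTINATIONS):
    """True if the app's destination matches at least one allow-list rule (glob match)."""
    dest = app["spec"]["destination"]
    return any(
        fnmatchcase(dest["server"], rule["server"])
        and fnmatchcase(dest["namespace"], rule["namespace"])
        for rule in allowed
    )


def find_violations(apps, allowed=ALLOWED_DESTINATIONS):
    """Names of Applications that would reach outside this instance's own cluster."""
    return [app["metadata"]["name"] for app in apps if not destination_allowed(app, allowed)]


apps = [
    {"metadata": {"name": "payments"},
     "spec": {"destination": {"server": IN_CLUSTER, "namespace": "payments"}}},
    {"metadata": {"name": "cross-cluster-report"},
     "spec": {"destination": {"server": "https://prod-eu.example.com", "namespace": "default"}}},
]

print(find_violations(apps))  # ['cross-cluster-report']
```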

While this implementation is secure and scalable, it has significant drawbacks. When each Kubernetes cluster maintains its own ArgoCD instance, the setup assumes that every team has comprehensive knowledge of ArgoCD management. This hurts developer productivity, as teams must invest time in learning and managing their individual ArgoCD instances rather than focusing on their core development tasks. Moreover, teams must handle the overhead of managing robust RBAC policies, configurations, and credentials for each instance.
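One concrete piece of this overhead is keeping RBAC consistent across instances. ArgoCD reads RBAC rules from the `policy.csv` key of its `argocd-rbac-cm` ConfigMap. Permissions use `p, subject, resource, action, object, effect` lines, and role bindings use `g, group, role` lines. The sketch below is a hypothetical helper, not an ArgoCD tool. It compares each instance's policy against a baseline and reports missing and extra rules. The role and group names are made up, and the comparison is purely textual.

```python
def parse_policy_csv(text):
    """Return ArgoCD policy.csv rules as a set of tuples.

    Blank lines and '#' comments are skipped and whitespace around fields is
    stripped. This naive comma split does not handle quoted fields and does not
    evaluate Casbin semantics (rules granting the same access via different
    wildcards are still reported as different).
    """
    rules = set()
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            rules.add(tuple(field.strip() for field in line.split(",")))
    return rules


def rbac_drift(baseline_csv, instance_policies):
    """Compare each instance's policy.csv with a baseline; report only drifting instances."""
    baseline = parse_policy_csv(baseline_csv)
    report = {}
    for instance, csv_text in instance_policies.items():
        rules = parse_policy_csv(csv_text)
        missing, extra = baseline - rules, rules - baseline
        if missing or extra:
            report[instance] = {"missing": sorted(missing), "extra": sorted(extra)}
    return report


BASELINE = """
# Developers may view, but not sync, applications in every project.
p, role:dev-readonly, applications, get, */*, allow
g, my-org:developers, role:dev-readonly
"""

# Hypothetical per-instance policies; in practice, read from each argocd-rbac-cm.
policies = {
    "argocd-prod": BASELINE,
    "argocd-staging": BASELINE + "p, role:dev-readonly, applications, sync, */*, allow\n",
    "argocd-dev": "p, role:dev-readonly, applications, get, */*, allow\n",
}

report = rbac_drift(BASELINE, policies)
for instance, diff in report.items():
    print(instance, diff)
```

Here `argocd-staging` is flagged for an extra `sync` permission and `argocd-dev` for a missing group binding. Running such a check is itself one more task that multiplies with the number of instances.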

Pros

- Provides complete isolation between deployments across different environments and teams.

- Provides enhanced security, as there is no need to share admin access and credentials with an external service.

- Eliminates the single point of failure for the system.

- Improves security posture by having cluster-scoped credentials and secrets.

Cons

- This approach adds significant operational overhead for your developers, as each team has to manage its own ArgoCD instance.

- Performing operations like implementing/updating security policies, version upgrades, and configuration changes requires context switching across multiple ArgoCD instances.

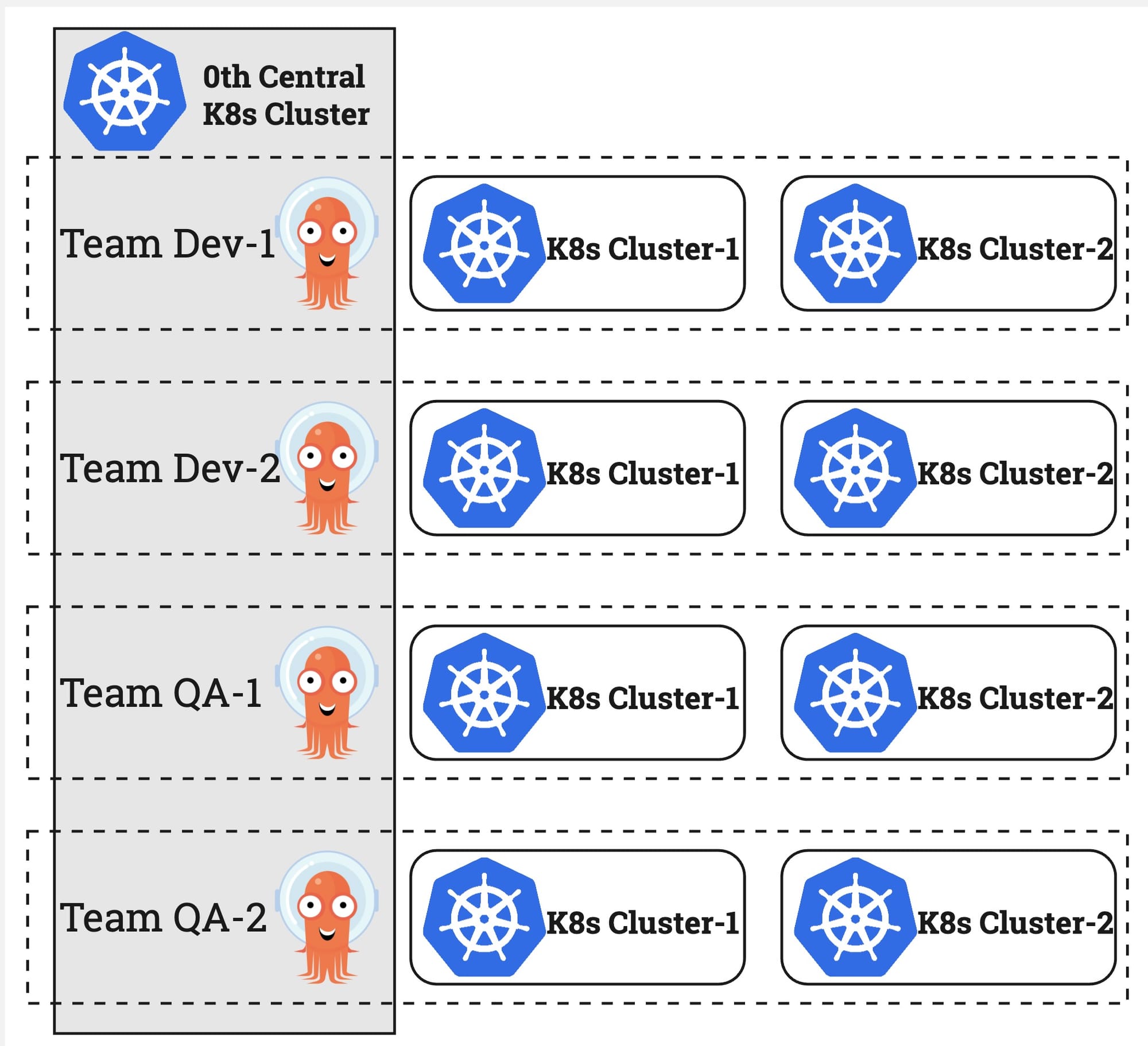

ArgoCD Instance Per Logical Group of Kubernetes Clusters

This implementation, known as a multi-tenant ArgoCD installation, offers a balanced compromise between the single-instance and instance-per-cluster approaches. A central cluster hosts dedicated ArgoCD instances for specific teams and their respective clusters. For example, if two development teams each manage two Kubernetes clusters, the central Kubernetes cluster hosts two ArgoCD instances, each responsible for its team's designated clusters. This setup particularly benefits organizations with multiple environments that need to be managed separately, providing a middle ground between centralized management and team isolation.

Pros

- The load is distributed across multiple ArgoCD instances, one per group.

- Failure of one ArgoCD instance will not affect the other groups.

- Credentials are scoped to specific groups.

- Each group's ArgoCD instance provides complete visibility into all of that group's deployments.

Cons

- The operator still needs to manage multiple instances of ArgoCD, which are dedicated to different groups.

- The ArgoCD instances require tuning beyond a certain scale.

- Needs a separate Kubernetes cluster to host the ArgoCD instances.
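To compare the three patterns side by side, the Python sketch below models a topology as two mappings. One records which cluster hosts each ArgoCD instance. The other records which clusters each instance deploys to, and therefore holds credentials for. From these, the model reports three things: how many instances must be operated, how many clusters stop syncing if one instance fails, and which clusters an attacker could reach after breaching a given cluster. The four-cluster setup mirrors the two-teams example above. The model is a deliberate, hypothetical simplification; for example, it ignores network reachability and per-namespace RBAC.

```python
from dataclasses import dataclass


@dataclass
class Topology:
    """Hypothetical model of an ArgoCD rollout.

    host_of:    ArgoCD instance -> cluster that runs it
    targets_of: ArgoCD instance -> clusters it deploys to (and holds credentials for)
    """
    host_of: dict
    targets_of: dict

    def instances_to_operate(self):
        return len(self.host_of)

    def worst_outage(self):
        """Largest number of clusters that stop syncing if a single instance fails."""
        return max(len(targets) for targets in self.targets_of.values())

    def credential_exposure(self, breached_cluster):
        """Clusters reachable by an attacker who controls `breached_cluster`:
        the cluster itself plus every target of the instances it hosts."""
        exposed = {breached_cluster}
        for instance, host in self.host_of.items():
            if host == breached_cluster:
                exposed |= self.targets_of[instance]
        return exposed


CLUSTERS = ["c1", "c2", "c3", "c4"]

hub_spoke = Topology(host_of={"argocd": "hub"}, targets_of={"argocd": set(CLUSTERS)})
per_cluster = Topology(host_of={f"argocd-{c}": c for c in CLUSTERS},
                       targets_of={f"argocd-{c}": {c} for c in CLUSTERS})
# Two teams with two clusters each; both instances run on the central cluster.
per_group = Topology(host_of={"argocd-team-a": "hub", "argocd-team-b": "hub"},
                     targets_of={"argocd-team-a": {"c1", "c2"}, "argocd-team-b": {"c3", "c4"}})

for label, topo, breached in [("hub-spoke", hub_spoke, "hub"),
                              ("per-cluster", per_cluster, "c1"),
                              ("per-group", per_group, "hub")]:
    print(label, "instances:", topo.instances_to_operate(),
          "worst outage:", topo.worst_outage(),
          f"exposed if {breached} is breached:", len(topo.credential_exposure(breached)))
```

Under these assumptions, the hub-spoke model needs only one instance, but a single failure affects all four clusters and a breach of the hub exposes every cluster. An instance per cluster limits both the outage and the exposure to one cluster, at the cost of operating four instances. The per-group model sits in between for outages (two clusters). However, because both instances share the central host, a breach of that host still exposes every group's credentials.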

So far, we've explored various ArgoCD implementations for enabling GitOps-based deployments across Kubernetes clusters. Each implementation, whether a single instance, an instance per cluster, or multi-tenant, offers distinct advantages and challenges. However, they all share a common challenge: the inherent operational overhead and complexity of managing ArgoCD instances. This challenge spans maintaining control planes, handling upgrades, managing configurations, and addressing other operational requirements.

Limitations of ArgoCD

The previous section discussed how managing ArgoCD introduces operational challenges and overhead for developers. Now let's take a look at the limitations of ArgoCD itself.

PreDeployment/PostDeployment Operations

While ArgoCD excels at Kubernetes deployments, it lacks native support for PreCD/PostCD pipeline stages, which are essential for executing tasks before and after a deployment. For instance, you might want to scan Docker images before they are deployed to production environments, or run load tests against applications after a deployment. ArgoCD's resource hooks (such as PreSync and PostSync) can run Kubernetes resources, typically Jobs, around an individual sync, but they are not full pipeline stages. To run these operations as part of a deployment pipeline, you need external tools or custom scripts. This limits complete deployment pipeline automation and adds tooling to maintain.
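The ordering that PreCD/PostCD stages provide can be sketched in a few lines of Python. Pre-deployment checks run first and can block the release. The deployment runs only if they all pass. Post-deployment checks run afterwards and can flag the release for rollback. The stage functions, the vulnerability count, and the 300 ms latency threshold are hypothetical placeholders. A real pipeline would call an image scanner and a load-testing tool, and the deploy step would typically commit a new image tag to Git for ArgoCD to sync.

```python
deployed = []  # records what the hypothetical deploy step pushed


def image_scan(release):
    """Pre-deployment gate (hypothetical): block images with critical vulnerabilities."""
    return release["critical_vulns"] == 0


def deploy(release):
    """Stand-in for the GitOps step, e.g. committing the new image tag for ArgoCD to sync."""
    deployed.append(release["image"])


def load_test(release):
    """Post-deployment check (hypothetical): p95 latency must stay at or under 300 ms."""
    return release["p95_ms"] <= 300


def run_pipeline(release, pre_stages, deploy_step, post_stages):
    """Run pre-stages, deploy only if all of them pass, then run post-stages."""
    log = []
    for stage in pre_stages:
        passed = stage(release)
        log.append((stage.__name__, passed))
        if not passed:
            return "blocked", log  # nothing is deployed
    deploy_step(release)
    log.append((deploy_step.__name__, True))
    for stage in post_stages:
        passed = stage(release)
        log.append((stage.__name__, passed))
        if not passed:
            return "needs-rollback", log  # deployed, but failed verification
    return "succeeded", log


good = {"image": "shop:1.4.1", "critical_vulns": 0, "p95_ms": 180}
vulnerable = {"image": "shop:1.4.2", "critical_vulns": 3, "p95_ms": 180}

good_result = run_pipeline(good, [image_scan], deploy, [load_test])
blocked_result = run_pipeline(vulnerable, [image_scan], deploy, [load_test])
print(good_result)
print(blocked_result)
print(deployed)
```

The vulnerable image never reaches the deploy step, so only `shop:1.4.1` appears in `deployed`.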

Application Promotions Across Environments

Every application follows a software lifecycle spanning development, testing, and production phases. ArgoCD lacks built-in functionality for promoting applications between environments, so teams rely on external tools or scripts. To understand this limitation, consider a production application that needs a hotfix. Without native promotion capabilities, the hotfix must be carried manually through each environment instead of being promoted directly to production. This turns an urgent fix into a time-consuming process across multiple environments.
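Promotion usually boils down to copying a known-good artifact version from one environment's configuration to the next. The hypothetical Python helper below models each environment's deployed image tag as a dictionary entry, standing in for per-environment values files in Git. By default it only allows a one-step move along `dev -> test -> prod`. An explicit `allow_skip` flag lets a hotfix go straight from dev to production. The environment names, tags, and flag are illustrative assumptions, not any tool's API.

```python
PROMOTION_PATH = ("dev", "test", "prod")


def promote(env_tags, source, target, path=PROMOTION_PATH, allow_skip=False):
    """Return a copy of env_tags where `target` runs the image tag from `source`.

    By default only the next environment on `path` may receive a promotion;
    allow_skip=True permits jumping ahead (e.g. a hotfix from dev straight to prod).
    Promotions never move backwards; unknown environments raise ValueError.
    """
    src, dst = path.index(source), path.index(target)
    if dst <= src or (dst != src + 1 and not allow_skip):
        raise ValueError(f"cannot promote {source} -> {target} along {path}")
    updated = dict(env_tags)  # leave the caller's mapping untouched
    updated[target] = env_tags[source]
    return updated


# Image tag currently deployed per environment (stand-in for per-environment config in Git).
tags = {"dev": "v1.4.1-hotfix", "test": "v1.4.0", "prod": "v1.3.9"}

step = promote(tags, "dev", "test")                      # normal path: dev -> test
hotfix = promote(tags, "dev", "prod", allow_skip=True)   # emergency path: dev -> prod
print(step)
print(hotfix)
```

Making the skip explicit keeps the normal path strict while still giving urgent fixes a deliberate, auditable shortcut.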

Devtron, a CI/CD Platform: GitOps Deployments Without Compromise

Devtron, an open-source platform, streamlines Kubernetes CI/CD operations while providing comprehensive application visibility. So far, we've examined the operational challenges and limitations of ArgoCD implementations. Now, let's explore how Devtron, as a platform, overcomes these obstacles and delivers a complete solution. Deployments made through Devtron to Kubernetes clusters are powered by ArgoCD itself, but Devtron abstracts away the challenges of managing ArgoCD instances. Let’s look at the key features Devtron offers for managing deployments to Kubernetes:

Managing Deployments Over Multiple Kubernetes Clusters

Devtron is a unified platform for managing applications and their deployments across multiple Kubernetes clusters. Instead of the traditional practice of managing a separate ArgoCD instance for each cluster, Devtron lets you onboard multiple Kubernetes clusters to its dashboard and manage applications and deployments from a single UI. With Devtron, developers can execute smooth and quick deployments across multiple Kubernetes clusters without worrying about managing ArgoCD instances.

Promote Applications Across Environments

Once an application is deployed to one environment, developers need to promote it across environments, i.e., from dev to testing and from testing to production. ArgoCD lacks built-in support for promoting applications across environments. Devtron, as a layer over ArgoCD, enables developers to promote their applications smoothly while keeping configurations stable across environments.

Flexible CI/CD Workflows

Devtron lets you manage both CI and CD pipelines from a single dashboard, so you don't need a separate tool such as Jenkins or GitHub Actions to build images. Alongside the CI/CD flow, Devtron also offers pre- and post-stages for CI and CD, where you can run security scans and load tests on your applications. To help maintain production stability, Devtron also provides approval-based deployments, where each deployment must be approved by stakeholders.
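Conceptually, an approval-based deployment is a gate that is checked before the deploy step runs. The sketch below shows a hypothetical policy, not Devtron's implementation. Only approvals from an eligible set of stakeholders count, and each person counts once. As an added assumption, the person who requested the deployment cannot approve it.

```python
def approval_status(approvals, eligible_approvers, min_approvals, requested_by):
    """Decide whether a deployment may proceed.

    Only approvals from eligible stakeholders count, each person counts once,
    and (an assumed policy) the requester cannot approve their own deployment.
    Returns (status, approvals_still_needed).
    """
    counted = {person for person in approvals
               if person in eligible_approvers and person != requested_by}
    remaining = max(min_approvals - len(counted), 0)
    return ("approved" if remaining == 0 else "pending"), remaining


eligible = {"alice", "bob", "carol"}
print(approval_status(["alice", "alice", "dave"], eligible, 2, requested_by="bob"))  # ('pending', 1)
print(approval_status(["alice", "carol"], eligible, 2, requested_by="bob"))          # ('approved', 0)
print(approval_status(["bob", "alice"], eligible, 2, requested_by="bob"))            # ('pending', 1)
```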

Robust RBAC

Devtron lets administrators configure robust RBAC for their Kubernetes infrastructure through an intuitive, simplified UI. To make access to that infrastructure easier, Devtron supports seven SSO providers.

Ease of Rollbacks

As the saying goes, ‘anything that can go wrong will go wrong’, and deployments to production clusters are no exception. Devtron provides one-click rollback, so you can quickly return to a previous stable version.
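A rollback has to decide which earlier release to return to. Assume each release in the deployment history is flagged as stable once it has passed health checks; the `stable` field is hypothetical, for this sketch only. The rollback target is then the newest stable release older than the current one. The release IDs and image tags are made up.

```python
def rollback_target(history, current_id):
    """Return the newest deployment older than `current_id` that was marked stable, or None.

    `history` is ordered oldest -> newest; each entry has an `id`, an `image`,
    and a `stable` flag (e.g. set after health checks passed).
    """
    ids = [entry["id"] for entry in history]
    position = ids.index(current_id)
    for entry in reversed(history[:position]):
        if entry["stable"]:
            return entry
    return None  # no earlier stable release to return to


history = [
    {"id": 11, "image": "shop:1.3.8", "stable": True},
    {"id": 12, "image": "shop:1.3.9", "stable": True},
    {"id": 13, "image": "shop:1.4.0", "stable": False},  # failed health checks
    {"id": 14, "image": "shop:1.4.1", "stable": False},  # current, misbehaving release
]

print(rollback_target(history, current_id=14)["image"])  # shop:1.3.9
```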

Conclusion

When it comes to executing GitOps-based deployments to Kubernetes clusters, ArgoCD excels. However, the patterns for implementing ArgoCD across Kubernetes clusters come with multiple challenges and add operational overhead, as teams have to manage ArgoCD instances alongside their Kubernetes clusters. Devtron stands as a comprehensive solution that leverages ArgoCD's strengths while addressing its limitations. By providing unified cluster management, built-in flexible CI/CD workflows, application promotion capabilities, and robust RBAC controls, Devtron eliminates the complexity of managing multiple ArgoCD instances. At the same time, it delivers the reliability and efficiency that developer and DevOps teams need.