What is Elasticsearch Curator?

Curator is a tool from Elastic (the company behind Elasticsearch) to help manage your Elasticsearch cluster. You can create, backup, and delete some indices, Curator helps make this process automated and repeatable. Curator is written in Python, so it is well supported by almost all operating systems. It can easily manage the huge number of logs that are written to the Elasticsearch cluster periodically by deleting them and thus helps you to save the disk space.

Below mentioned steps, explains how you can clear old indices from your cluster.

1.) Configuring Elasticsearch Curator using Yaml

A very elegant way to configure and automate Elasticsearch Curator execution is using a YAML configuration. Create the file ‘values.yml’ with the following content.

# Default values for elasticsearch-curator.

# This is a YAML-formatted file.

# Declare variables to be passed into your templates.

cronjob:

# At 01:00 every day

schedule: "0 1 * * *"

annotations: {}

labels: {}

concurrencyPolicy: ""

failedJobsHistoryLimit: ""

successfulJobsHistoryLimit: ""

jobRestartPolicy: Never

pod:

annotations: {}

labels: {}

rbac:

# Specifies whether RBAC should be enabled

enabled: false

serviceAccount:

# Specifies whether a ServiceAccount should be created

create: true

# The name of the ServiceAccount to use.

# If not set and create is true, a name is generated using the fullname template

name:

psp:

# Specifies whether a podsecuritypolicy should be created

create: false

image:

repository: untergeek/curator

tag: 5.7.6

pullPolicy: IfNotPresent

hooks:

install: false

upgrade: false

# run curator in dry-run mode

dryrun: false

command: ["/curator/curator"]

env: {}

configMaps:

# Delete indices older than 7 days

action_file_yml: |-

---

actions:

1:

action: delete_indices

description: "Clean up ES by deleting old indices"

options:

timeout_override:

continue_if_exception: False

disable_action: False

ignore_empty_list: True

filters:

- filtertype: age

source: name

direction: older

timestring: '%Y.%m.%d'

unit: days

unit_count: 7

field:

stats_result:

epoch:

exclude: False

# Having config_yaml WILL override the other config

config_yml: |-

---

client:

hosts:

- CHANGEME.host

port: 9200

# url_prefix:

# use_ssl: True

# certificate:

# client_cert:

# client_key:

# ssl_no_validate: True

# http_auth:

# timeout: 30

# master_only: False

# logging:

# loglevel: INFO

# logfile:

# logformat: default

# blacklist: ['elasticsearch', 'urllib3']

resources: {}

# We usually recommend not to specify default resources and to leave this as a conscious

# choice for the user. This also increases chances charts run on environments with little

# resources, such as Minikube. If you do want to specify resources, uncomment the following

# lines, adjust them as necessary, and remove the curly braces after 'resources:'.

# limits:

# cpu: 100m

# memory: 128Mi

# requests:

# cpu: 100m

# memory: 128Mi

priorityClassName: ""

# extraVolumes and extraVolumeMounts allows you to mount other volumes

# Example Use Case: mount ssl certificates when elasticsearch has tls enabled

# extraVolumes:

# - name: es-certs

# secret:

# defaultMode: 420

# secretName: es-certs

# extraVolumeMounts:

# - name: es-certs

# mountPath: /certs

# readOnly: true

# Add your own init container or uncomment and modify the given example.

extraInitContainers: {}

## Don't configure S3 repository till Elasticsearch is reachable.

## Ensure that it is available at http://elasticsearch:9200

##

# elasticsearch-s3-repository:

# image: jwilder/dockerize:latest

# imagePullPolicy: "IfNotPresent"

# command:

# - "/bin/sh"

# - "-c"

# args:

# - |

# ES_HOST=elasticsearch

# ES_PORT=9200

# ES_REPOSITORY=backup

# S3_REGION=us-east-1

# S3_BUCKET=bucket

# S3_BASE_PATH=backup

# S3_COMPRESS=true

# S3_STORAGE_CLASS=standard

# apk add curl --no-cache && \

# dockerize -wait http://${ES_HOST}:${ES_PORT} --timeout 120s && \

# cat <<EOF | curl -sS -XPUT -H "Content-Type: application/json" -d @- http://${ES_HOST}:${ES_PORT}/_snapshot/${ES_REPOSITORY} \

# {

# "type": "s3",

# "settings": {

# "bucket": "${S3_BUCKET}",

# "base_path": "${S3_BASE_PATH}",

# "region": "${S3_REGION}",

# "compress": "${S3_COMPRESS}",

# "storage_class": "${S3_STORAGE_CLASS}"

# }

# }

securityContext:

runAsUser: 16 # run as cron user instead of rootYou can modify the above values.yaml according to the requirements, some modifications are suggested below:

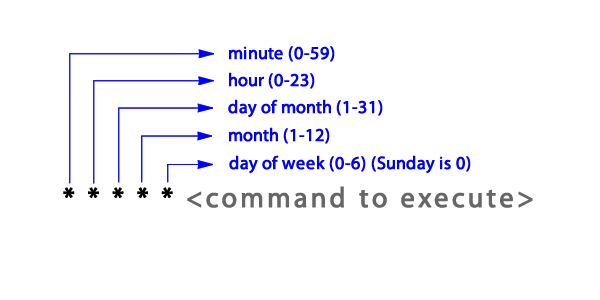

schedule: "0 1 * * *"

* represents all the possible numbers for that position

Schedule Cron Job: In the above code, at line number 7, the Cron Job is Scheduled to run at 1 AM every day. You can schedule your Cron Job accordingly, below is the syntax of Cron Job for your reference.

client:

hosts:

- CHANGEME.host

Elasticsearch host endpoint: In the above code, from line number 79 onwards you can specify multiple Elasticsearch host endpoints under the hosts.

In Cluster Elasticsearch services can be provided using the syntax: .

Example: es1-elasticsearch-client.monitoring

filters:

- filtertype: age

source: name

direction: older

timestring: '%Y.%m.%d'

unit: days

unit_count: 7

field:

stats_result:

epoch:

exclude: False

Delete Old Indices: You can specify how old the indices should be, to get deleted. At line number 69 of the above code, unit_count specifies the indices that are older than 7 days will be deleted.

2.) Install Elasticsearch Curator using Helm

Helm Chart will create a Cron Job to run Elasticsearch Curator. To install the chart, use the following command:

$ helm install stable/elasticsearch-curator

3.)Remove Elasticsearch Curator

To remove the Elasticsearch Curator, use the following command:

$ helm delete es1-curator --purgeFAQ

What is Elasticsearch Curator and How Does it Help Manage Elasticsearch Clusters?

Elasticsearch Curator is a tool that automates the management of Elasticsearch indices, including creating, backing up, and deleting indices. Written in Python, it simplifies maintaining your Elasticsearch cluster by automating tasks such as clearing old indices to save disk space.

How Do I Configure Elasticsearch Curator Using YAML?

You can configure Elasticsearch Curator with a YAML file that defines tasks such as deleting old indices. The configuration includes setting schedules for actions, such as cleaning up indices older than 7 days, and specifying Elasticsearch host endpoints.

How Do I Install Elasticsearch Curator Using Helm?

To install Elasticsearch Curator using Helm, you can use the command helm install stable/elasticsearch-curator. This command sets up a Cron Job to run Curator on a scheduled basis, helping automate the management of your Elasticsearch indices.

How Do I Remove Elasticsearch Curator from My Cluster?

To remove Elasticsearch Curator from your cluster, you can use the Helm command: helm delete es1-curator --purge. This will uninstall Curator and remove any associated resources.