1. AWS EKS simplifies Kubernetes management, but improper subnet/IP planning can cause scalability issues.

2. IP shortages in subnets lead to pods stuck in the ContainerCreating state and deployment failures.

3. Each worker node reserves IPs from subnets via ENIs, which may cause IP wastage if not configured properly.

4. Updating the AWS-node CNI plugin with WARM_IP_TARGET, MINIMUM_IP_TARGET, and WARM_ENI_TARGET helps optimize IP allocation.

5. Using prefix mode, ENI configs, or patching CNI settings can prevent IP exhaustion and ensure cluster scalability.

Introduction

Amazon Elastic Kubernetes Service (EKS) continues to grow in popularity as a managed Kubernetes solution. From resource management to implementing new requirements, EKS offers an easy, user-friendly way to oversee cluster components. Good documentation and regular updates from AWS and its community further simplify operations for end users.

However, scaling your workloads or the cluster itself becomes challenging if it was not planned for during the initial cluster setup. A prominent issue is IP address management, a critical factor in scaling clusters. If your subnets do not have enough available IP addresses, the cluster can run short of IPs, which affects the deployment and functioning of applications. This article explores the issue and a solution to it.

Issues Arising from IP Shortages

If your EKS cluster is running short of IPs, you have likely seen the following error message in the pod events when deploying a new application or scaling an existing one:

# Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "82d95ef9391fdfff08b86bbf6b8c4b6568b4ee7bb81fce" network for pod "example-pod_namespace": network plugin cni failed to set up pod "example-pod_namespace" network: add cmd: failed to assign an IP address to container

Under such conditions, your pod will be stuck in the "ContainerCreating" state, unable to start until an IP address is assigned to it. If you inspect the cluster's private subnets, you will likely find that the subnet hosting the worker node on which the pod was scheduled has 0 available IP addresses.

What could be a possible solution?

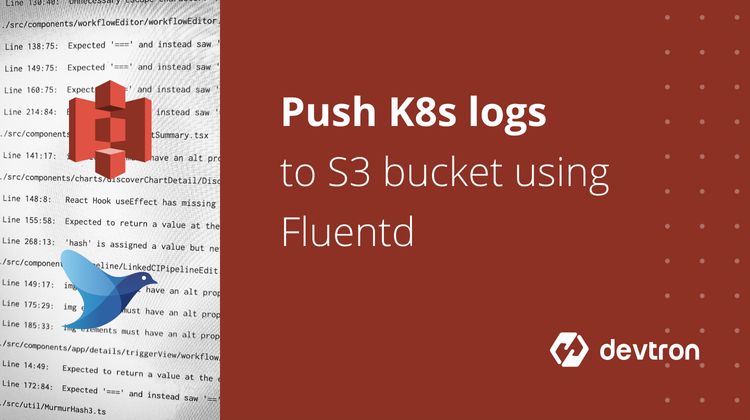

In order to understand the resolution for this issue, we have to first understand the functioning of the EKS cluster and how IP addresses get allocated to worker nodes and further to pods.

Understanding IP Allocation in AWS EKS Clusters

Within an AWS EKS cluster, IP addresses play a vital role in facilitating communication among worker nodes, services, and external entities. By default, IP addresses are managed by the aws-node daemonset, which runs the Amazon VPC CNI plugin. This daemonset allocates IP addresses to each worker node by requesting them from the IP address range of the VPC subnet in which the node runs, so that every pod scheduled on the node can receive a unique IP address.

For detailed information, see the Amazon VPC CNI documentation.

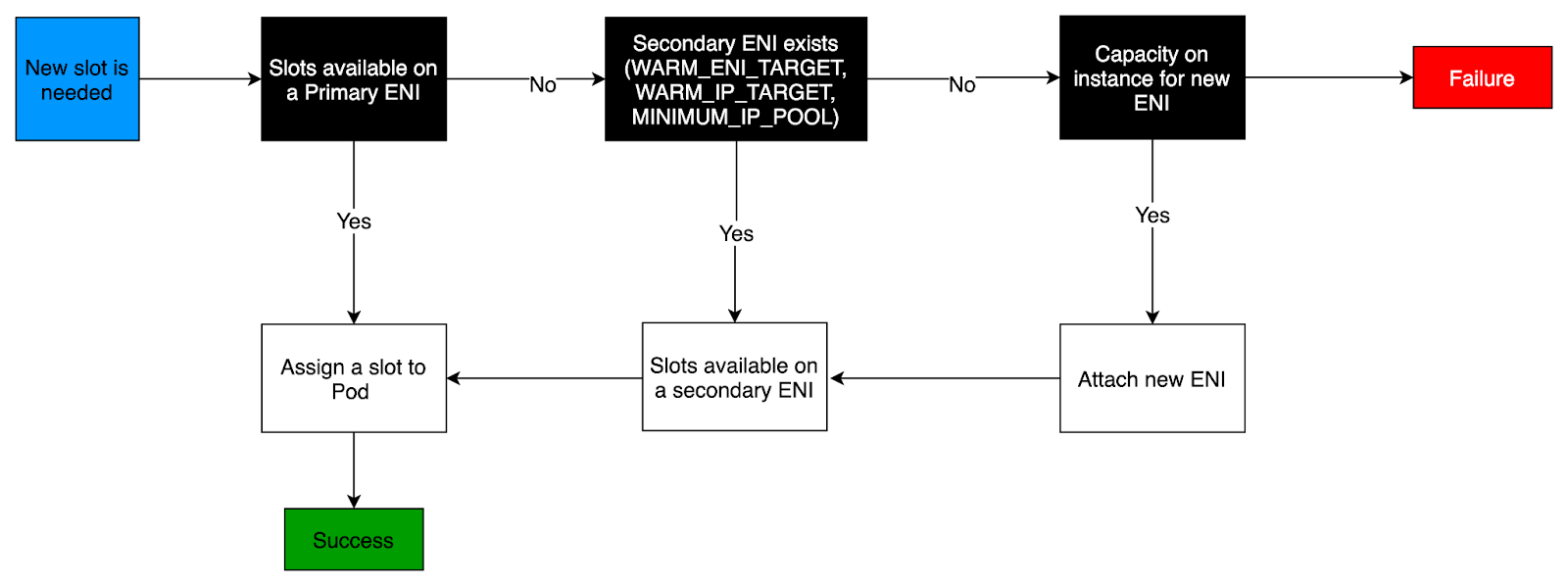

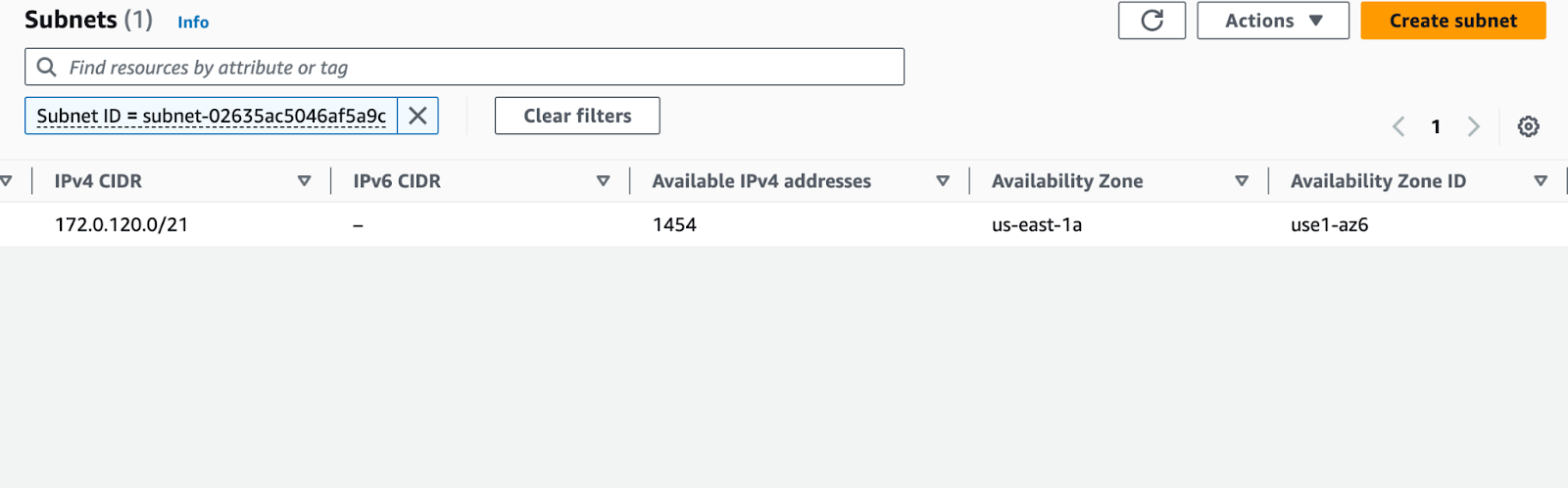

By default, when a new worker node joins the cluster, a fixed number of IP addresses is attached to it, based on its attached network interfaces. For instance, consider a compute-optimized instance such as c5a.2xlarge with 2 network interfaces attached. A total of 30 private IP addresses will be allocated to it from the subnet in which the worker node runs. Whether 5 pods or 15 are running on this worker node, these 30 IP addresses stay attached to it, which wastes a lot of IP addresses. The screenshot below shows the number of IPs attached to an existing node.

To view this, select the worker node in the EC2 console and open the Networking section.

To check the default IP allocation count for each instance type, refer to the official AWS documentation.
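The arithmetic behind the 30-address figure can be sketched in a few lines of Python. This is only an illustration, not the CNI's actual code: the function names are hypothetical, the value of 15 addresses per interface is inferred from the 30-address total rather than stated in this post, and the sketch assumes that each ENI's primary address is not handed out to pods.

```python
def reserved_ips(attached_enis: int, ips_per_eni: int) -> int:
    """Private IPs taken from the subnet by one node's attached ENIs."""
    return attached_enis * ips_per_eni


def idle_ips(attached_enis: int, ips_per_eni: int, running_pods: int) -> int:
    """Reserved IPs that no pod is using.

    Assumption: one address per ENI is its primary address and is not
    available to pods, so it is not counted as idle.
    """
    pod_capacity = attached_enis * (ips_per_eni - 1)
    return max(pod_capacity - running_pods, 0)


# Figures from the c5a.2xlarge example: 2 ENIs, 15 addresses each (inferred).
print(reserved_ips(2, 15))   # 30
print(idle_ips(2, 15, 5))    # 23
```

With only 5 pods on the node, most of the reserved addresses sit unused while still being unavailable to the rest of the subnet.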

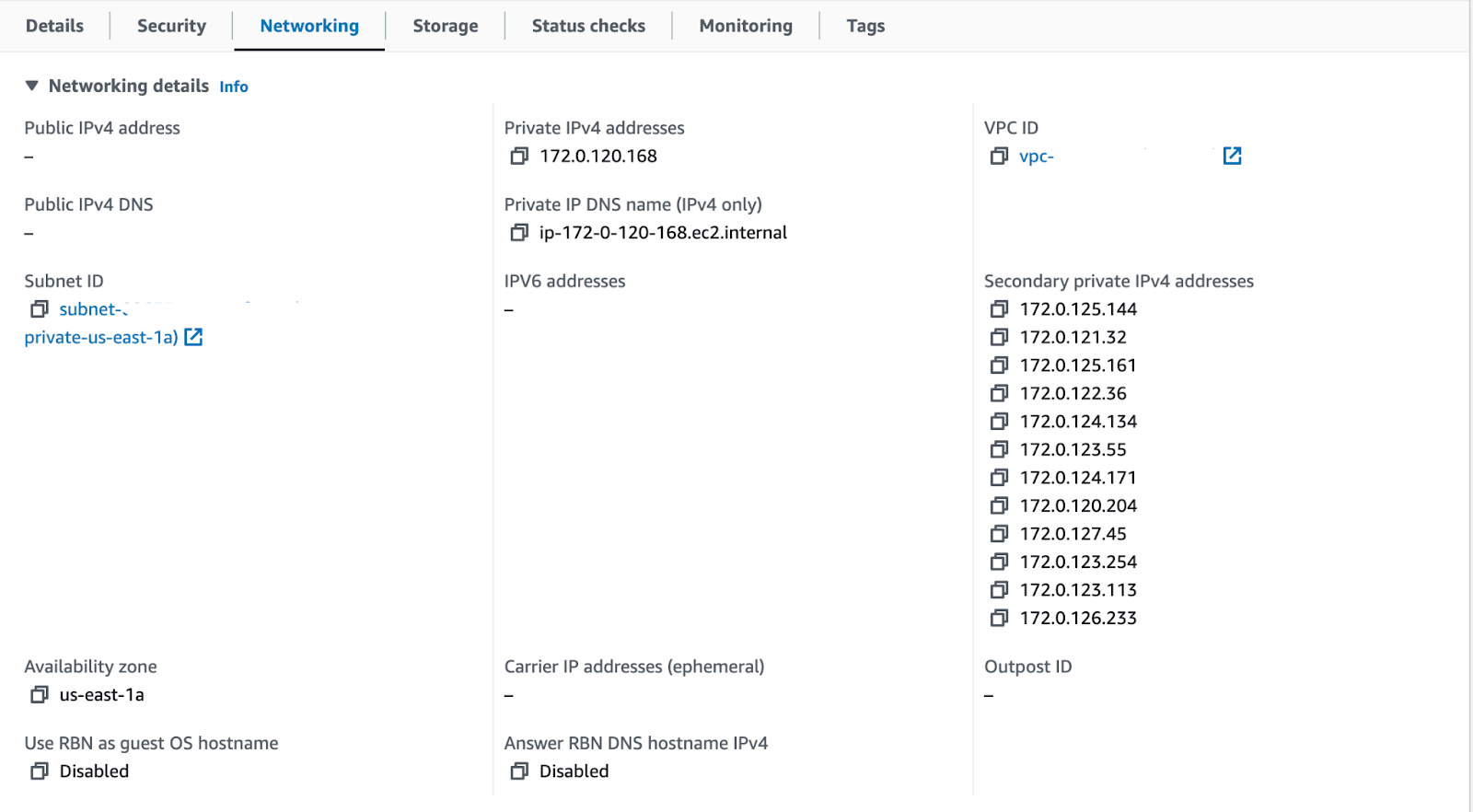

To check the available IP addresses in the subnet:

Open the VPC console -> choose Subnets -> select the subnets of the cluster

In the above snapshot, it is clear that this subnet has 1454 IP addresses available. So what if we run out of IP addresses? Let's see how such a scenario can be resolved.
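When planning subnets, it helps to know how many addresses a CIDR block can offer in the first place. The sketch below uses Python's standard `ipaddress` module and relies on the fact that AWS reserves five addresses (the first four and the last) in every subnet. The CIDR blocks are hypothetical examples, not the subnets used in this post, and the helper name is made up for illustration.

```python
import ipaddress

# AWS reserves the first four addresses and the last address of every subnet.
AWS_RESERVED_PER_SUBNET = 5


def usable_ips(cidr: str) -> int:
    """Upper bound on the IPv4 addresses a subnet can hand out."""
    network = ipaddress.ip_network(cidr)
    return max(network.num_addresses - AWS_RESERVED_PER_SUBNET, 0)


# Hypothetical CIDR blocks, for illustration only.
print(usable_ips("10.0.0.0/24"))  # 251
print(usable_ips("10.0.0.0/21"))  # 2043
```

The available count shown in the console will be lower than this upper bound, because nodes, pods, load balancers, and other resources in the subnet also hold addresses.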

Avoiding IP Shortages

There are multiple ways to avoid IP shortages in your EKS clusters:

- Add custom ENI configurations to the cluster that use different subnets. See the detailed documentation on adding custom ENI configurations.

- Update the existing CNI plugin so that it assigns only a minimum number of IPs when a new worker node boots.

- Use prefix mode for Linux.

In this blog post, we will update the existing CNI plugin, since this approach does not require creating any extra objects. We only have to update the default CNI plugin that AWS EKS provides.

Updating existing CNI Plugin

To update the existing CNI plugin, we will add or configure the following 3 environment variables in the aws-node daemonset. For detailed information, see the official documentation on these environment variables.

1. WARM_IP_TARGET

The number of Warm IP addresses to be maintained. A Warm IP is available on an actively attached ENI but has not been assigned to a Pod. In other words, the number of Warm IPs available is the number of IPs that may be assigned to a Pod without requiring an additional ENI.

For Example: Consider an instance with 1 ENI, each ENI supporting 20 IP addresses. WARM_IP_TARGET is set to 5. WARM_ENI_TARGET is set to 0. Only 1 ENI will be attached until a 16th IP address is needed. Then, the CNI will attach a second ENI, consuming 20 possible addresses from the subnet CIDR.
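The example can be reproduced with a small calculation. This is a simplified model under stated assumptions, not the plugin's implementation: the function name is hypothetical, every ENI is treated as supplying its full address count (as in the example), and the instance's ENI limit is ignored.

```python
def enis_for_warm_ip_target(assigned_ips: int, warm_ip_target: int, ips_per_eni: int) -> int:
    """ENIs needed so that `warm_ip_target` unassigned IPs remain available."""
    needed = assigned_ips + warm_ip_target
    # Integer ceiling division; a node always keeps at least its primary ENI.
    return max(1, (needed + ips_per_eni - 1) // ips_per_eni)


# 20 IPs per ENI, WARM_IP_TARGET=5, as in the example.
print(enis_for_warm_ip_target(15, 5, 20))  # 1
print(enis_for_warm_ip_target(16, 5, 20))  # 2
```

The jump from one ENI to two happens exactly at the 16th assigned address, because 16 assigned plus 5 warm no longer fits in 20.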

2. MINIMUM_IP_TARGET

The minimum number of IP addresses to be allocated at any time. This is commonly used to front-load the assignment of multiple ENIs at instance launch.

For Example: Consider a newly launched instance. It has 1 ENI and each ENI supports 10 IP addresses. MINIMUM_IP_TARGET is set to 100. The CNI immediately attaches 9 more ENIs, for a total of 10 ENIs and 100 addresses. This happens regardless of any WARM_IP_TARGET or WARM_ENI_TARGET values.
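The number of extra ENIs follows from a ceiling division. The sketch below is a hypothetical helper, assuming every ENI contributes its full address count and ignoring the per-instance ENI limit, which would cap the result on a real node.

```python
def extra_enis_for_minimum(minimum_ip_target: int, ips_per_eni: int, attached_enis: int = 1) -> int:
    """Additional ENIs needed to reach MINIMUM_IP_TARGET at launch."""
    total_enis = (minimum_ip_target + ips_per_eni - 1) // ips_per_eni  # ceiling
    return max(total_enis - attached_enis, 0)


# MINIMUM_IP_TARGET=100 with 10 IPs per ENI and 1 ENI already attached.
print(extra_enis_for_minimum(100, 10))  # 9
```

A small MINIMUM_IP_TARGET, such as the value used later in this post, fits on the primary ENI and needs no extra interfaces in this model.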

3. WARM_ENI_TARGET

The number of Warm ENIs to be maintained. An ENI is “warm” when it is attached as a secondary ENI to a node, but it is not in use by any Pod. More specifically, no IP addresses of the ENI have been associated with a Pod.

For Example: Consider an instance with 2 ENIs, each ENI supporting 5 IP addresses. WARM_ENI_TARGET is set to 1. If exactly 5 IP addresses are associated with the instance, the CNI maintains 2 ENIs attached to the instance. The first ENI is in use, and all 5 possible IP addresses of this ENI are used. The second ENI is “warm” with all 5 IP addresses in the pool. If another Pod is launched on the instance, a 6th IP address will be needed. The CNI will assign this 6th Pod an IP address from the 5 IPs in the second ENI's pool. The second ENI is now in use and no longer in a “warm” status. The CNI will allocate a 3rd ENI to maintain at least 1 warm ENI.
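The same walk-through, expressed as a rough model: ENIs that carry at least one Pod IP are "in use", and the CNI keeps WARM_ENI_TARGET untouched ENIs on top of them. The function name is hypothetical, and the sketch assumes IPs fill one ENI before the next and ignores the instance's ENI limit.

```python
def enis_with_warm_eni_target(assigned_ips: int, ips_per_eni: int, warm_eni_target: int) -> int:
    """ENIs attached when `warm_eni_target` fully unused ENIs are kept in reserve."""
    enis_in_use = (assigned_ips + ips_per_eni - 1) // ips_per_eni  # ceiling
    # A node always keeps at least its primary ENI.
    return max(1, enis_in_use + warm_eni_target)


# 5 IPs per ENI, WARM_ENI_TARGET=1, as in the example.
print(enis_with_warm_eni_target(5, 5, 1))  # 2
print(enis_with_warm_eni_target(6, 5, 1))  # 3
```

Every step up in ENI count reserves a whole ENI's worth of addresses from the subnet, which is why WARM_ENI_TARGET is the costliest of the three settings in IP terms.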

You have the choice to implement this either by manually adding the required environment variables to the manifest or by using the patch command to configure the environment variables.

Note: Opting for the patch option is advisable, as it ensures that other elements within the manifest remain unaffected and unaltered.

Option 1: Adding the environment variables in the manifest

Run the following command

kubectl edit daemonset aws-node -n kube-system

Then add the environment variables to the env section of the container, i.e., spec.template.spec.containers[].env:

env:
  - name: WARM_IP_TARGET
    value: "2"
  - name: MINIMUM_IP_TARGET
    value: "10"

Option 2: Adding env variables using patch

kubectl set env daemonset aws-node -n kube-system WARM_IP_TARGET=2 MINIMUM_IP_TARGET=10

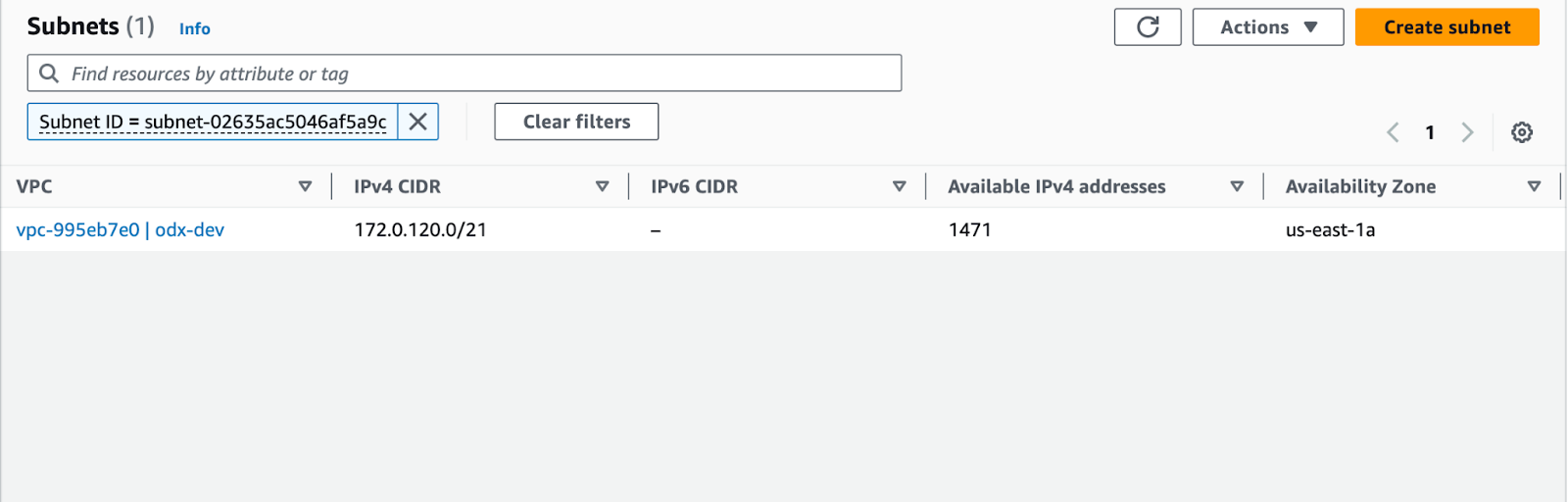
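If you would rather keep the change as a reviewable patch, the same environment variables can be expressed as a strategic merge patch body. The Python sketch below only builds the JSON; it does not talk to the cluster. The helper name is hypothetical, the container name `aws-node` is an assumption about the default daemonset manifest, and the values are the ones from Option 1.

```python
import json


def build_env_patch(env: dict, container: str = "aws-node") -> str:
    """Build a strategic-merge patch body that sets env vars on one container.

    Assumption: the daemonset's main container is named `aws-node`.
    """
    patch = {
        "spec": {
            "template": {
                "spec": {
                    "containers": [
                        {
                            "name": container,
                            # Kubernetes expects env values as strings.
                            "env": [{"name": k, "value": str(v)} for k, v in env.items()],
                        }
                    ]
                }
            }
        }
    }
    return json.dumps(patch)


patch_body = build_env_patch({"WARM_IP_TARGET": 2, "MINIMUM_IP_TARGET": 10})
print(patch_body)
```

The printed JSON could then be passed to `kubectl patch daemonset aws-node -n kube-system --type strategic -p '<json>'`. Verify the container name against your own manifest before applying it.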

Once this change has rolled out to every aws-node pod, a new node joining the cluster will start with an allocation of only 12 IPs.

It therefore takes only 13 IPs from the subnet: one for the node's private IP and 12 reserved for pods.
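To put the saving in perspective, here is a rough comparison using the numbers from this post: 30 IPs per node by default, 13 after the change, and the 1454 available addresses seen earlier. The helper names are hypothetical, and the estimate ignores other consumers of subnet IPs as well as the extra IPs a node picks up as more pods are scheduled on it.

```python
def ips_per_node(pod_ips: int, node_ips: int = 1) -> int:
    """IPs a freshly joined node takes from the subnet."""
    return pod_ips + node_ips


def nodes_that_fit(available_ips: int, per_node: int) -> int:
    """How many freshly joined nodes the available IPs can accommodate."""
    return available_ips // per_node


default_per_node = 30               # default allocation from the earlier example
tuned_per_node = ips_per_node(12)   # 12 pod IPs + 1 node IP = 13

print(default_per_node - tuned_per_node)       # 17
print(nodes_that_fit(1454, default_per_node))  # 48
print(nodes_that_fit(1454, tuned_per_node))    # 111
```

Under these assumptions, the same subnet has room for more than twice as many new nodes before its addresses run out.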

Conclusion

By reserving a specific number of warm IP addresses and ENIs, you ensure that each worker node has a minimum number of IPs available for pod assignment, reducing the risk of IP shortage. While the solution mentioned above offers advantages, it's important to be aware of a few tradeoffs:

- If multiple pods are scheduled on a single node, exceeding the warm IP count, there might be a slight delay in the startup time for these pods.

- If the pods scheduled on a single node exceed the warm IP count and the subnet has no available IP addresses left, those pods will fail to start. In such scenarios, the remaining option is to add extra ENI configurations that use different subnets.

IP shortage can pose challenges to the scalability and smooth functioning of AWS EKS clusters. By configuring the aws-node daemonset using the WARM_IP_TARGET, MINIMUM_IP_TARGET, and WARM_ENI_TARGET environment variables, you can effectively mitigate IP shortage concerns. This approach ensures that each worker node has a minimum number of IP addresses reserved for pod assignment while dynamically allocating additional IPs.

Feel free to connect with us on our Discord Community if you have any queries. We would be more than happy to help you.

FAQs

Why does AWS EKS run out of IP addresses?

EKS runs out of IPs when worker nodes consume subnet IPs via ENIs, and the subnet CIDR range becomes exhausted. Each node reserves multiple IPs regardless of actual pod usage, which may lead to wastage and shortages.

How do I check available IP addresses in an EKS cluster?

You can check available IPs by navigating to VPC Console → Subnets → Select Subnet associated with your cluster. The available IP count will be shown under the subnet details.

What happens when an EKS cluster runs out of IPs?

When a cluster runs out of IPs, new pods cannot be scheduled and remain stuck in the ContainerCreating state. Errors like “failed to assign an IP address to container” appear in pod events.

How can I avoid IP shortages in EKS?

You can avoid IP shortages by:

- Using prefix mode for ENIs

- Adding custom ENI configurations with larger subnets

- Updating the AWS CNI plugin with environment variables (WARM_IP_TARGET, MINIMUM_IP_TARGET, WARM_ENI_TARGET) to optimize IP allocation.

What is the role of WARM_IP_TARGET in EKS CNI?

WARM_IP_TARGET defines the number of unused (warm) IPs that the CNI plugin should keep available on a node. This ensures pods can start quickly without waiting for a new ENI to attach.