1. Django is Kubernetes-ready — but deployment complexity can slow you down without the right tools.

2. Devtron automates the process, handling Dockerfile creation, manifests, and CI/CD pipelines for faster releases.

3. Manual deployment offers full control but requires deep Kubernetes knowledge and more time.

4. Best practices matter — optimize resources, configure probes, and ensure secure, efficient deployments.

5. Automation reduces risk — over 70% of cloud-native failures stem from misconfiguration, which Devtron helps prevent.

Introduction

Django is built for speed, security, and scalability, making it a go-to framework for modern web applications. With its built-in authentication, ORM, and security features, it handles the heavy lifting so developers can focus on building great products. Deploying Django on Kubernetes, however, is not always seamless: misconfigured containers, inefficient scaling, and other deployment roadblocks can slow things down.

Devtron changes that. By automating and simplifying Kubernetes deployments, it removes complexity and helps you get your Django applications up and running with ease.

In this guide, we’ll walk you through two deployment methods, automated with Devtron and manual with kubectl, along with best practices to ensure a smooth, efficient deployment.

Two Ways to Deploy Django Applications on Kubernetes:

- Using Devtron for Automated Deployment

- Using Kubernetes Manually

Did you know? Over 70% of cloud-native failures stem from misconfigured deployments. From bloated images to inefficient resource allocation, small mistakes can cause big inefficiencies. But don’t worry—this guide will help you avoid them!

Deploying Django Applications on Kubernetes

Deploying a Django application to Kubernetes involves several steps. Let’s first review the overall process and then discuss the various steps in depth.

Steps for Deployment

- Write and build the Django Application

- Containerize the Django Application

- Push the container image to a container registry such as DockerHub

- Create the required YAML manifests for the Kubernetes resources

- Apply the YAML manifests to the Kubernetes cluster

Prerequisites

Before proceeding with the deployment process, make sure that you have the following prerequisites:

- A Django application

- Docker

- kubectl

- A Kubernetes cluster (for example, a local kind cluster)

Method 1: Deploying Django Applications Using Devtron

Devtron is a Kubernetes management platform that simplifies the entire DevOps lifecycle. It automates the creation of Dockerfiles and Kubernetes manifests, builds the application, and manages deployments through an intuitive UI.

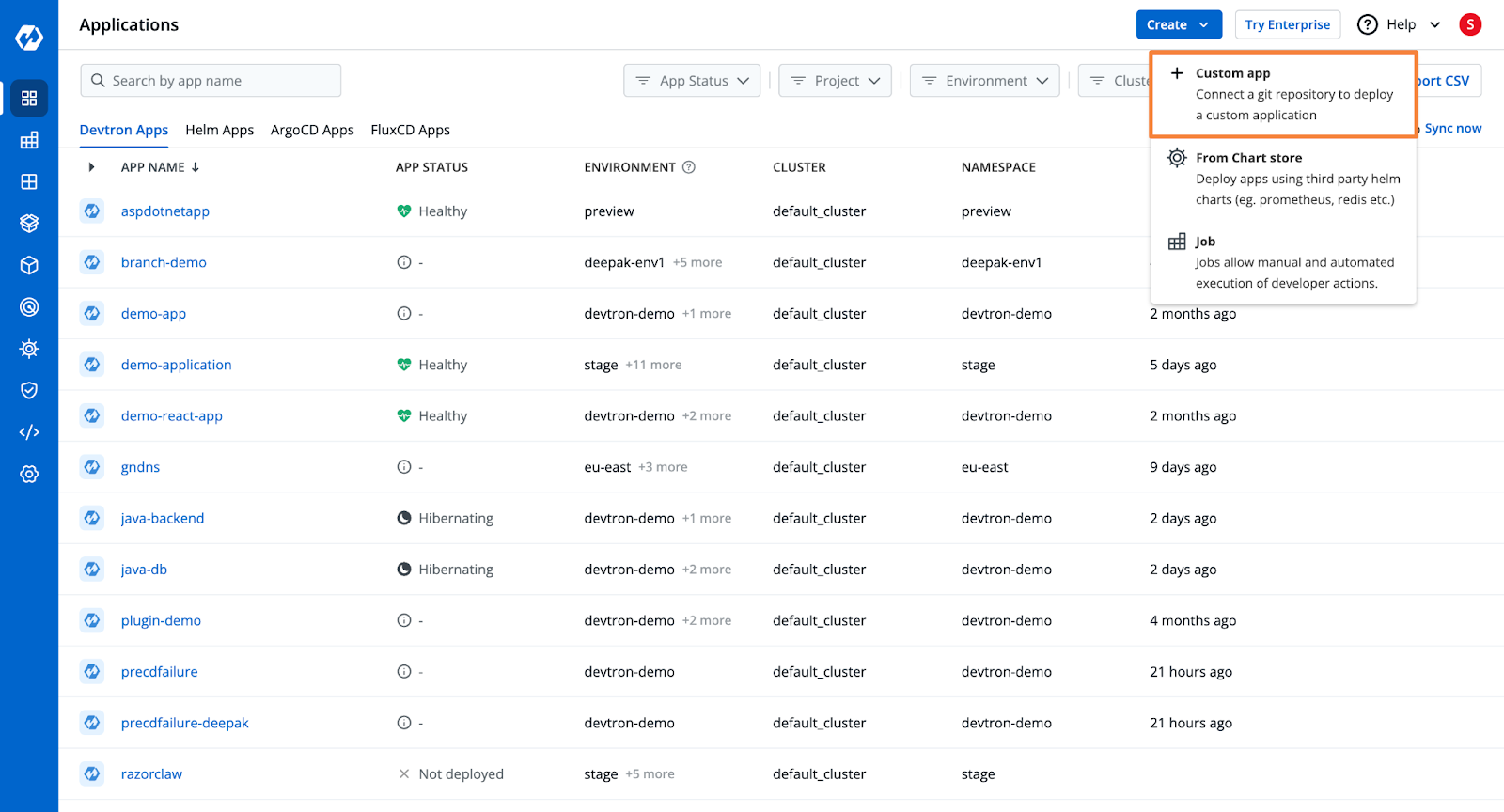

Step 1: Create a Devtron Application and Add Git Repository

- From Devtron’s home page, create a new Devtron application.

- Add the Git Repository containing the Django application code.

Check out the documentation to learn more about the application creation process.

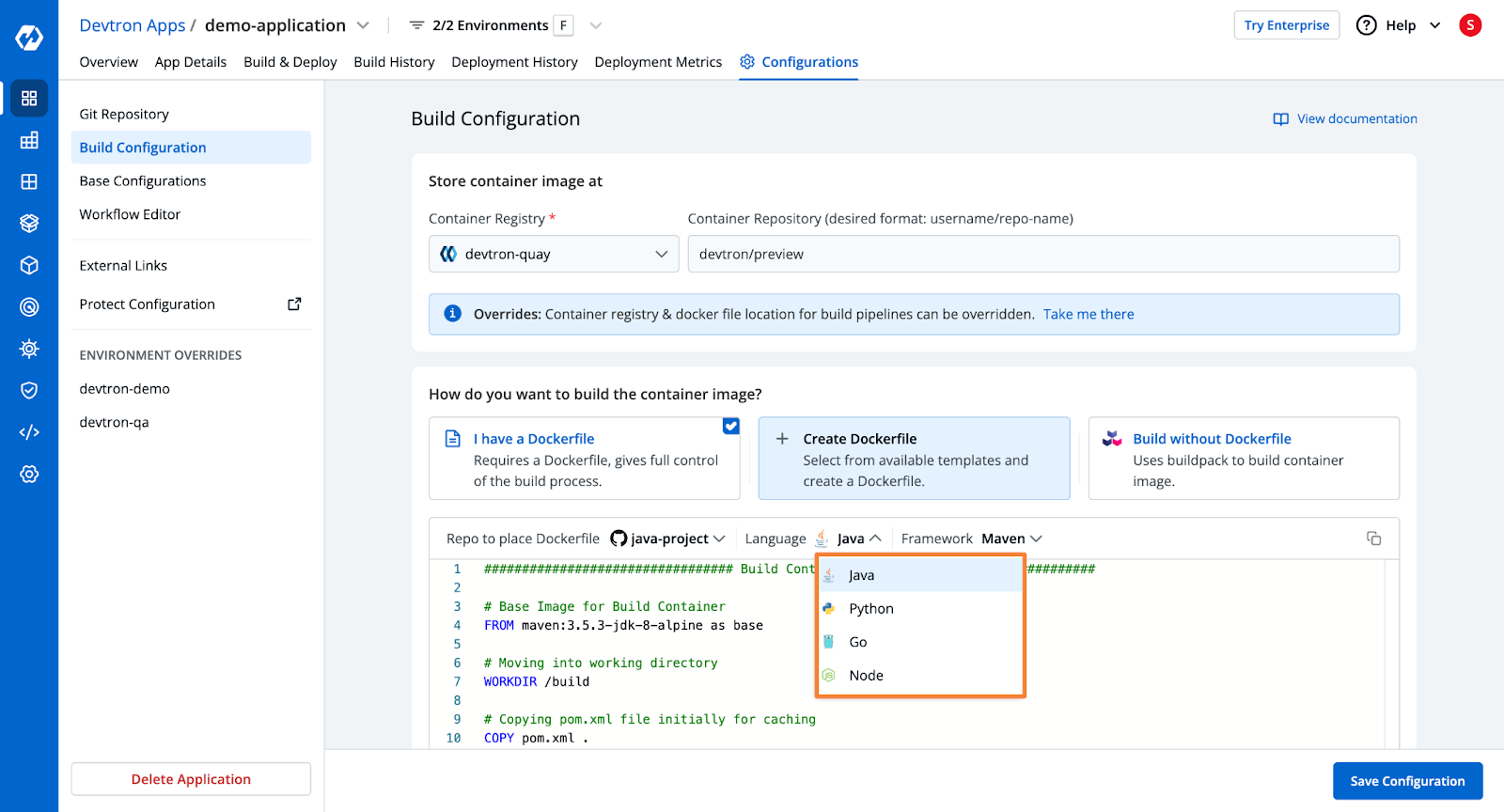

Step 2: Configure the Build

- Devtron will pull code from the repository and build the Docker container.

- You need to configure an OCI Container Registry.

- Choose from three build options:

  - Use an existing Dockerfile

  - Create a Dockerfile (using Devtron's template for Django applications)

  - Use Buildpacks

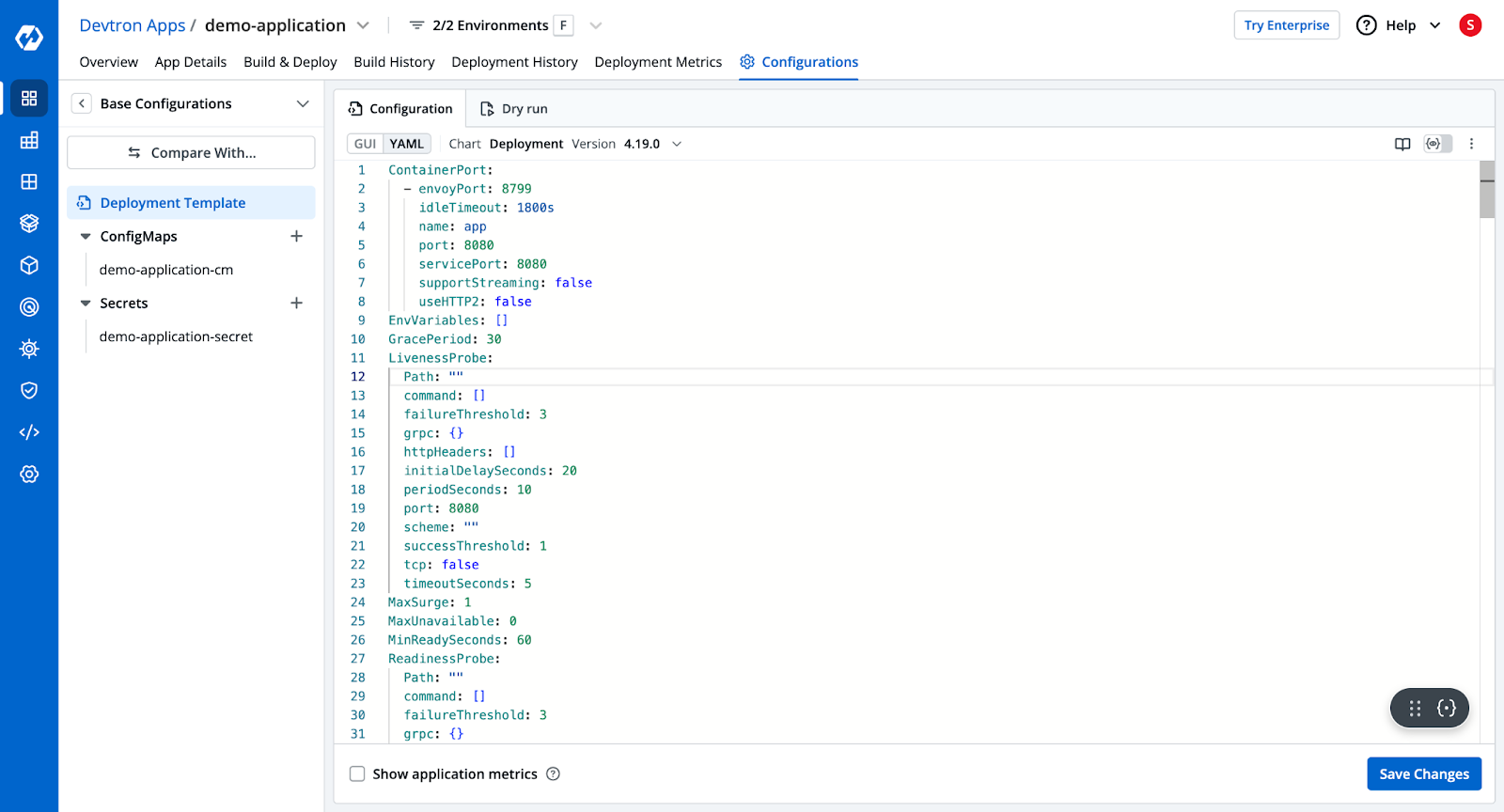

Step 3: Deployment Configurations

- Devtron provides a pre-configured YAML template for Kubernetes deployment.

- Configure ingress, autoscalers, and other deployment settings.

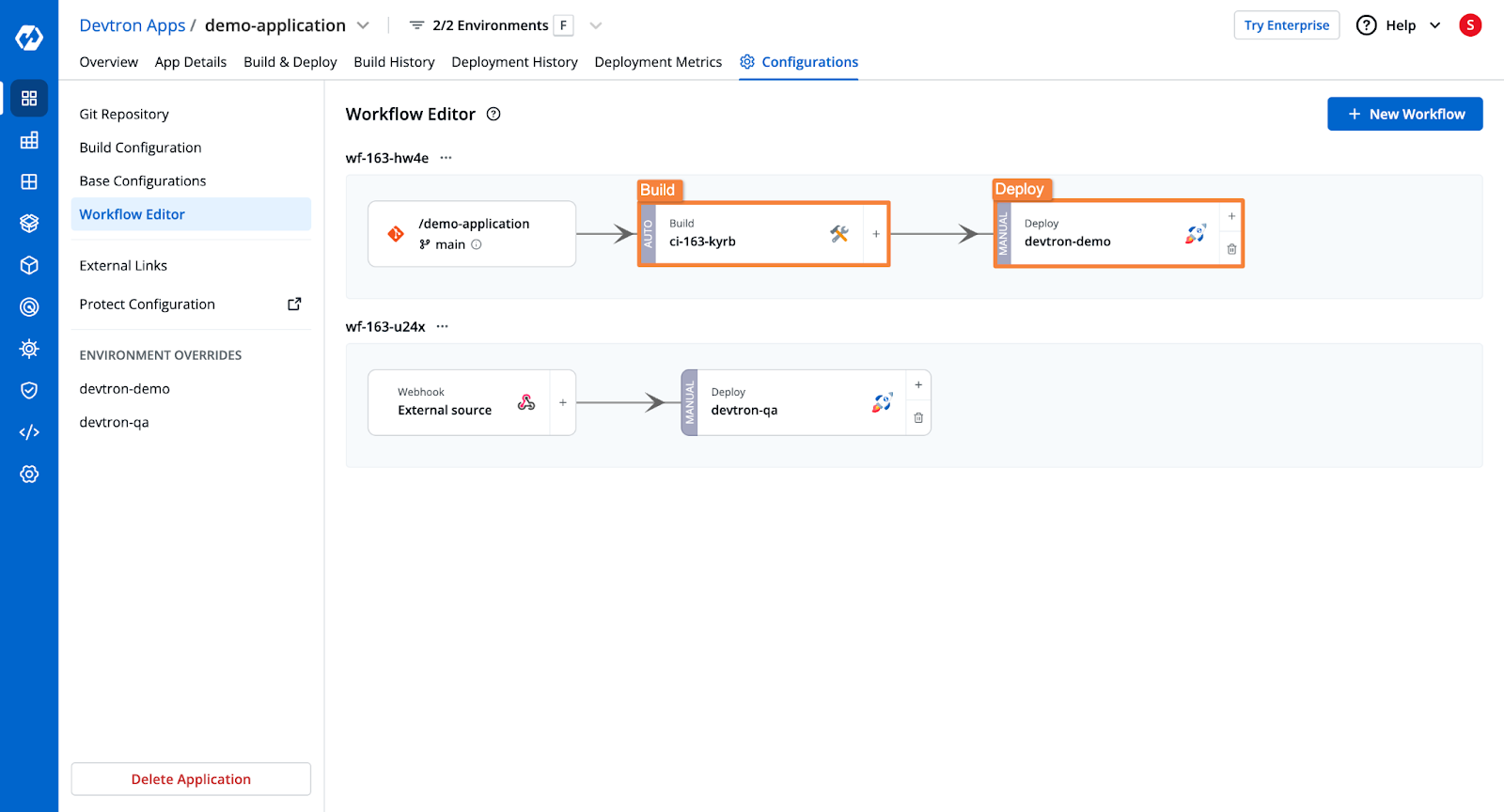

Step 4: Create the CI/CD Pipelines

- The CI pipeline will build the application and push the image to a registry.

- The CD pipeline will trigger deployments in the Kubernetes cluster.

- Configure Pre and Post Stages (e.g., security scanning, unit testing).

Please check the documentation to learn more about the pipeline configurations.

Step 5: Trigger the Build and Deploy Pipelines

- Select the Git branch and trigger the build stage.

- Once the build is complete, trigger the deployment stage.

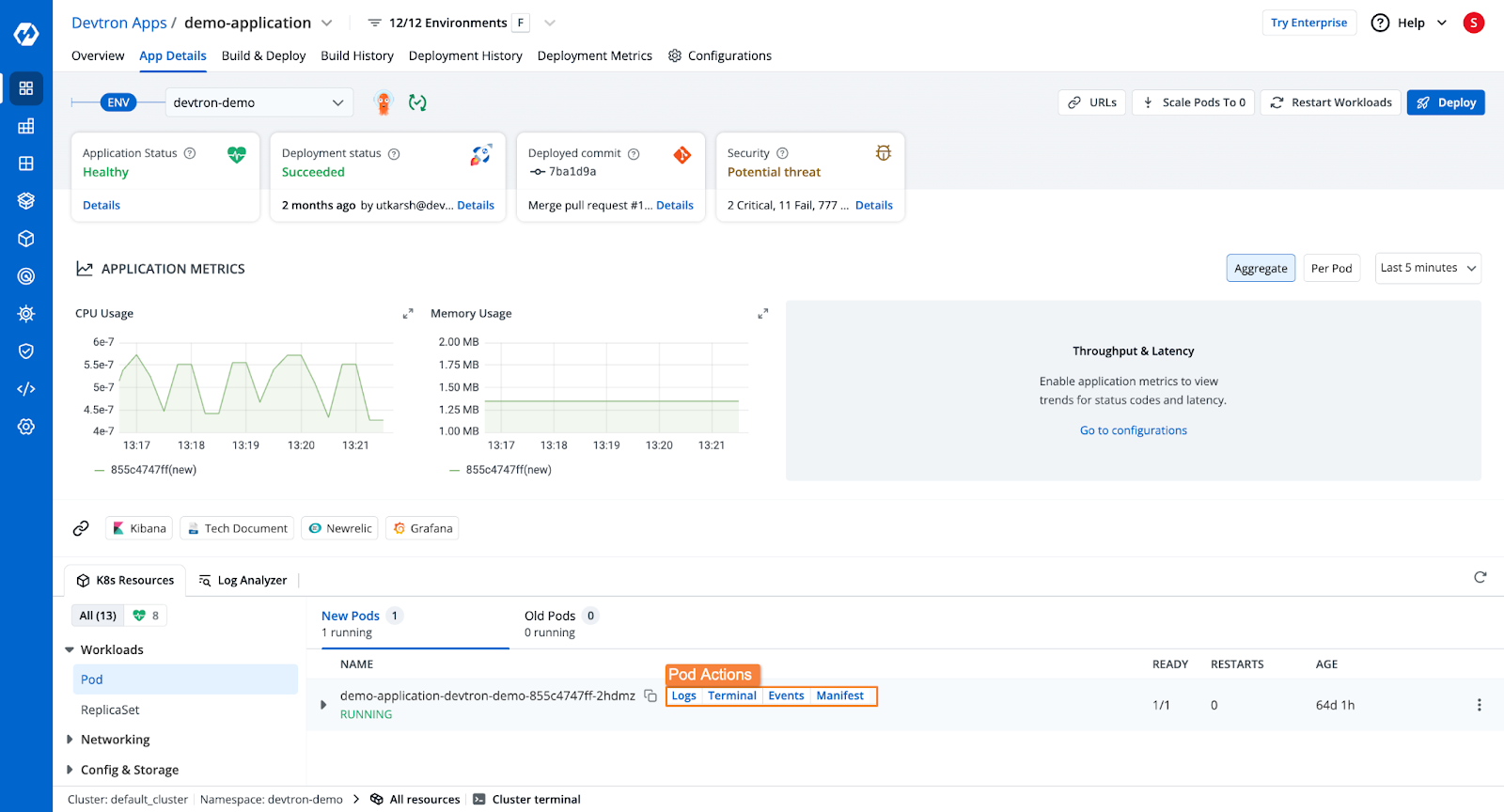

- Devtron will deploy the application and show:

  - Deployment status

  - Application health

  - Kubernetes resource details

  - Security vulnerabilities

  - Rollback options in case of errors

Method 2: Deploying Django Applications Manually on Kubernetes

Step 1: Create the Dockerfile

A Dockerfile is a set of instructions for building a container image. Below is a multi-stage Dockerfile that containerizes a Django application. Replace myproject with the name of your project package, and make sure gunicorn is listed in requirements.txt:

# Stage 1: Build stage
FROM python:3.11-slim AS builder

WORKDIR /app

# Install dependencies (requirements.txt must include gunicorn)
COPY requirements.txt .
RUN pip install --no-cache-dir --upgrade pip && \
    pip install --no-cache-dir -r requirements.txt

# Stage 2: Runtime stage
FROM python:3.11-slim

WORKDIR /app

# Copy installed packages and console scripts from the build stage
COPY --from=builder /usr/local/lib/python3.11/site-packages /usr/local/lib/python3.11/site-packages
COPY --from=builder /usr/local/bin /usr/local/bin

# Copy application files
COPY . .

# Set environment variables
ENV PYTHONUNBUFFERED=1

# Collect static files (requires STATIC_ROOT to be set in settings.py)
RUN python manage.py collectstatic --noinput

# Expose the application port
EXPOSE 8000

# Start the application using Gunicorn
CMD ["gunicorn", "--bind", "0.0.0.0:8000", "myproject.wsgi:application"]
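The collectstatic step and the Gunicorn entrypoint both depend on a few settings being container-friendly. Here is a minimal sketch of what that part of settings.py might look like. The environment variable names DJANGO_DEBUG and DJANGO_ALLOWED_HOSTS and the helpers env_list and env_bool are illustrative assumptions, not Django conventions; STATIC_ROOT, STATIC_URL, DEBUG, and ALLOWED_HOSTS are standard Django settings.

```python
import os
from pathlib import Path


def env_list(name, default=""):
    """Read a comma-separated environment variable into a list of strings."""
    raw = os.environ.get(name, default)
    return [item.strip() for item in raw.split(",") if item.strip()]


def env_bool(name, default=False):
    """Interpret common truthy strings ("1", "true", "yes") as True."""
    raw = os.environ.get(name)
    if raw is None:
        return default
    return raw.strip().lower() in {"1", "true", "yes"}


# /app matches WORKDIR in the Dockerfile; collectstatic writes its output here.
STATIC_ROOT = Path("/app") / "staticfiles"
STATIC_URL = "static/"

# Hypothetical variable names: use whatever your manifests inject.
DEBUG = env_bool("DJANGO_DEBUG", default=False)
ALLOWED_HOSTS = env_list("DJANGO_ALLOWED_HOSTS", default="localhost")
```

Because collectstatic runs at image build time, settings.py has to import cleanly without any runtime-only secrets being present, which is one reason to give each variable a safe default.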

Step 2: Build and Push the Docker Image

- Run the following command to build the Docker image:

docker build -t devtron/django-app:v1 .

- Push the image to DockerHub (image repository names must be lowercase, and the tag must match the one you built):

docker push devtron/django-app:v1

Step 3: Creating the Kubernetes Deployment and Service Manifests

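- Create a deployment.yaml file. Kubernetes resource and container names must be lowercase, and containerPort should be the port Gunicorn binds to (8000 in the Dockerfile above):

apiVersion: apps/v1
kind: Deployment
metadata:
  name: django-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: django
  template:
    metadata:
      labels:
        app: django
    spec:
      containers:
        - name: django-container
          image: devtron/django-app:v1
          ports:
            - containerPort: 8000

- Create a service.yaml file. The selector must match the pod labels from the Deployment, and targetPort must match the container's port:

apiVersion: v1
kind: Service
metadata:
  name: django-service
spec:
  selector:
    app: django
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8000
  type: NodePort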
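Since small manifest mistakes are easy to miss, a quick pre-flight check before applying can help. The sketch below looks for three common problems: names that are not lowercase DNS labels, a Service selector that matches no pod label, and a numeric targetPort that is not a declared containerPort. It works on manifests already parsed into Python dictionaries (for instance with a YAML library of your choice, which is an assumption here). The preflight and is_dns_label helpers are hypothetical, and the name rule is an approximation, since Kubernetes applies slightly different rules per resource type.

```python
import re

# RFC 1123 label: lowercase alphanumerics and hyphens, alphanumeric at both ends.
DNS_LABEL = re.compile(r"^[a-z0-9]([-a-z0-9]*[a-z0-9])?$")


def is_dns_label(name):
    """True if name is at most 63 characters and matches the label pattern."""
    return len(name) <= 63 and DNS_LABEL.match(name) is not None


def preflight(deployment, service):
    """Return a list of human-readable problems found in the two manifests."""
    problems = []
    template = deployment["spec"]["template"]
    containers = template["spec"]["containers"]

    # 1. Resource and container names should be lowercase DNS labels.
    names = [deployment["metadata"]["name"], service["metadata"]["name"]]
    names += [c["name"] for c in containers]
    for name in names:
        if not is_dns_label(name):
            problems.append(f"invalid name: {name}")

    # 2. Every Service selector pair must appear in the pod template labels.
    pod_labels = template["metadata"].get("labels", {})
    for key, value in service["spec"]["selector"].items():
        if pod_labels.get(key) != value:
            problems.append(f"selector {key}={value} matches no pod label")

    # 3. Each numeric targetPort should be a declared containerPort.
    declared = {p["containerPort"] for c in containers for p in c.get("ports", [])}
    for port in service["spec"]["ports"]:
        target = port.get("targetPort", port["port"])
        if isinstance(target, int) and target not in declared:
            problems.append(f"targetPort {target} is not a declared containerPort")

    return problems


# Example: valid names and labels, but the Service points at the wrong port.
deployment = {
    "metadata": {"name": "django-deployment"},
    "spec": {"template": {
        "metadata": {"labels": {"app": "django"}},
        "spec": {"containers": [
            {"name": "django-container", "ports": [{"containerPort": 8000}]},
        ]},
    }},
}
service = {
    "metadata": {"name": "django-service"},
    "spec": {"selector": {"app": "django"},
             "ports": [{"port": 80, "targetPort": 8080}]},
}

for problem in preflight(deployment, service):
    print(problem)
```

With the example data, the only reported problem is the port mismatch. Named target ports (strings) are skipped by this sketch, and containerPort itself is informational in Kubernetes, so treat the result as a hint rather than a guarantee.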

Step 4: Deploy to Kubernetes

Run the following command to apply the manifests:

kubectl apply -f deployment.yaml -f service.yaml

Your Django application is now deployed to Kubernetes!

Common Challenges and Solutions

1. Database Connection Management

- Use Managed Databases (Amazon RDS, Google Cloud SQL, Azure Database) for stability.

- If running in-cluster, use Persistent Volumes (PVs) to prevent data loss.
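Whichever database option you pick, the Django side usually comes down to reading connection details from the environment (populated from a Kubernetes Secret or ConfigMap) and reusing connections sensibly. A minimal sketch, assuming PostgreSQL: the DB_* variable names and the database_settings helper are hypothetical, while the dictionary keys are Django's standard DATABASES options.

```python
import os


def database_settings(env=os.environ):
    """Build Django's DATABASES setting from environment variables."""
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "NAME": env.get("DB_NAME", "app"),
            "USER": env.get("DB_USER", "app"),
            "PASSWORD": env.get("DB_PASSWORD", ""),
            "HOST": env.get("DB_HOST", "localhost"),
            "PORT": env.get("DB_PORT", "5432"),
            # Reuse a connection for up to 60 seconds instead of
            # reconnecting on every request.
            "CONN_MAX_AGE": int(env.get("DB_CONN_MAX_AGE", "60")),
            # Django 4.1+: verify a reused connection before using it.
            "CONN_HEALTH_CHECKS": True,
        }
    }


# In settings.py:
DATABASES = database_settings()
```

Keep in mind that persistent connections multiply with replicas and Gunicorn workers, so the database's connection limit should be sized against pods times workers.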

2. Optimizing Gunicorn Worker Management

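- Set an optimal worker count for efficient request handling. 2 to 4 workers per CPU core is a common rule of thumb, and Gunicorn's documentation suggests (2 x cores) + 1 as a starting point. Size it to the CPU available to the container rather than to the node.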

- Enable Horizontal Pod Autoscaler (HPA) for dynamic scaling.
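Gunicorn reads its configuration from a Python file, so the worker count can be derived from the CPU the pod is given rather than hard-coded. A minimal sketch of a gunicorn.conf.py, using the (2 x cores) + 1 starting point from Gunicorn's documentation. The CPU_LIMIT variable and the worker_count helper are assumptions for illustration; the value is expected to be a plain number of cores such as "1" or "0.5", not a Kubernetes quantity like "500m".

```python
import math
import os


def worker_count(cpus):
    """Starting point from Gunicorn's docs: (2 x cores) + 1, with at least one core."""
    cores = max(1, math.ceil(float(cpus)))
    return 2 * cores + 1


# CPU_LIMIT is a hypothetical variable you would set in the Deployment
# to mirror the container's CPU limit.
cpus = os.environ.get("CPU_LIMIT", "1")

# Standard Gunicorn settings picked up from this file.
bind = "0.0.0.0:8000"
workers = worker_count(cpus)
```

With a file like this in the working directory, the container command could become gunicorn -c gunicorn.conf.py myproject.wsgi:application. When an HPA scales pods on CPU, it is often simpler to keep the per-pod worker count modest and let replicas absorb the load.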

3. Health Checks & Readiness Probes

- Use Liveness Probes to detect and restart failing applications.

- Use Readiness Probes to ensure traffic is routed only to ready pods.
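Probes need an endpoint to hit. One way to provide it, sketched below, is a small WSGI wrapper around the Django application that answers the probe paths itself. The paths /healthz and /readyz, the class name HealthCheckMiddleware, and the readiness_checks parameter are all illustrative assumptions. Answering before Django handles the request also sidesteps ALLOWED_HOSTS rejections, which can occur when the kubelet probes a pod by IP address.

```python
class HealthCheckMiddleware:
    """WSGI wrapper that answers probe paths before Django sees the request."""

    def __init__(self, app, readiness_checks=()):
        self.app = app
        # Callables that return True when a dependency (e.g. the database) is usable.
        self.readiness_checks = tuple(readiness_checks)

    def __call__(self, environ, start_response):
        path = environ.get("PATH_INFO", "")
        if path == "/healthz":
            # Liveness: the process is up and able to serve a request.
            return self._respond(start_response, True)
        if path == "/readyz":
            # Readiness: every dependency check must pass.
            return self._respond(start_response, self._ready())
        return self.app(environ, start_response)

    def _ready(self):
        for check in self.readiness_checks:
            try:
                if not check():
                    return False
            except Exception:
                # A check that raises counts as "not ready".
                return False
        return True

    @staticmethod
    def _respond(start_response, ok):
        status = "200 OK" if ok else "503 Service Unavailable"
        body = b"ok" if ok else b"unavailable"
        headers = [
            ("Content-Type", "text/plain"),
            ("Content-Length", str(len(body))),
        ]
        start_response(status, headers)
        return [body]


# In myproject/wsgi.py (database_is_reachable is a hypothetical check):
# application = HealthCheckMiddleware(
#     get_wsgi_application(), readiness_checks=[database_is_reachable]
# )
```

The Deployment's livenessProbe and readinessProbe would then use httpGet against these paths on port 8000. Keeping the liveness path free of dependency checks avoids restarting healthy pods when only the database is down.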

Conclusion

In this blog, we explored two approaches for deploying Django applications on Kubernetes:

- Automated Devtron Deployment with built-in CI/CD pipelines and advanced configurations.

- Manual Kubernetes Deployment using Docker and YAML manifests.

Using Devtron simplifies Kubernetes deployments, reducing manual efforts and improving efficiency. Start deploying applications today using Devtron’s platform!

Our upcoming Agentic AI will make Kubernetes deployments even smarter, automating syncs, enforcing policies, and optimizing multi-cluster management so your apps stay healthy and resilient with minimal manual work. 🚀

FAQ

What are the prerequisites for deploying Django applications to Kubernetes?

To deploy Django applications to Kubernetes effectively, ensure you have a containerized Django app (using Docker), a Kubernetes cluster set up (like Minikube or cloud providers), and manifests for Kubernetes resources like Deployments, Services, ConfigMaps, and Secrets. Familiarity with Helm and Ingress controllers is also beneficial for managing configurations and traffic routing.

How can I troubleshoot issues when deploying Django applications to Kubernetes?

To troubleshoot issues during Django deployment to Kubernetes, check pod logs with kubectl logs, inspect resource states using kubectl describe, and verify YAML configurations. Use tools like kubectl exec for debugging within containers and monitor the cluster with observability tools like Prometheus and Grafana.

What tools are recommended for deploying Django applications to Kubernetes?

Recommended tools for deploying Django applications to Kubernetes include Docker for containerization, kubectl for cluster management, and Helm for streamlined application deployment. Additionally, use Ingress controllers (e.g., NGINX) for traffic routing and monitoring tools like Prometheus and Grafana to ensure smooth operations.

What are the best practices for deploying Django applications to Kubernetes?

Best practices for deploying Django applications to Kubernetes include using ConfigMaps and Secrets for managing environment-specific settings and sensitive data, setting up Horizontal Pod Autoscalers (HPA) for scalability, and enabling health probes (readiness and liveness). Always monitor application performance and follow security best practices, such as implementing RBAC and using network policies.