Go applications thrive in cloud-native environments, but deploying them on Kubernetes isn’t always straightforward. Container images can often be bloated with unnecessary files, manifests may be misconfigured, and small mistakes can lead to inefficiencies and downtime. Ensuring a streamlined deployment process is crucial to making the most of Kubernetes' scalability and resilience.

Devtron is a platform for simplifying and automating the deployment process to eliminate common pitfalls. However, for those who prefer a hands-on approach, deploying manually is always an option.

In this guide, we’ll walk you through both methods step by step while also covering best practices to optimize your deployment. Let’s dive in and make your Kubernetes deployment seamless!

- Devtron for Automated Deployment

- Using Kubernetes Manually

Did you know? Misconfigured deployments are a frequent cause of failures in cloud-native systems. From bloated images to poorly sized resource allocations, small mistakes can cause big inefficiencies. But don’t worry: this guide will help you avoid them!

Deploying Go Applications on Kubernetes

Deploying a Go application to Kubernetes involves several steps. Let’s first review the overall process and then discuss the various steps in depth.

Steps for Deployment

- Write and build the Go Application

- Containerize the Go Application

- Push the container image to a container registry such as Docker Hub

- Create the required YAML manifests for the Kubernetes resources

- Apply the YAML manifests to the Kubernetes cluster

Prerequisites

Before proceeding with the deployment process, make sure that you have the following prerequisites:

- A Go application (You can clone this repo)

- Docker

- kubectl

- A Kubernetes cluster, such as a local one created with kind
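If you do not have an application at hand, the sketch below is one minimal option: a small HTTP server built only on the Go standard library. It is not the application from the repository mentioned above. The names `greeting` and `helloHandler` are illustrative, and port 8080 is chosen to line up with the `containerPort` in the Deployment manifest used later in this guide.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
)

// greeting builds the response body. It is kept separate from the
// handler so it can be tested without starting a server.
func greeting(name string) string {
	if name == "" {
		name = "World"
	}
	return fmt.Sprintf("Hello, %s!", name)
}

// helloHandler answers requests such as GET /?name=Gopher with a
// plain-text greeting.
func helloHandler(w http.ResponseWriter, r *http.Request) {
	fmt.Fprintln(w, greeting(r.URL.Query().Get("name")))
}

func main() {
	http.HandleFunc("/", helloHandler)

	// 8080 is an assumption that matches the containerPort in the
	// Deployment manifest shown in Method 2.
	log.Println("listening on :8080")
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

To try it, save the file as `main.go` and run `go mod init` with a module path of your choice (for example, the hypothetical `example.com/goapp`). Because this sketch has no third-party dependencies, Go does not generate a `go.sum` file, so a Dockerfile line that copies both `go.mod` and `go.sum` would need to copy only `go.mod`.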

Method 1: Deploying Go Applications Using Devtron

Devtron is a Kubernetes management platform that simplifies the entire DevOps lifecycle. It automates the creation of Dockerfiles and Kubernetes manifests, builds the application, and manages deployment through an intuitive UI.

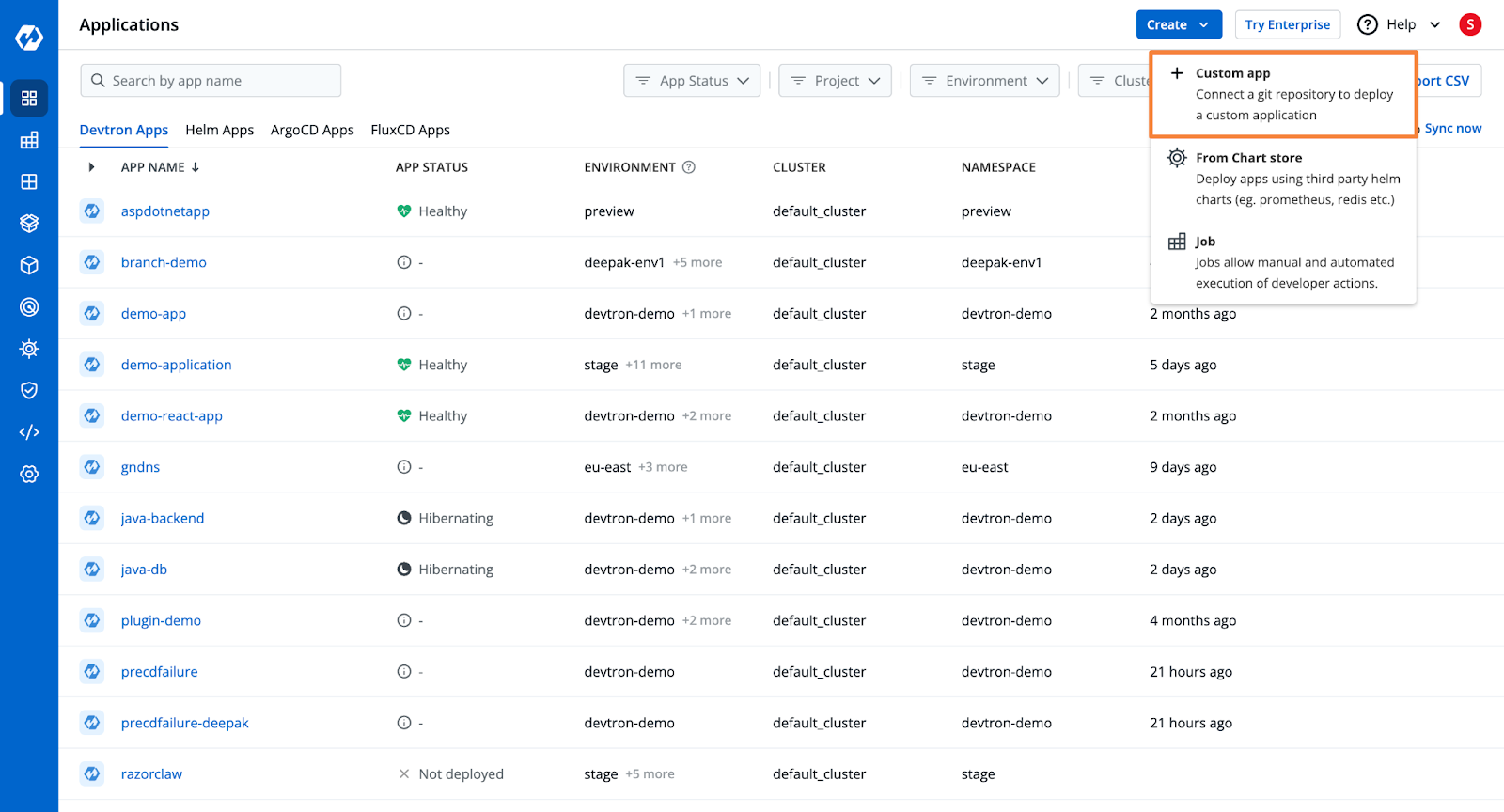

Step 1: Create a Devtron Application and Add Git Repository

- From Devtron’s home page, create a new Devtron application.

- Add the Git Repository containing the Go application code.

Check out the documentation to learn more about the application creation process.

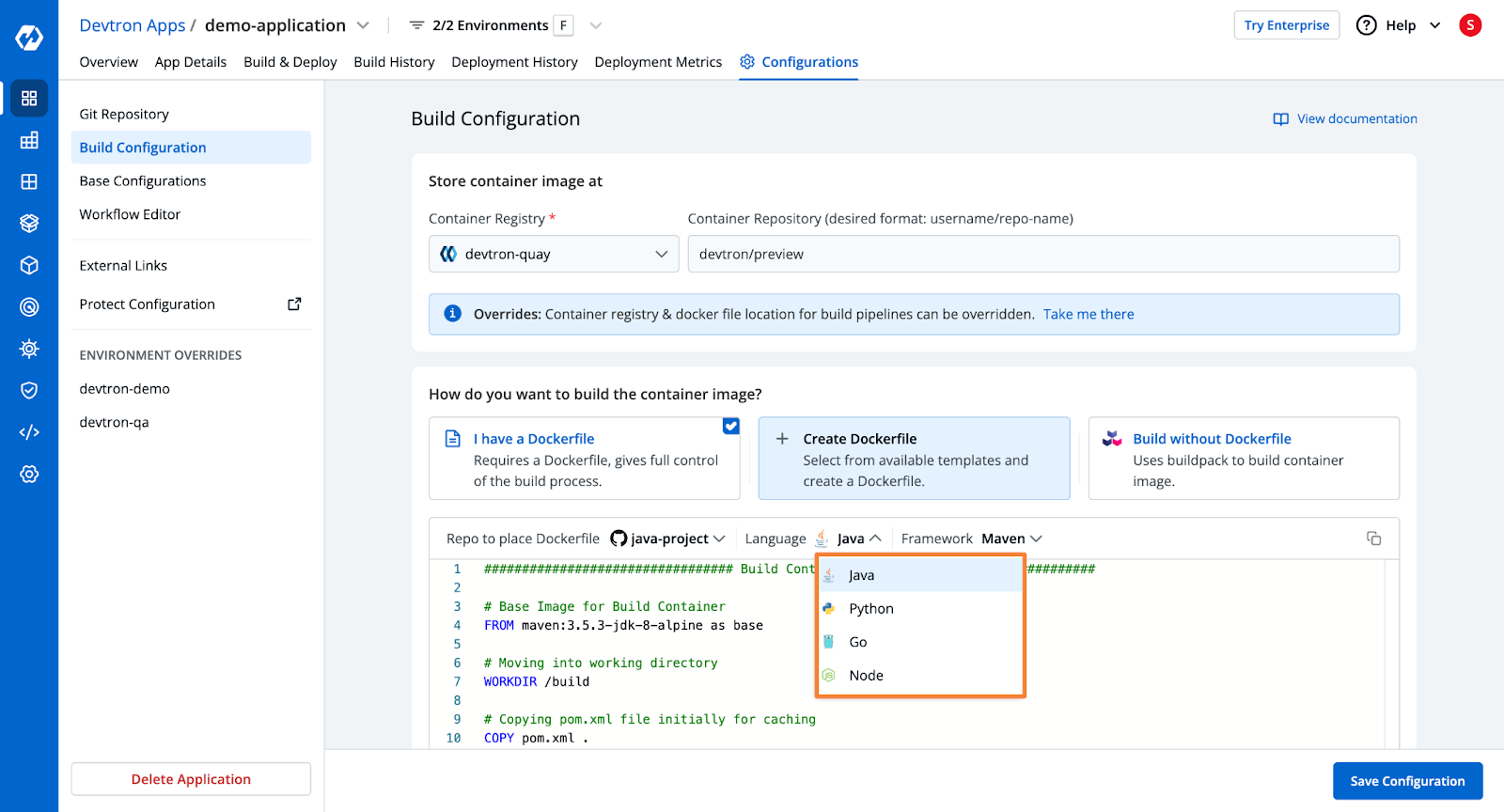

Step 2: Configure the Build

- Devtron will pull code from the repository and build the Docker container.

- You need to configure an OCI Container Registry.

- Choose from three build options:

  - Use an existing Dockerfile

  - Create a Dockerfile (using Devtron's template for Go applications)

  - Use Buildpacks

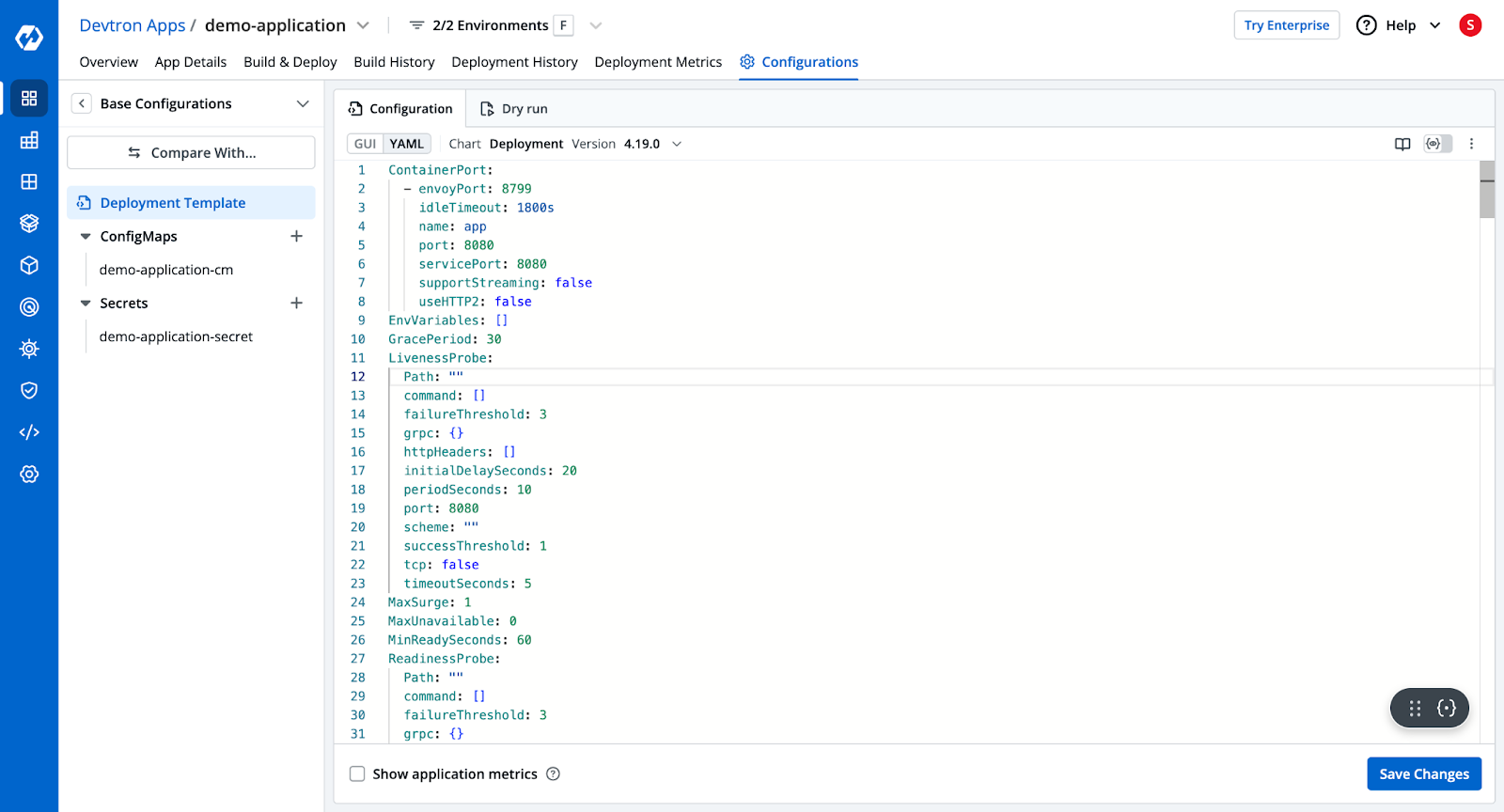

Step 3: Deployment Configurations

- Devtron provides a pre-configured YAML template for Kubernetes deployment.

- Configure ingress, autoscalers, and other deployment settings.

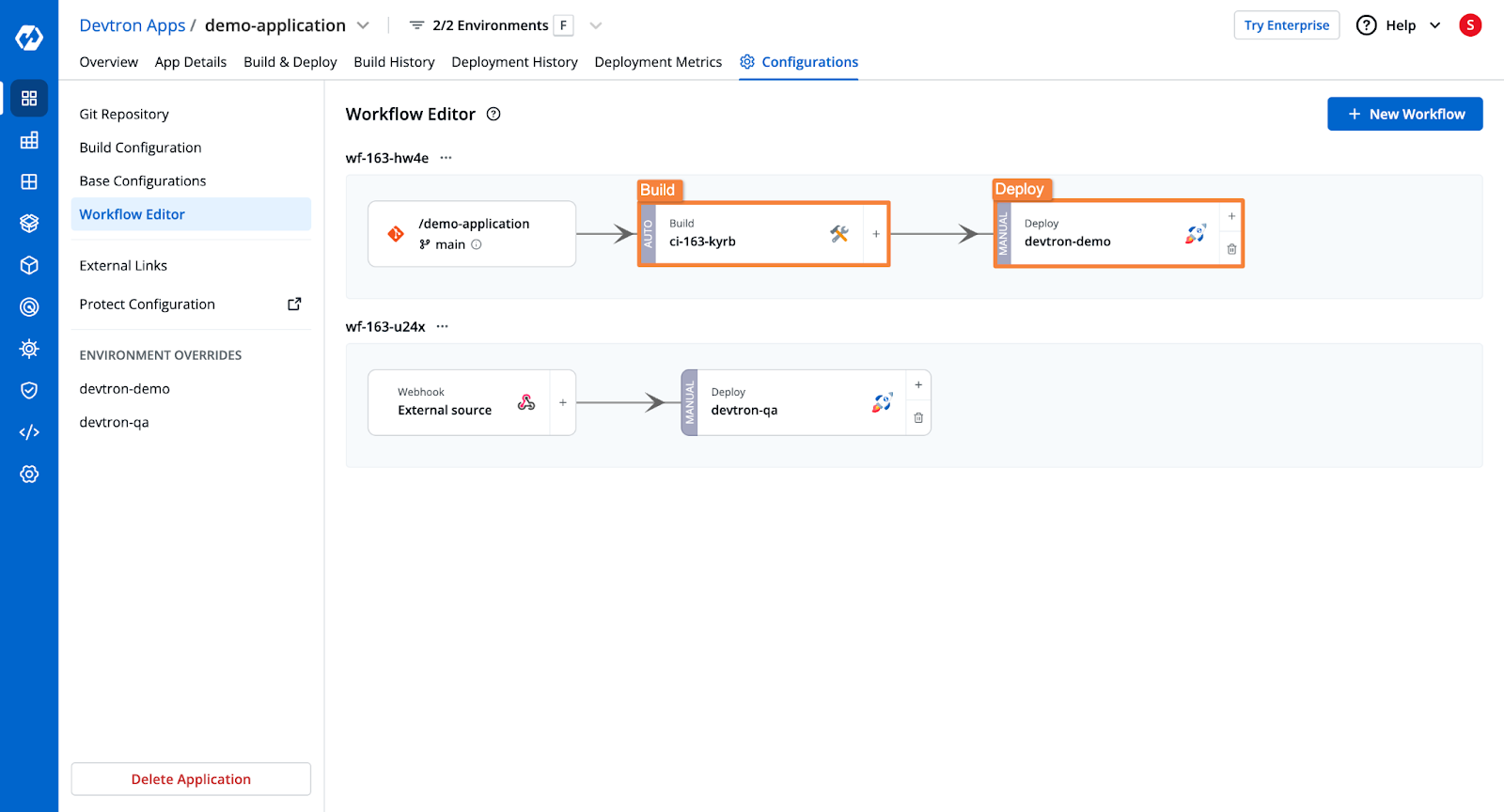

Step 4: Create the CI/CD Pipelines

- The CI pipeline will build the application and push the image to a registry.

- The CD pipeline will trigger deployments in the Kubernetes cluster.

- Configure Pre and Post Stages (e.g., security scanning, unit testing).

Please check the documentation to learn more about the pipeline configurations.

Step 5: Trigger the Build and Deploy Pipelines

- Select the Git branch and trigger the build stage.

- Once the build is complete, trigger the deployment stage.

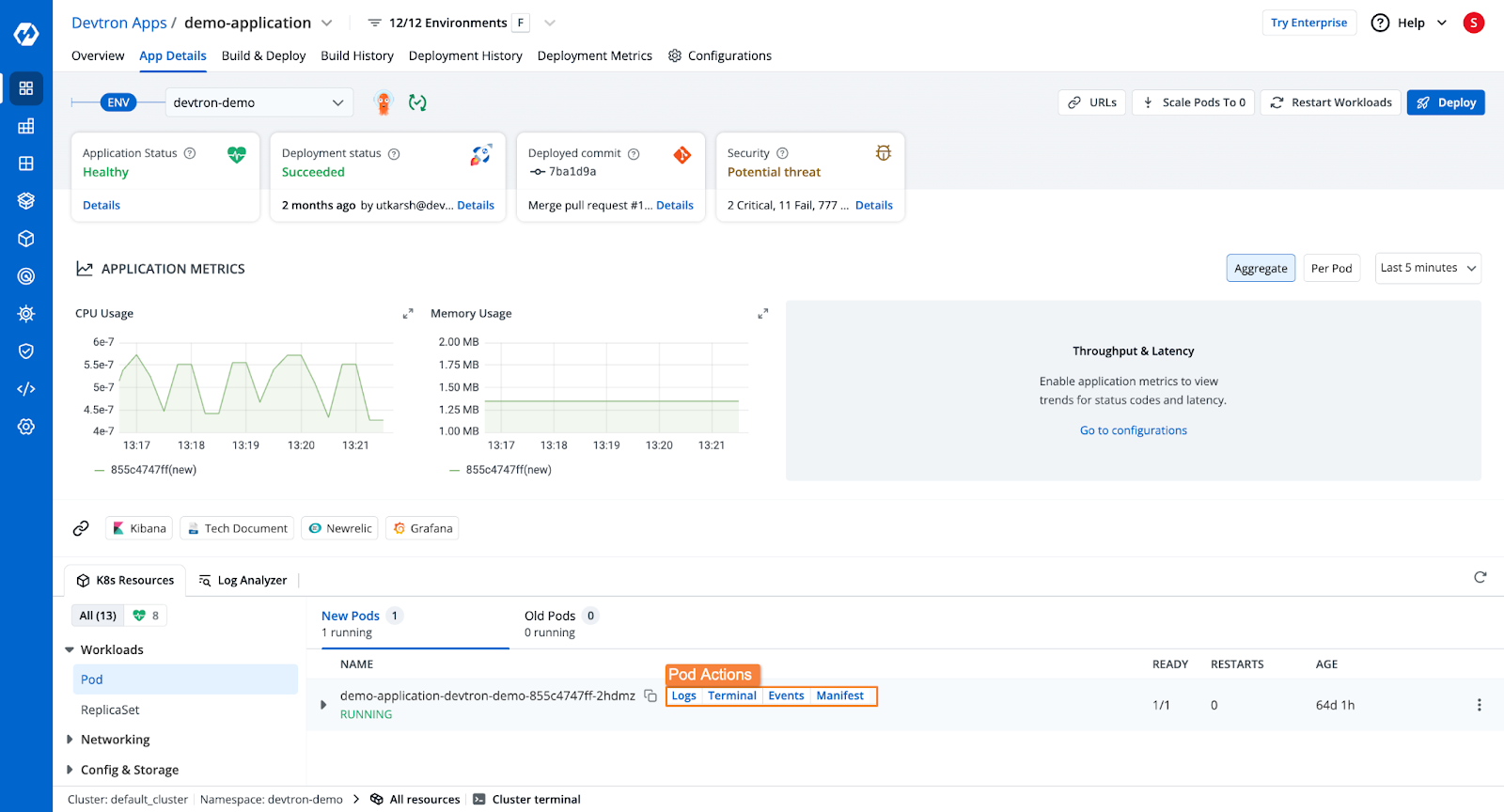

- Devtron will deploy the application and show:

  - Deployment status

  - Application health

  - Kubernetes resource details

  - Security vulnerabilities

  - Rollback options in case of errors

Once the application is deployed, you will be able to see the application's health, deployment status, security vulnerabilities, the Kubernetes resources of the application, and more.

Method 2: Deploying Go Applications Manually to Kubernetes

Step 1: Create the Dockerfile

A Dockerfile is a set of instructions to build a container image. Below is the Dockerfile to containerize your Go application:

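    # Build stage: compile the Go binary
    FROM golang:1.16 AS builder
    WORKDIR /app
    # Copy the module files first so the dependency layer can be cached
    COPY go.mod go.sum ./
    RUN go mod download
    COPY . .
    # Disable cgo so the binary is statically linked; distroless/static ships no libc
    RUN CGO_ENABLED=0 go build -o main .
    # Runtime stage: a minimal image that contains only the compiled binary
    FROM gcr.io/distroless/static
    WORKDIR /app
    COPY --from=builder /app/main .
    CMD ["./main"]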

Step 2: Build and Push the Docker Image

- Run the following command to build the Docker image:

docker build -t devtron/goapp:v1 .

- Push the image to DockerHub:

docker push devtron/goapp:v1

Step 3: Creating the Kubernetes Deployment and Service Manifests

- Create a deployment.yaml file:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: go-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: go
      template:
        metadata:
          labels:
            app: go
        spec:
          containers:
            - name: go-container
              image: devtron/goapp:v1
              ports:
                - containerPort: 8080

- Create a service.yaml file:

    apiVersion: v1
    kind: Service
    metadata:
      name: go-service
    spec:
      selector:
        app: go
      ports:
        - protocol: TCP
          port: 80
          targetPort: 8080
      type: NodePort

Step 4: Deploy to Kubernetes

Run the following command to apply the manifests:

kubectl apply -f deployment.yaml -f service.yaml

Your Go application is now deployed to Kubernetes!

Common Challenges and Solutions

1. Container Image Size Management

- Use Multi-Stage Builds to separate build and runtime environments.

- Use Lightweight Base Images like Alpine or Distroless to reduce size.

2. Resource Management

- Set Memory and CPU Limits to avoid overconsumption.

- Implement Autoscaling (HPA) to handle varying workloads.

3. Deployment Strategies

- Use Rolling Updates to ensure zero-downtime deployments.

- Handle Graceful Shutdown to avoid breaking live traffic.
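Graceful shutdown is largely an application concern. Kubernetes sends the container a SIGTERM when it stops a pod (for example, during a rolling update) and then waits for a grace period before killing it. Below is a minimal sketch of how a Go HTTP server might handle that signal using only the standard library. The helper names `newServer` and `run` and the timeout values are assumptions for illustration, not part of the sample application.

```go
package main

import (
	"context"
	"errors"
	"log"
	"net/http"
	"os/signal"
	"syscall"
	"time"
)

// shutdownTimeout is an assumed value. Keep it below the pod's
// terminationGracePeriodSeconds, which defaults to 30 seconds.
const shutdownTimeout = 20 * time.Second

// newServer builds an HTTP server with a single placeholder route.
func newServer(addr string) *http.Server {
	mux := http.NewServeMux()
	mux.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		w.Write([]byte("ok\n"))
	})
	return &http.Server{
		Addr:              addr,
		Handler:           mux,
		ReadHeaderTimeout: 5 * time.Second,
	}
}

// run serves until ctx is cancelled, then drains in-flight requests.
func run(ctx context.Context, srv *http.Server) error {
	errCh := make(chan error, 1)
	go func() { errCh <- srv.ListenAndServe() }()

	select {
	case err := <-errCh:
		return err // the server failed to start or stopped unexpectedly
	case <-ctx.Done():
	}

	// Stop accepting new connections and wait for active requests to finish.
	shutdownCtx, cancel := context.WithTimeout(context.Background(), shutdownTimeout)
	defer cancel()
	return srv.Shutdown(shutdownCtx)
}

func main() {
	// SIGTERM is what Kubernetes sends; SIGINT covers Ctrl+C during local runs.
	ctx, stop := signal.NotifyContext(context.Background(), syscall.SIGINT, syscall.SIGTERM)
	defer stop()

	if err := run(ctx, newServer(":8080")); err != nil && !errors.Is(err, http.ErrServerClosed) {
		log.Fatalf("server error: %v", err)
	}
	log.Println("server stopped cleanly")
}
```

The 20-second timeout is deliberately shorter than the default 30-second termination grace period, so the server has a chance to finish open requests before the container is killed.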

Conclusion

In this blog, we explored two approaches for deploying Go applications on Kubernetes:

- Automated Devtron Deployment with built-in CI/CD pipelines and advanced configurations.

- Manual Kubernetes Deployment using Docker and YAML manifests.

Using Devtron simplifies Kubernetes deployments, reducing manual effort and improving efficiency. Start deploying applications today using Devtron’s platform!