In Kubernetes, the smallest deployable unit is a pod, and pods run on nodes. Nodes are the machines (virtual or physical) that run your containerized workloads with the help of the kubelet and a container runtime. To learn about the different components of Kubernetes and how they work, please check out this blog. With so many types of nodes available, the obvious question is: which nodes are the right fit for our pods?

The process of placing a pod on a particular node is called scheduling. However, keep in mind that Kubernetes is a highly dynamic orchestrator in which changes happen frequently. So even if your pods are scheduled on some nodes right now, Kubernetes may evict them later if the node comes under disk pressure, memory pressure, or another adverse condition.

So scheduling your workloads onto the right nodes is one of the most important things to configure correctly in your Kubernetes cluster. Paying careful attention to scheduling up front will save you from troubleshooting issues in staging and production environments. One of the advanced scheduling mechanisms that Kubernetes provides is Taints and Tolerations.

In this blog, you will learn how to schedule workloads in Kubernetes using Taints and Tolerations.

What are taints & tolerations?

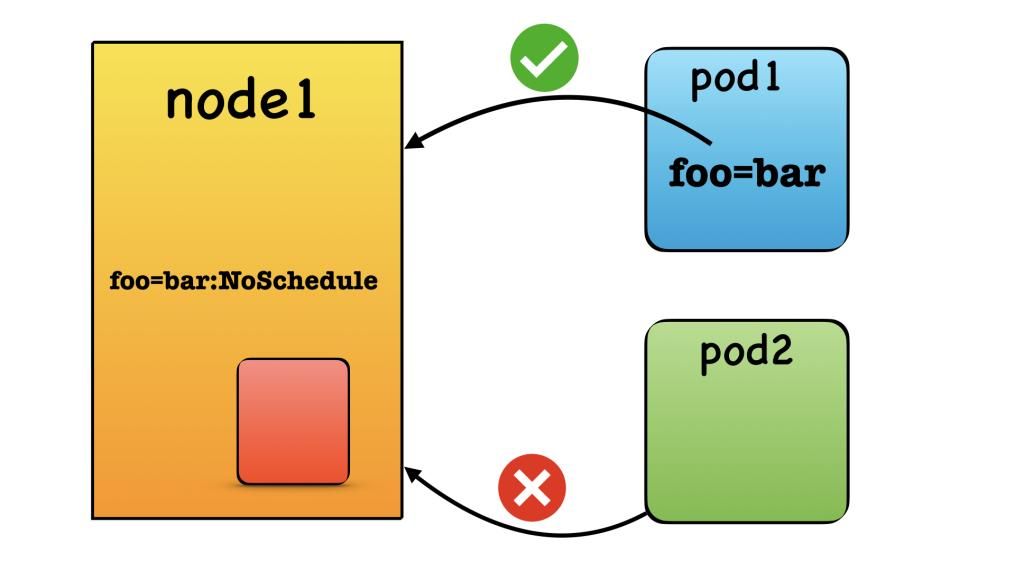

Taints and tolerations are one of the more advanced ways of scheduling workloads on Kubernetes. They are used to restrict which workloads can be scheduled on a particular node. As an analogy, a taint is a lock placed on the node. Unless a pod has the matching key for that lock, it will not be scheduled on the node. Tolerations act as the key to the taint on the node.

Since taints act as locks, we apply them to Kubernetes nodes, and every pod that does not tolerate them will be repelled from the tainted node. It's a way of telling Kubernetes not to schedule those pods on the node in question. Only pods that have the correct key, i.e., a matching toleration, can be scheduled on the tainted node.

Please note that taints are applied to nodes; they are a property of the node. You should understand that it's the node saying "I don't want this set of pods." The node is not saying that it has some love for certain types of pods.

Here's the format of a taint:

```
key=value:effect
```
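To make the format concrete, here is a minimal Python sketch that splits a taint string into its three parts. `parse_taint` and the `Taint` class are hypothetical helpers for illustration only, not part of kubectl or any Kubernetes client library. The sketch also accepts the `key:effect` form without a value, which kubectl allows as well.

```python
from dataclasses import dataclass
from typing import Optional

# The three effects Kubernetes accepts on a taint.
VALID_EFFECTS = {"NoSchedule", "PreferNoSchedule", "NoExecute"}


@dataclass(frozen=True)
class Taint:
    key: str
    value: Optional[str]  # the value is optional: "key:effect" is also valid
    effect: str


def parse_taint(spec: str) -> Taint:
    """Hypothetical helper: split 'key=value:effect' (or 'key:effect') into parts."""
    key_value, sep, effect = spec.rpartition(":")
    if not sep or effect not in VALID_EFFECTS:
        raise ValueError(f"invalid taint {spec!r}: effect must be one of {sorted(VALID_EFFECTS)}")
    key, has_value, value = key_value.partition("=")
    if not key:
        raise ValueError(f"invalid taint {spec!r}: key is empty")
    return Taint(key=key, value=value if has_value else None, effect=effect)


print(parse_taint("foo=bar:PreferNoSchedule"))
# Taint(key='foo', value='bar', effect='PreferNoSchedule')
```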

Notice the effect at the end. The effect describes what happens to pods that do not tolerate the taint, i.e., pods that don't meet the node's criteria.

There are three effects that can be applied to a taint:

- NoSchedule: NoSchedule means do not schedule the pods that cannot tolerate the taints.

2. preferNoSchedule: It means that try not scheduling the pods on the nodes if the pods don’t tolerate the taints but in case there are no more nodes left then you can schedule.

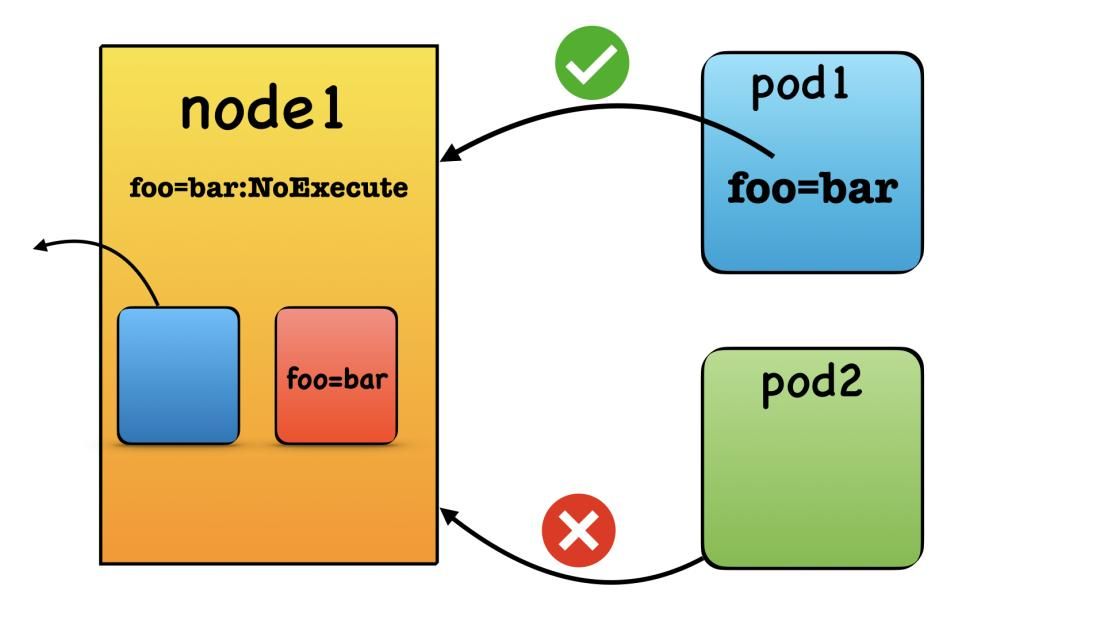

3. NoExecute: This is the most aggressive one and it means that pods that were scheduled earlier on the nodes and out of those pods that don’t satisfy the taints will be evicted.
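The three effects can be summarized in a small Python sketch. It is a simplified model, assuming a node with a single taint and a pod that either does or does not tolerate it; the function names are hypothetical. It also captures one NoExecute detail from the Kubernetes documentation: a toleration may set `tolerationSeconds`, in which case a running pod stays on the node for that many seconds before being evicted.

```python
from typing import Optional

HARD_EFFECTS = ("NoSchedule", "NoExecute")


def new_pod_outcome(effect: str, tolerated: bool) -> str:
    """What the scheduler does with a NEW pod when a node has one taint."""
    if effect not in ("NoSchedule", "PreferNoSchedule", "NoExecute"):
        raise ValueError(f"unknown effect {effect!r}")
    if tolerated:
        return "allowed"
    if effect in HARD_EFFECTS:
        return "filtered out"  # hard rule: this node is never chosen for the pod
    return "avoided if possible"  # PreferNoSchedule: soft rule, node is scored lower


def running_pod_eviction_delay(effect: str, tolerated: bool,
                               toleration_seconds: Optional[int] = None) -> Optional[int]:
    """Seconds until an already RUNNING pod is evicted after the taint is added.

    Returns None when the taint never evicts the pod.
    """
    if effect != "NoExecute":
        return None  # NoSchedule and PreferNoSchedule leave running pods alone
    if not tolerated:
        return 0  # no matching toleration: evicted immediately
    return toleration_seconds  # None: stays indefinitely; N: evicted after N seconds


print(new_pod_outcome("PreferNoSchedule", tolerated=False))  # avoided if possible
print(running_pod_eviction_delay("NoExecute", tolerated=True, toleration_seconds=300))  # 300
```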

Node Controller

Kubernetes has the kube-controller-manager, which runs multiple types of controllers. Each controller is responsible for managing a certain aspect of the cluster. One of these is the Node Controller, which manages nodes. It also automatically taints a node based on certain node conditions. Some of these taints are as follows:

• node.kubernetes.io/not-ready: This taint is applied whenever the node is not ready. A Node's status contains a list of conditions, including one of type Ready; this taint is applied when that condition's status is False.

• node.kubernetes.io/memory-pressure: This taint is applied whenever the node is under memory pressure, i.e., it is low on memory.

You can find a comprehensive list of similar taints in the official Kubernetes documentation.

These taints are applied to nodes by the Node Controller, and during scheduling, the scheduler checks them and places workloads accordingly.
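As a rough sketch of this behavior, the Python snippet below maps a node's conditions to the taints the Node Controller adds. It is a simplified model covering only a few conditions, and the mapping table is written by hand for illustration rather than taken from the controller's source; the official Kubernetes documentation has the full list.

```python
from typing import Dict, List, Tuple

# Simplified, hand-written model: (condition type, status) -> (taint key, effect) pairs.
CONDITION_TAINTS: Dict[Tuple[str, str], List[Tuple[str, str]]] = {
    ("Ready", "False"): [("node.kubernetes.io/not-ready", "NoSchedule"),
                         ("node.kubernetes.io/not-ready", "NoExecute")],
    ("Ready", "Unknown"): [("node.kubernetes.io/unreachable", "NoSchedule"),
                           ("node.kubernetes.io/unreachable", "NoExecute")],
    ("MemoryPressure", "True"): [("node.kubernetes.io/memory-pressure", "NoSchedule")],
    ("DiskPressure", "True"): [("node.kubernetes.io/disk-pressure", "NoSchedule")],
}


def taints_for_conditions(conditions: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return the (key, effect) taints implied by a node's condition statuses."""
    taints: List[Tuple[str, str]] = []
    for cond_type, status in conditions.items():
        taints.extend(CONDITION_TAINTS.get((cond_type, status), []))
    return taints


# A node that is Ready but low on memory.
print(taints_for_conditions({"Ready": "True", "MemoryPressure": "True"}))
# [('node.kubernetes.io/memory-pressure', 'NoSchedule')]
```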

Scheduling a pod using Taints and Tolerations

Now that you understand how taints and tolerations work, let's look at how to use them to schedule workloads in a Kubernetes cluster. Scheduling a pod using taints and tolerations involves the following steps:

- Add a taint to the Node

- Create a Pod manifest and use the correct toleration

- Apply the Pod manifest to the cluster

Let's look at each step in detail. You can follow along with the steps below; make sure you have a cluster running.

Add a Taint to the Node

As we have already seen, the Node Controller may automatically add some taints to a node when certain conditions are met. However, if you want to add a taint to a node manually, you can do so using the command below.

```shell
kubectl taint nodes <node-name> key=value:NoSchedule
```

You could have nodes with specific configurations. For example, you may have some highly reliable nodes designed for production workloads, and some cheaper, less reliable nodes suitable for a development environment. In this case, you would want to keep production pods off the less reliable nodes, so you can taint those nodes to repel them. Pods meant for development then need a matching toleration to run there.
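The production/development scenario above can be modeled with a minimal Python sketch. The node names and the `env=dev` taint are hypothetical, the sketch only checks the hard effects (NoSchedule and NoExecute), and it matches tolerations by exact key, value, and effect, which is a simplification of the real matching rules.

```python
from typing import Dict, List, Tuple

# (key, value, effect): a simplified shape used for both taints and tolerations.
Taint = Tuple[str, str, str]
HARD_EFFECTS = {"NoSchedule", "NoExecute"}

# Hypothetical cluster: one reliable production node, one cheaper dev node.
# The dev node carries the hypothetical taint env=dev:NoSchedule.
NODES: Dict[str, List[Taint]] = {
    "prod-node-1": [],
    "cheap-node-1": [("env", "dev", "NoSchedule")],
}


def allowed_nodes(nodes: Dict[str, List[Taint]], tolerations: List[Taint]) -> List[str]:
    """Names of nodes whose hard taints are all matched exactly by a toleration."""
    return [
        name
        for name, taints in nodes.items()
        if all(t in tolerations for t in taints if t[2] in HARD_EFFECTS)
    ]


print(allowed_nodes(NODES, []))                              # ['prod-node-1']
print(allowed_nodes(NODES, [("env", "dev", "NoSchedule")]))  # ['prod-node-1', 'cheap-node-1']
```

Note that the development pod can still land on prod-node-1: the toleration lets it onto the cheap node but does not restrict it to that node.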

If you wish to remove a particular taint from a node, you can edit the Node manifest using `kubectl edit node <node-name>`, or you can append a `-` to the taint in the previous command, as shown below:

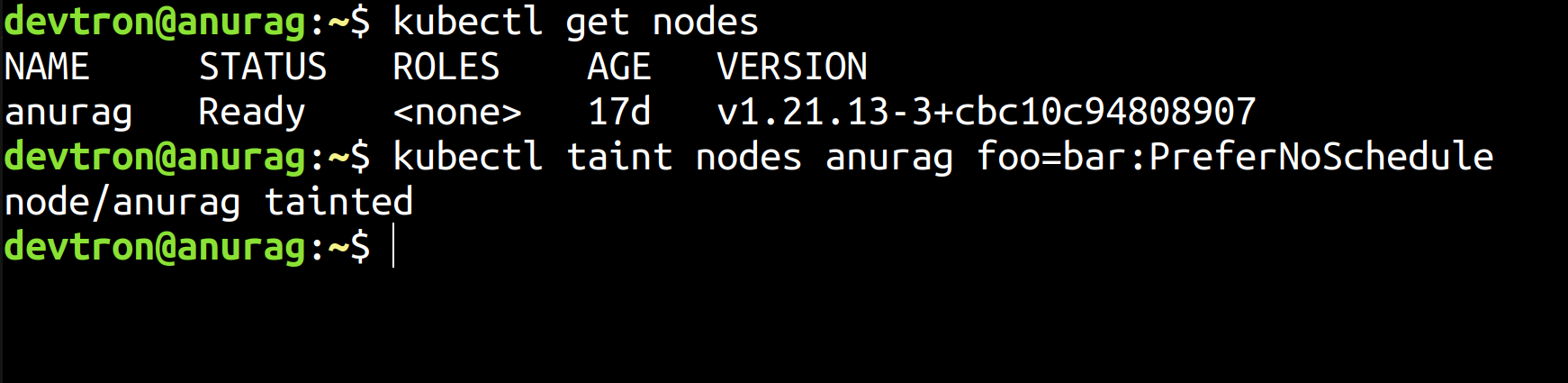

```shell
kubectl taint nodes <node-name> key=value:NoSchedule-
```

As an example, let's say that you want to taint a node named anurag with the key-value pair foo=bar and the effect PreferNoSchedule. You can use the command below to add this taint to the node.

```shell
kubectl taint nodes anurag foo=bar:PreferNoSchedule
```

This taint tells the scheduler to avoid placing pods that don't tolerate it on the anurag node. However, since PreferNoSchedule is a soft rule, the Kubernetes scheduler will try to find a different node for the pod, but if no other suitable node exists, it may still place the pod on anurag.

On the other hand, with a hard rule, i.e., NoSchedule, a pod that doesn't tolerate the taint would stay in the Pending state if no other node were available.
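The difference between the soft and hard rules can be sketched with a toy scheduler in Python. `pick_node` and the extra node `worker-2` are hypothetical, and the logic is deliberately simplified: it filters out nodes with untolerated NoSchedule/NoExecute taints and then prefers the node with the fewest untolerated PreferNoSchedule taints. The real kube-scheduler combines this with many other scoring factors.

```python
from typing import Dict, List, Optional, Tuple

Taint = Tuple[str, str, str]  # (key, value, effect); tolerations use the same shape here
HARD_EFFECTS = {"NoSchedule", "NoExecute"}


def pick_node(nodes: Dict[str, List[Taint]], tolerations: List[Taint]) -> Optional[str]:
    """Toy scheduler. Returns a node name, or None if the pod would stay Pending."""
    candidates = []
    for name, taints in nodes.items():
        untolerated = [t for t in taints if t not in tolerations]
        if any(effect in HARD_EFFECTS for _, _, effect in untolerated):
            continue  # hard rule: node filtered out entirely
        # Soft rule: each untolerated PreferNoSchedule taint counts against the node.
        penalty = sum(1 for _, _, effect in untolerated if effect == "PreferNoSchedule")
        candidates.append((penalty, name))
    if not candidates:
        return None
    return min(candidates)[1]  # lowest penalty wins; ties broken by name


soft = {"anurag": [("foo", "bar", "PreferNoSchedule")]}
hard = {"anurag": [("foo", "bar", "NoSchedule")]}

print(pick_node(soft, []))                      # anurag (only node, soft rule bends)
print(pick_node(hard, []))                      # None (pod stays Pending)
print(pick_node({**soft, "worker-2": []}, []))  # worker-2 (untainted node preferred)
```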

Add a toleration to the Pod

Now let's say that we want to schedule a pod on the node that we just tainted. To allow the pod to be scheduled on that node, we need to add the proper toleration to the pod. Going back to the earlier analogy, we have to give the pod the right key to open the lock on the node.

To add a toleration to a pod, you write its configuration under the spec section of the Pod manifest. Let's assume that we want to schedule an Nginx pod. You can use the manifest below to create an Nginx pod that tolerates the taint on the node.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
spec:
  containers:
    - name: nginx-container
      image: nginx
  tolerations:
    - key: "foo"
      operator: "Equal"
      value: "bar"
      effect: "PreferNoSchedule"
```

The above toleration allows the pod to be scheduled on a node that has the taint foo=bar:PreferNoSchedule. On its own, it does not force the pod onto that node.
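The matching rules behind this manifest can be sketched in Python. The sketch mirrors the rules described in the Kubernetes documentation: the operator defaults to Equal, Exists ignores the value, an empty effect matches every effect, and an empty key with Exists tolerates every taint. The classes and function names are hypothetical, written only for illustration.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str


@dataclass(frozen=True)
class Toleration:
    key: str = ""             # an empty key is only valid with operator Exists
    operator: str = "Equal"   # Kubernetes defaults the operator to Equal
    value: str = ""
    effect: str = ""          # an empty effect matches every effect


def toleration_matches(tol: Toleration, taint: Taint) -> bool:
    """Does one toleration match one taint?"""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        # Exists ignores the value; an empty key matches every taint key.
        return tol.key == "" or tol.key == taint.key
    if tol.operator == "Equal":
        return tol.key == taint.key and tol.value == taint.value
    raise ValueError(f"unsupported operator {tol.operator!r}")


def pod_tolerates(tolerations: Iterable[Toleration], taint: Taint) -> bool:
    """A pod tolerates a taint if any one of its tolerations matches it."""
    return any(toleration_matches(t, taint) for t in tolerations)


taint = Taint("foo", "bar", "PreferNoSchedule")
from_manifest = Toleration(key="foo", operator="Equal", value="bar", effect="PreferNoSchedule")

print(pod_tolerates([from_manifest], taint))                            # True
print(pod_tolerates([Toleration(key="foo", value="baz")], taint))       # False (value differs)
print(pod_tolerates([Toleration(key="foo", operator="Exists")], taint)) # True (value ignored)
```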

Tolerate Pods with Devtron

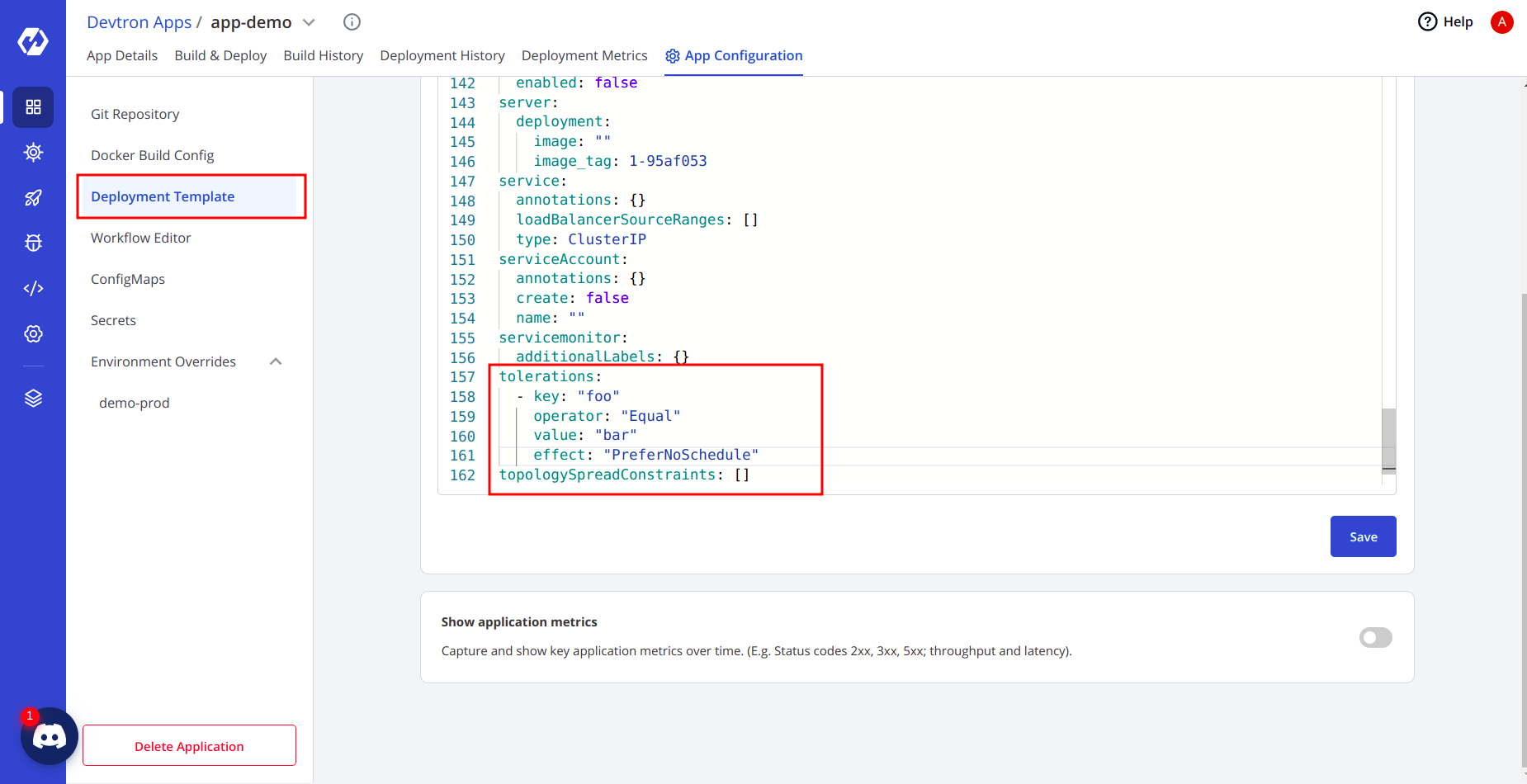

With Devtron, you can add tolerations to your pods through the deployment template.

Go to your application > App Configuration > Deployment Template.

In the deployment template, search for tolerations and add them there.

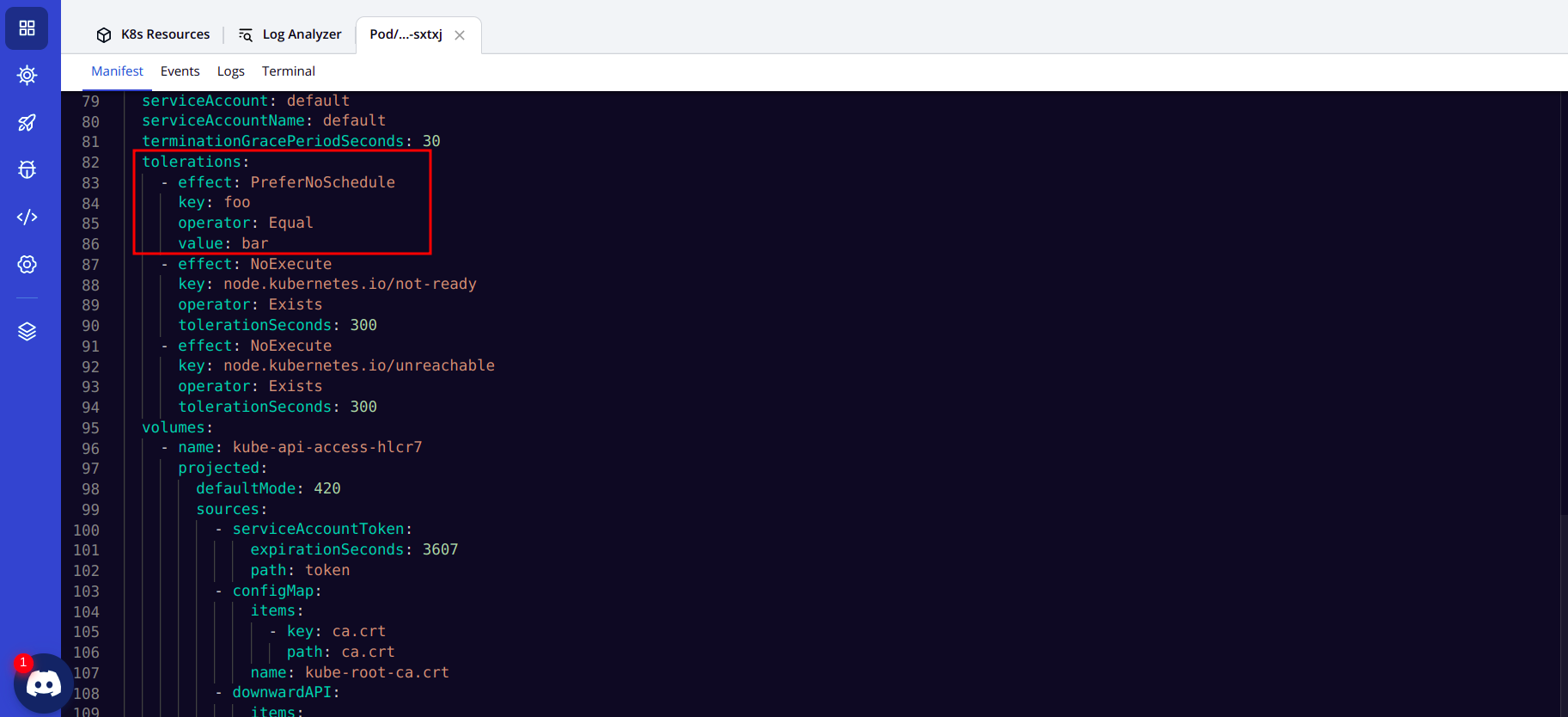

I have a demo application running in the background, and I will add tolerations to it.

After saving the deployment template, I will run the deployment pipeline again for the changes to take effect.

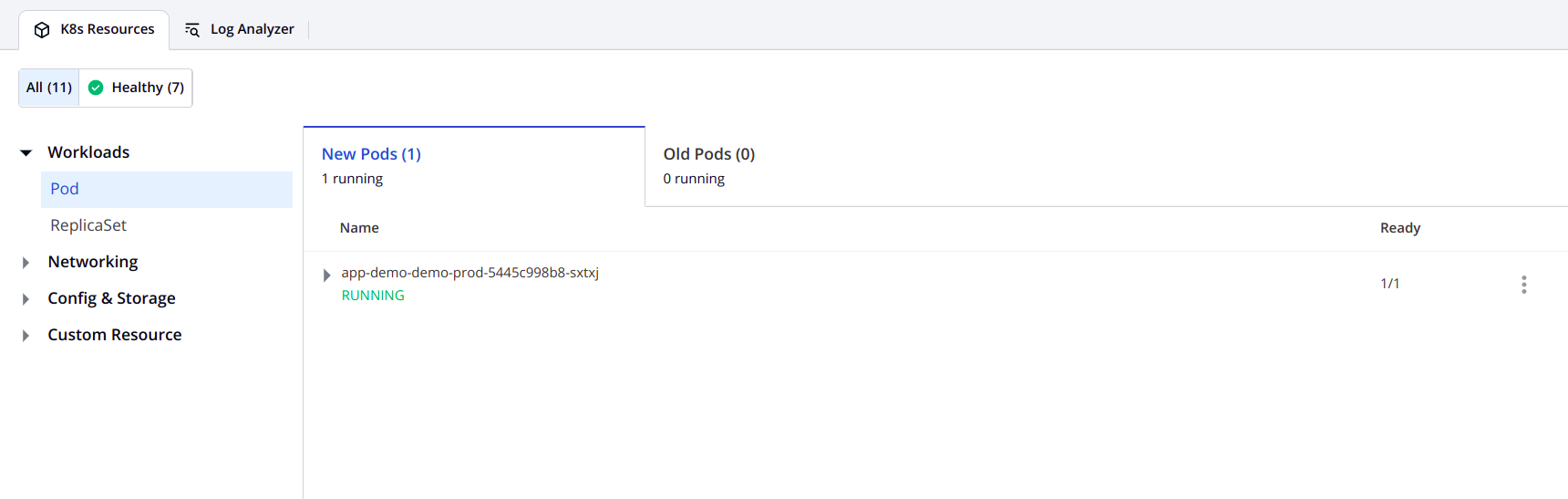

After the pipeline runs, you will see the status of all your resources. All the resources are now healthy.

Now let's check whether the tolerations were applied during the new deployment. Hover over the pod, and you will see that the tolerations were applied to it.

Differences between NodeAffinity & Taints

In the case of NodeAffinity, we are telling the pods that they love some types of nodes, and the scheduler will try to send the pods to those nodes. So, is it mandatory that the pods be scheduled only on the nodes they have an affinity towards?

It depends on the type of affinity. With preferred affinity (preferredDuringSchedulingIgnoredDuringExecution), no: nothing says that the pods hate any other nodes, so if the nodes they love are full or unavailable, the scheduler will place them on other nodes. With required affinity (requiredDuringSchedulingIgnoredDuringExecution), the rule is mandatory, and the pod stays Pending if no matching node is available.

In the case of taints and tolerations, the node is in focus. The node declares that it doesn't want a certain set of pods, and the taint's effect determines how strictly that is enforced. Again, pods that tolerate a taint can still be scheduled on other nodes, because untainted nodes impose no criteria.
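Because the two mechanisms work from opposite sides, they are often combined: the taint keeps other pods off a node, and a required label match keeps the tolerating pod on it. The Python sketch below models a hypothetical dedicated GPU node that carries both a `hardware=gpu` label and a `hardware=gpu:NoSchedule` taint. The node names and labels are hypothetical, and the `node_selector` check stands in for a required node affinity rule.

```python
from typing import Dict, List, Tuple

Taint = Tuple[str, str, str]  # (key, value, effect)
HARD_EFFECTS = {"NoSchedule", "NoExecute"}

# Hypothetical cluster: gpu-1 is labeled AND tainted; general-1 is neither.
NODES = {
    "gpu-1": {"labels": {"hardware": "gpu"}, "taints": [("hardware", "gpu", "NoSchedule")]},
    "general-1": {"labels": {}, "taints": []},
}
GPU_TOLERATION: Taint = ("hardware", "gpu", "NoSchedule")


def feasible_nodes(tolerations: List[Taint], node_selector: Dict[str, str]) -> List[str]:
    """Nodes that pass both the taint check and the required label match."""
    result = []
    for name, node in NODES.items():
        # Taints: the node repels the pod unless every hard taint is tolerated.
        if any(t[2] in HARD_EFFECTS and t not in tolerations for t in node["taints"]):
            continue
        # Selector / required affinity: the pod demands these labels on the node.
        if any(node["labels"].get(k) != v for k, v in node_selector.items()):
            continue
        result.append(name)
    return result


print(feasible_nodes([], {}))                                 # ['general-1']
print(feasible_nodes([GPU_TOLERATION], {}))                   # ['gpu-1', 'general-1']
print(feasible_nodes([GPU_TOLERATION], {"hardware": "gpu"}))  # ['gpu-1']
print(feasible_nodes([], {"hardware": "gpu"}))                # [] (pod stays Pending)
```

Only the pod with both the toleration and the selector is restricted to gpu-1: the taint keeps other pods off the node, and the selector keeps this pod on it.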

Conclusion

There are many different ways to schedule workloads on Kubernetes. One commonly used method is applying taints and tolerations to your nodes and pods. Taints restrict workloads from being scheduled on a particular node.

Tolerations, on the other hand, are defined in the pod and allow pods to be scheduled on tainted nodes. Together, they provide a lot of flexibility to define different rules and strategies for scheduling pods on particular nodes.

Do star the project if you like Devtron.